Enhancing Vision-Based Cigarette Smoke Detection in Smart Vehicles by Transfer Learning

Copyright ⓒ 2025 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Many companies are investing in autonomous vehicles, with several models already on the market and their usage increasing daily. Smart vehicles use various sensors to enhance passenger safety, but challenges remain, particularly for individuals with dyspnea or motion sickness. Cigarette smoke, which contains harmful substances such as carbon monoxide (CO), poses risks to passengers and can interfere with the vehicle’s sensor ecosystem through its odor and particulate matter. Detecting cigarette smoke is difficult because its texture, color, and shape vary. To address this, we propose a Deep Learning (DL)-based detection system using Transfer Learning (TL). A VGG19 network pre-trained on ImageNet was fine-tuned for feature extraction and trained on a cigarette smoke dataset. Our model achieved a classification accuracy of 96.05%, surpassing the existing architectures against which it was compared and meeting our expectations.

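Abstract (translated from the Korean original)

Many companies today are investing in autonomous vehicles; several models are already on the market and their use is growing daily. Smart vehicles use a variety of sensors to improve passenger safety, but difficulties remain, particularly for people with dyspnea or motion sickness. Cigarette smoke, which contains harmful substances such as carbon monoxide (CO), not only endangers passengers but can also affect the vehicle's sensor ecosystem through its odor and fine particulate matter, and it is hard to detect because of its varied textures, colors, and shapes. To address these problems, this paper proposes a deep learning-based smoking detection system that uses transfer learning. A VGG19 network pre-trained on ImageNet was fine-tuned and retrained on a cigarette smoke dataset for feature extraction. The proposed model achieved a classification accuracy of 96.05%, surpassing existing models, and a comparative analysis against existing architectures confirmed that it meets the expectations set for the problem.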

Keywords:

Cigarette Smoke Detection, Smart Vehicle, Deep Learning, Transfer Learning, Automobile

Keywords (translated from the Korean original):

Smoking Detection, Smart Vehicle, Deep Learning, Transfer Learning, Automobile

Ⅰ. Introduction

Cigarette smoking remains the single most preventable cause of death and disease worldwide, and it is the leading cause of Chronic Obstructive Pulmonary Disease (COPD) and numerous other conditions [1]. It slowly damages the body and, when done in enclosed spaces, causes considerable suffering for non-smokers. As autonomous cars grow in popularity, it is crucial to consider the risks associated with smoking in vehicles. Smoking in a car harms the smoker and exposes passengers to secondhand smoke (SHS) and thirdhand smoke (THS), especially in self-driving cars. Non-smokers with respiratory issues who use autonomous cars may face prolonged exposure to CO because of the sealed windows in such vehicles. This could significantly affect passengers’ respiratory systems and potentially be fatal. SHS has been reported to be particularly hazardous in the relatively confined space of an autonomous car [2]. Opening car windows can reduce, but not eliminate, the danger. A 2011 study that monitored car trips involving smokers found that the concentration of fine particulate matter in cars where smoking occurred greatly exceeded international indoor air quality standards, posing a health threat to both children and adults. Similarly, according to the National Center, smoking one cigarette in a tightly closed vehicle can produce over 100 times the EPA’s 24-hour fine particle exposure limit [3]. These particles contain cancer-causing chemicals, can lodge deep in a person’s lungs, and irritate the respiratory system [4].

There are approximately 600 ingredients in cigarettes. When burned, they create more than 7,000 chemicals. Some of these chemicals are known to induce cancer and asthma, which can be fatal. One of these substances is CO; inhalation of CO is the leading cause of fatal poisoning in the industrialized world [5]. CO decreases the delivery of oxygen (O2) to the tissues by displacing O2 from hemoglobin and forming carboxyhemoglobin (COHb) [6]. The first line of treatment for CO poisoning is the administration of 100% O2 to displace CO from hemoglobin and restore O2 delivery to tissues [7]. In cases of severe CO poisoning, the only additional therapy available is hyperbaric O2. Unfortunately, hyperbaric chambers are unsuitable for emergency treatment, since they are relatively uncommon [8]. Even where hyperbaric chambers are available, delays in initiating therapy are inevitable because of the time needed to transport the patient, assemble trained personnel, and set up the chamber, and these delays often result in deaths. Another study reports that 50,000 individuals in the United States seek treatment in emergency departments due to CO poisoning. High levels of exposure cause headaches, nausea, chest pain, and shortness of breath, and may require ventilation and shock treatment [8]. Every year, at least 420 people die in the U.S. from accidental CO poisoning and more than 100,000 visit the emergency department for it.

Similarly, CO in cigarette smoke inside contemporary vehicles poses difficulties, especially for sensors monitoring exhaust emissions. The smoke can damage sensors and degrade their performance. In driverless cars, passenger smoking poses health risks and compromises the interior, reducing passenger experience. Without regular cleaning, dirt and debris can harm these sensors, impeding the vehicle’s ability to sense obstacles, assess its surroundings, and plan routes effectively [9]. Consequently, if passengers smoke inside, they put the other occupants’ health at risk. Notably, driverless vehicles are equipped with over 40 major sensor categories, which enhance their ability to perceive and navigate their surroundings safely [10].

Over the past few years, advances in DL have driven tremendous improvements in image detection, segmentation, and classification, while also playing a vital role across various industries, from self-driving research to trip forecasting and fraud prevention. Smoking is a major global issue that causes severe health crises [11]. The WHO estimates that around 8 million people die every year because of smoking [12]. Constructing an extensive dataset of cigar smoking and training a predictive model from scratch pose significant obstacles, which is why TL is often used for such classification problems. By employing TL principles, a DL architecture can leverage its prior knowledge and experience. This enables improved cigar smoke image classification and faster, more effective solutions in diverse contexts. Among CNN (convolutional neural network) architectures, VGG19 is particularly prominent. VGG19 comprises 16 convolutional layers, 5 pooling layers, and 3 fully-connected layers [13]. Studies [14] report the successful use of the VGG19 architecture for diverse classification problems through architectural modifications and the application of TL. TL with CNNs has been shown to work with high accuracy in many medical domains, such as diabetic retinopathy, Alzheimer’s disease, and skin lesion detection [15]-[20], among others. TL and CNNs are therefore adopted in our experiment; detecting cigar smoking with VGG19 under TL principles has rarely been attempted.

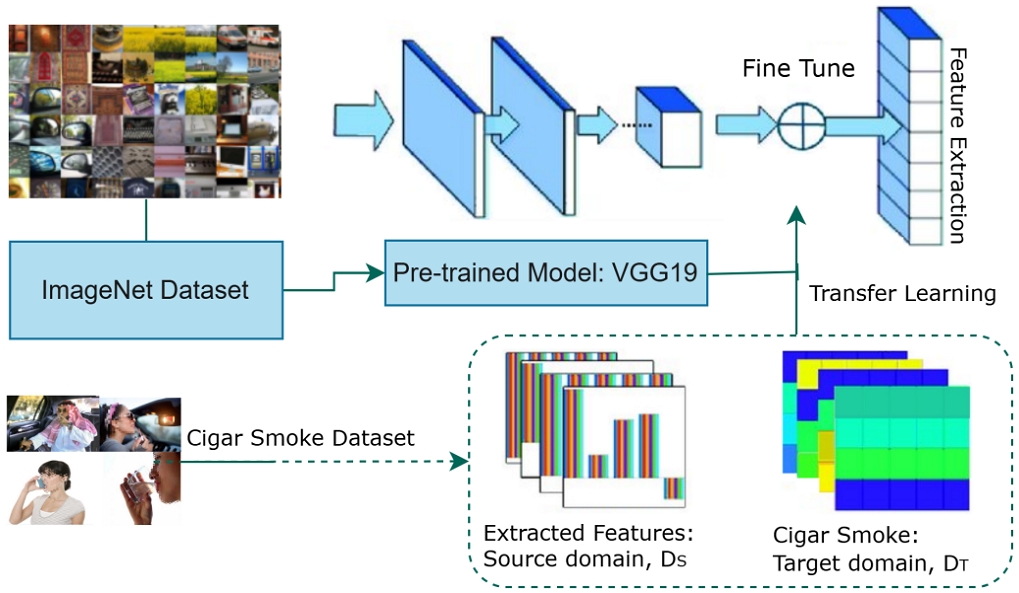

As illustrated in Fig. 1, supervised machine learning formally considers a source domain $D_S$ with learning task $K_S$ and a target domain $D_T$ with learning task $K_T$. TL aims to improve the learning of the target predictive function $\phi_T(\cdot)$ in $K_T$ using the knowledge in $D_S$ and $K_S$, where $D_S \neq D_T$ or $K_S \neq K_T$. In our research, $D_S$ represents the ImageNet and smoke datasets, with learning task $K_S = \{\Upsilon_S, \phi_S(\cdot)\}$, where $\Upsilon_S$ is the source label space and $\phi_S(\cdot)$ is the source predictive function that maps a new image feature $x_i$ to its label $y_i$. $D_T$ represents the datasets in this research, with learning task $K_T = \{\Upsilon_T, \phi_T(\cdot)\}$. As stated before, we want to enhance $\phi_T(\cdot)$ of the new modality, $D_T$, using the cigar smoke dataset, $D_S$, and the predictive function $\phi_S(\cdot)$ of $K_S$. For the convolution and feature map operations, $f[m, n]$ denotes the convolution filter, $g[u, v]$ the input image, and $o[u, v]$ the output feature map.
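With the filter $f[m, n]$, input image $g[u, v]$, and output feature map $o[u, v]$ named above, the convolution can be written in its textbook discrete form. This form is an assumption added for illustration rather than a formula taken from the source:

```latex
o[u, v] = (f * g)[u, v] = \sum_{m} \sum_{n} f[m, n] \, g[u - m, \, v - n]
```

Most DL libraries actually compute the closely related cross-correlation, $\sum_{m} \sum_{n} f[m, n] \, g[u + m, v + n]$, which differs only by a flip of the filter and is equivalent once the filter weights are learned.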

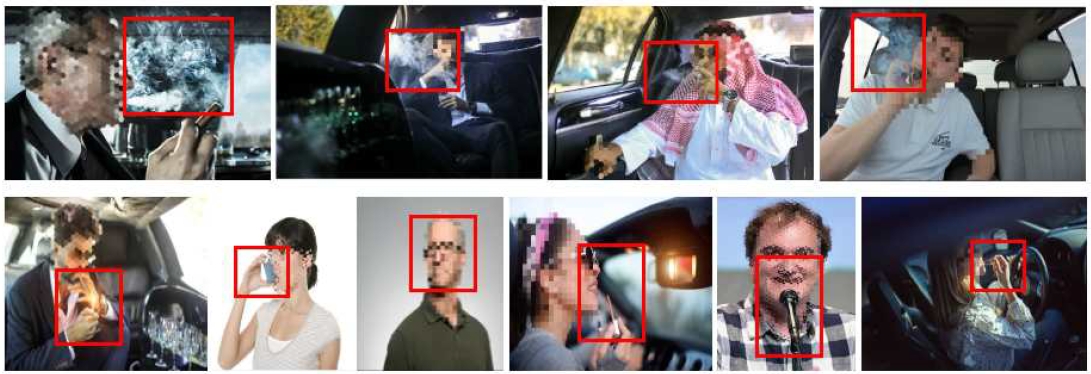

Overview of objects within the context of a car and different environmental problems such as holding small objects similar to cigarettes, and covering faces with a hand while using an inhaler in front of the mouth

The literature shows that the VGG19 architecture has been used successfully for various classification problems, both by modifying the architecture and by applying TL. In this paper, a VGG19-based architecture is proposed for classifying the presence of cigarette smoke in vehicles from images, with the number of layers varied to increase prediction success. A new smoking dataset was produced by collecting images from different online sources [21]-[24]. The smoking images in the dataset were used as input data for the DL architectures. The suggested models identify cigar smoke images using different numbers of Rectified Linear Unit (ReLU), pooling, and convolution layers (3Conv_Sigmoid, 4Conv_Sigmoid, and 6Conv_Sigmoid; 3Conv_SVM, 4Conv_SVM, and 6Conv_SVM_FE). The fully connected layer, activation function, and feature extraction (FE) processes of the original VGG19 architecture are then applied. The proposed models classify images as smoking or non-smoking. In this paper, the Sigmoid classifier layer used in VGG19 was also removed and replaced with a support vector machine (SVM) classifier combined with FE. The performance of the proposed models with the Sigmoid classifier and with the SVM classifier including FE was therefore examined alongside that of other recent classification models.

In summary, the supervised model we offer addresses current challenges and introduces new advancements to the field. The main contributions of this research are as follows:

- Introduces a new framework that can adopt a new modality (cigar smoke) within a smart car environment using DL and TL principles.

- Proposes TL-based techniques using VGG19 and feature extraction for classifying images as smoking or non-smoking, achieving higher accuracy.

- Offers a high-accuracy solution that addresses shortcomings of recent studies by correctly handling confusing objects such as lipstick, walkie-talkies, pens, and chocolate, as well as complex actions such as holding a phone vertically, drinking from a glass or bottle, sneezing into a folded hand, and covering the face with a hand while using an inhaler in front of the mouth.

- Evaluates the suggested approach through an in-depth analysis of the newly created smoker detection model on current and new datasets, and compares it with other recent CNN classification models.

The remainder of the paper is organized as follows. Section II reviews recent studies and techniques. Section III describes the background of this research, including its advantages and current limitations. Section IV presents the detection methodology and the datasets used in this study. Section V explains the results of each experiment, and Section VI concludes the paper.

Ⅱ. Related Work

In recent years, the development of autonomous vehicles has advanced rapidly thanks to research results in lidar, wireless communication, cameras, embedded systems, sensors, navigation, and ad hoc network technologies. The concept of autonomous cars started with “phantom autos” in the 1920s, in which the car was controlled through a remote control device [25]. The Autonomous Land Vehicle, one of the earliest examples of a self-driving car, was created in the 1980s by experts at Carnegie Mellon University’s NavLab [26]. Around the same period, Mercedes introduced the Prometheus project, which achieved a key result in tracking lane markings [27]. In the 21st century, interest in autonomous cars has been fueled by affordable, high-performance technologies. Major companies globally have embraced them, but despite that broad acceptance, several challenges persist, such as sensor performance, which is prompting ongoing research and industry efforts [9]. This is especially true in environments with smoke, such as cigar smoke. When smoke is present inside the vehicle, sensors may not function optimally, posing risks to passengers’ safety [28]. Apart from the compromised performance of the sensors themselves, there is also concern about harmful substances such as CO emitted in smoke, which can endanger passengers’ health [29]. Smoke therefore affects not only the functionality of the vehicle but also the health of individuals, including through SHS and THS exposure [30]-[32].

Many studies have applied computer vision technology to smoking detection [33]. These applications include real-time vision-based detection [34], smoking cessation systems [35], detection based on face analysis [36], smoking behavior observation from video [34], smoke sensing on smartphones [37], and fire smoke recognition [38]. Some existing research has approached smoking detection through human action recognition. The smoke detection scheme proposed by Wu et al. [36] attempted to automatically detect illegal smoking behavior and transmit alarms within an indoor monitoring system. It used the YCbCr color space for skin recognition and analyzed the region from the mouth to the detected smoking site. Likewise, another human activity recognition framework used a deep Q-network with a distance-based reward rule and LSTM networks to classify motion data [39], while another approach translated channel state information (CSI) data into images and used a 2D-CNN classifier to classify human engagement [40]. The authors of [41] presented a smoking detection method using two 9-axis IMUs, focusing on the elbow-to-wrist position, with predictions made by a random forest model. Tang et al. [42] reported a two-layer ML method using wristwatch accelerometer data to detect smoking by integrating high-level smoking patterns with low-level time factors.

Considering the risks associated with smoking, researchers have explored not only cigar smoke but also fire smoke using various models. Rentao et al. [43] enhanced YOLOv3-tiny for indoor image-based smoke detection, reducing ambient disturbances compared with sensor-based strategies, and SVM has been applied to fire and cigar smoke detection [44]. YOLOv8 provides feed-forward DNNs for classifying and clustering images by similarity and for detecting objects within a scene, which can then trigger an alarm [45]. However, the appropriate threshold value changes frequently with context, which leads to a high false detection rate and poor applicability. Thus, despite its strength in real-time monitoring systems, YOLOv8 is inefficient for detecting cigar smoking and achieves low accuracy. It is therefore advisable to choose a strategy that addresses these limitations and improves accuracy in cigar smoking detection. TL is especially suitable for cigar smoking detection because it builds on extensive previous research and reduces the laborious task of generating a huge dataset.

In recent years, TL techniques have been successfully applied in image classification, pattern recognition, and speech recognition, mostly for medical problems [13]. Many CNN architectures have been developed to improve system performance using TL approaches, especially in object identification applications. Notable examples are AlexNet, VGG16, and VGG19. Shaha et al. [46] compared the performance of the pre-trained VGG19 network with AlexNet, VGG16, and a hybrid CNN-SVM technique, fine-tuning the network parameters with transfer learning for an image classification task. A few studies have looked closely at classifying illnesses, plants, crops, and smoke using a variety of input photos and videos and different fine-tuning methodologies [47]-[51]. Macalisang et al. [33] used YOLOv3 for detecting smokers, while Zhang et al. [52] developed SmokingNet, a CNN model based on GoogleNet, for smoker detection. Overall, TL techniques have frequently been employed to enhance predictions. Nevertheless, there has been a noticeable lack of efficient models for detecting cigarette smoke through TL, particularly in the context of autonomous cars, despite a significant opportunity in this area.

Overall, our study advances the existing work and fills the gaps mentioned above in TL-based detection systems. There is little research on passenger safety and well-being in driverless vehicles. Exposure to SHS and THS in cramped spaces can harm passengers and may result in respiratory problems or even death from CO inhalation. By leveraging TL techniques, we can overcome these obstacles and create a prediction model that addresses previous limitations such as confusion in object detection; for instance, objects like lipstick, walkie-talkies, pens, and chocolate, as well as complex hand and face movements, may be recognized inaccurately. Motivated by these issues, we propose several VGG19-based architectures that follow TL principles and aim to detect the presence of cigarette smoke in vehicles from images, adjusting the number of layers to increase prediction accuracy. The dataset was created by gathering images from diverse sources, with smoking images serving as input to the prediction model. After refining the model, we enhanced the original VGG19 architecture by integrating fully connected layers, activation functions, and FE processes. It effectively distinguishes between smoking and non-smoking images. We removed the Sigmoid classifier layer from the VGG19 model and replaced it with an SVM classifier, along with FE techniques. The resulting ‘6Conv SVM FE’ model achieved an accuracy of 96.05%, outperforming other models. Finally, we evaluated the final model on a new dataset containing similar smoking-related images and compared it with recent classification models, including a computational comparison.

Ⅲ. Background

Autonomous vehicles are at the forefront of modern transportation, driven by advancements in AI and sensor technologies. These vehicles are designed to improve road safety, optimize travel efficiency, and enhance passenger comfort. To achieve these goals, various sensors are integrated to monitor the external and internal environment of the vehicle. However, there are still challenges in addressing passenger health concerns, such as dyspnea or motion sickness, especially in confined spaces where air quality can deteriorate due to pollutants like cigar smoke. Cigar smoke, containing harmful substances like CO and other toxic chemicals, poses serious risks not only to passengers but also to the vehicle’s sensor ecosystem. It can interfere with existing sensors by introducing particulates and odors that reduce their effectiveness over time. These challenges highlight the need for innovative and reliable smoke detection systems within the car environment. With the rise of DL and TL, CNNs have emerged as a powerful solution for image-based detection tasks. Unlike traditional sensors, which rely on detecting particulate matter or chemical changes, CNN-based systems leverage visual data to detect patterns and features associated with specific events, such as the presence of cigar smoke. In this work, we propose a novel approach utilizing the pre-trained VGG19 model to detect cigar smoke in smart vehicle environments. VGG19, originally trained on the ImageNet dataset, is fine-tuned using transfer learning to classify instances of cigar smoke. The key advantage of this method lies in its adaptability to complex features such as the varying textures, colors, and shapes of smoke, which traditional sensors often struggle to detect. This enables rapid and accurate detection, providing a more robust solution compared to existing sensor technologies.

Traditional smoke sensors are often proposed for such applications; however, their suitability for vehicle environments is questionable because of various technical and practical limitations. Key drawbacks of smoke sensors, particularly in the dynamic and confined environment of a smart car, include sensitivity to environmental conditions, false positives from non-smoke sources, high maintenance needs, and potential health hazards arising from chemical degradation. These limitations are compounded by regulatory hurdles and challenges related to passenger acceptance, as elaborated in Table 1.

Given the limitations of traditional smoke sensors, this study proposes a novel approach using DL to visually detect cigar smoke in smart vehicle environments. By leveraging CNNs and TL, the system can identify patterns and features unique to cigar smoke with remarkable precision. Visual-based detection offers several advantages over traditional methods. CNNs excel at recognizing complex textures, colors, and shapes, enabling accurate smoke detection even under challenging lighting or environmental conditions. Additionally, unlike conventional sensors, CNN-based systems require no physical cleaning or recalibration, significantly reducing maintenance demands and long-term operational costs. These systems are also highly adaptable, capable of identifying low-emission smoke, such as from e-cigarettes, which conventional smoke sensors often miss. Furthermore, their discreet design enhances passenger comfort by eliminating the intrusive presence of visible sensors. This shift to a visual-based detection system not only addresses the inherent drawbacks of traditional smoke sensors but also paves the way for a more efficient, scalable, and passenger-friendly solution in autonomous vehicle technology.

Ⅳ. Cigar Smoke Detection Approach

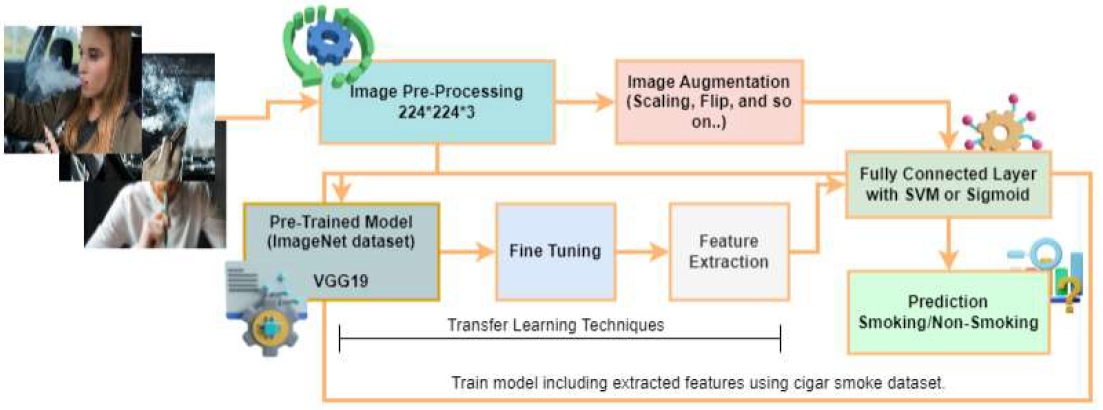

The suggested framework aims to detect cigar smoke in images captured within the autonomous driving environment. Subsequent actions are taken depending on the results. Fig. 2 outlines the proposed approach for this research. The VGG19 network and different TL techniques were employed to train, validate, and test on the image dataset. The strategy of this paper is described in the following subsections.

Comprehensive overview of the proposed approach for smoke detection in a smart car environment, highlighting the integration of preprocessing, feature extraction, fine-tuning, model training, and prediction

4-1 Tobacco Smoke Dataset

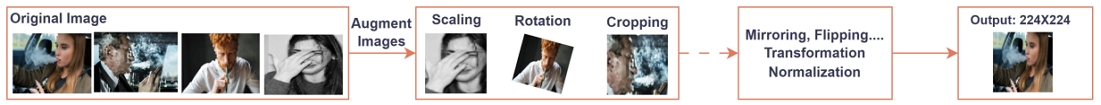

In this experiment, a vehicle environment-based image dataset of cigar smoking and non-smoking is used. It consists of 7,535 images, including confusing objects such as lipstick, walkie-talkies, pens, and chocolate, as well as complex actions such as holding a phone vertically, drinking from a glass or bottle, sneezing into a folded hand, and covering the face with a hand while using an inhaler in front of the mouth. The dataset was collected from various open-source web platforms [21]-[24]. A few dataset samples with labels are displayed in Fig. 3. We did not perform extensive image pre-processing, to avoid extra computational cost that would slow down the detection process. The only pre-processing applied is resizing the images to fit the VGG19 network. Image augmentation, on the other hand, was employed to provide extra training images and prevent overfitting. We applied scaling, rotation, mirroring, flipping, and cropping techniques. We scaled the image by 0.2, rotated it by 50°, and translated it horizontally or vertically by 0.2. We also used a shear-based transformation up to a factor of 0.2.
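The augmentation settings above can be read as bounds on a random affine transform. The sketch below samples one such transform in pure Python. It assumes, beyond what the text states, that each value is a symmetric bound, that shifts are fractions of the image size, and that mirroring is applied with probability 0.5; the name `sample_affine` is hypothetical.

```python
import math
import random


def sample_affine(rng, max_scale=0.2, max_rot_deg=50.0,
                  max_shift=0.2, max_shear=0.2, flip_prob=0.5):
    """Sample a 2x3 affine matrix [[a, b, tx], [c, d, ty]].

    The bounds mirror the values quoted in the text; treating them as
    symmetric ranges is an assumption of this sketch.
    """
    scale = 1.0 + rng.uniform(-max_scale, max_scale)
    theta = math.radians(rng.uniform(-max_rot_deg, max_rot_deg))
    shear = rng.uniform(-max_shear, max_shear)
    tx = rng.uniform(-max_shift, max_shift)
    ty = rng.uniform(-max_shift, max_shift)
    flip = -1.0 if rng.random() < flip_prob else 1.0  # horizontal mirror

    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Linear part = rotation @ shear @ diag(scale * flip, scale)
    a = scale * flip * cos_t
    b = scale * (cos_t * shear - sin_t)
    c = scale * flip * sin_t
    d = scale * (sin_t * shear + cos_t)
    return [[a, b, tx], [c, d, ty]]


def apply_affine(matrix, x, y):
    """Map a point (x, y) in normalized image coordinates."""
    (a, b, tx), (c, d, ty) = matrix
    return a * x + b * y + tx, c * x + d * y + ty


rng = random.Random(0)
matrix = sample_affine(rng)
corner = apply_affine(matrix, 0.5, 0.5)
```

In a PyTorch pipeline the same ranges would normally be passed to a library augmentation routine instead of being composed by hand; the explicit matrix is shown only to make the geometry visible.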

4-2 Convolutional Neural Network

Following [59], we employed a supervised learning approach in which the training dataset consists of images and their corresponding labels. The model learns patterns from these images using a set of parameters, which we updated iteratively through an optimization process to improve classification accuracy. We used a loss function to measure the difference between the model's predictions and the actual labels. This function guided the learning process by evaluating how well the predicted category aligned with the true category of each image.
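The paragraph above does not name the loss function. As an illustration only, the sketch below uses binary cross-entropy, a common choice for a two-class (smoking / non-smoking) model with a sigmoid output; this choice and the function name `binary_cross_entropy` are assumptions, not details from the source.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


# Hypothetical predictions for two images: label 1 = smoking, 0 = non-smoking.
good = binary_cross_entropy([0.9, 0.1], [1, 0])  # confident and correct
bad = binary_cross_entropy([0.1, 0.9], [1, 0])   # confident and wrong
```

The loss is small when the predicted probability agrees with the label and grows quickly when the model is confidently wrong, which is the signal the optimizer uses to update the parameters.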

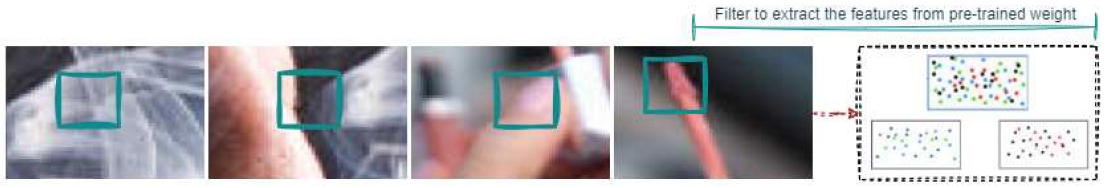

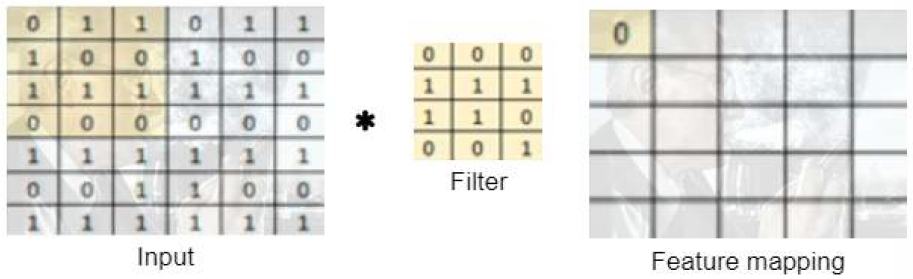

In our study, we incorporated convolutional layers as the primary feature extraction mechanism. These layers scan the input image with small filters known as kernels. Each filter moves across the image in a structured manner, detecting essential patterns such as edges, textures, and shapes. The result of this operation is a feature map, which captures the characteristics needed for classification. Fig. 4 illustrates this process, in which an image undergoes convolution operations to generate a feature map that highlights important regions.

Process of Convolution illustrating the three key steps: input representation, filtering through convolutional kernels, and generating the corresponding feature map to capture essential patterns for further processing
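The sliding-filter operation described above can be sketched in a few lines of pure Python. This is a minimal illustration with a hand-picked edge kernel and no padding, not the implementation used in the experiments; like most DL libraries it computes cross-correlation, that is, the kernel is not flipped.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists) with stride 1 and no padding."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    feature_map = []
    for u in range(ih - kh + 1):
        row = []
        for v in range(iw - kw + 1):
            acc = 0.0
            for m in range(kh):
                for n in range(kw):
                    acc += kernel[m][n] * image[u + m][v + n]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Toy 4x4 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1]]  # responds where intensity increases left to right
feature_map = conv2d_valid(image, edge_kernel)
```

The resulting feature map is nonzero only at the column where the edge sits, which is the sense in which a filter "detects" a pattern. In a trained CNN the kernel values are learned rather than chosen by hand.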

To enhance the robustness of our model, we applied multiple filters in the convolutional layers to extract different aspects of an image. Each filter learned distinct characteristics, enabling the model to identify complex patterns when they are combined. The effectiveness of our CNN framework depended largely on the learned filter weights, which we adjusted iteratively using backpropagation. To further improve computational efficiency and feature selection, we added pooling layers after the convolutional layers. We specifically employed max pooling, which selects the most prominent feature within a region, reducing the size of the feature map while retaining key information. This helped our model become more invariant to small transformations in the image, such as scaling and rotation. Fig. 5 demonstrates how we applied convolutional filters to extract features from input images. The transformation of pixel information into structured feature maps was essential for our classification process. Following feature extraction, we passed the extracted features through fully connected layers, which interpreted and classified the learned representations. These layers mapped the feature maps into a prediction space, ultimately determining the category to which each input image belonged. The final classification decision was based on probability scores assigned to each possible class. We relied on iterative updates to the filter weights to ensure that they captured the distinguishing characteristics of each class. To prevent issues such as vanishing gradients, we adopted a suitable weight initialization strategy: instead of initializing all weights to zero, we sampled them from a predefined distribution to facilitate effective learning. This contributed to the stability and accuracy of our CNN-based classification model.
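Max pooling as described above keeps only the largest value in each window. The following is a minimal pure-Python sketch; the 2×2 window with stride 2 matches the pooling size quoted for the VGG19-based models, while the input values are made up for illustration.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Return the max of each `size` x `size` window, moving by `stride`."""
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, height - size + 1, stride):
        row = []
        for j in range(0, width - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


fmap = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
pooled = max_pool2d(fmap)  # a 4x4 map becomes 2x2
```

Each spatial dimension is halved, so later layers process a quarter as many activations, and a strong response that shifts by one pixel inside a window still produces the same pooled value.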

4-3 Transfer Learning

As stated by [60], TL for image classification can be described with the following notation: $T$ and $S$ denote the target and the source; $m$ and $n$ are the target and source dataset sizes, respectively, with $n \gg m$; $\phi(\cdot)$ is the predictive function; $F$ is the attribute space; $D$ is a domain; $P(X)$ is a probability distribution over the learning samples $X = \{x_1, x_2, \ldots, x_n\} \in F$; $\Upsilon$ is the label space; and a task is $K = \{\Upsilon, \phi(\cdot)\}$. An image domain, $D$, has two components, an attribute space $F$ and a probability distribution $P(X)$:

$$D = \{F, P(X)\} \tag{1}$$

For a source domain, $S$, the dataset is $X_S = \{x_{S1}, x_{S2}, \ldots, x_{Sn}\} \in F_S$. The source domain data can be denoted by:

$$D_S = \{(x_{S1}, y_{S1}), (x_{S2}, y_{S2}), \ldots, (x_{Sn}, y_{Sn})\} \tag{2}$$

where $x_{Si} \in F_S$ is a data example and $y_{Si} \in \Upsilon_S$ is the corresponding class label. The target domain, $T$, can be denoted by:

$$D_T = \{(x_{T1}, y_{T1}), (x_{T2}, y_{T2}), \ldots, (x_{Tm}, y_{Tm})\} \tag{3}$$

where $x_{Ti} \in F_T$ is a data instance and $y_{Ti} \in \Upsilon_T$ is the corresponding class label. A task, $K$, consists of two elements, a label space $\Upsilon$ and a predictive function $\phi(\cdot)$:

$$K = \{\Upsilon, \phi(\cdot)\} \tag{4}$$

As shown in Fig. 6, $D_S$ represents datasets such as ImageNet or the cigarette smoke dataset. $K_S$ is defined as $K_S = \{\Upsilon_S, \phi_S(\cdot)\}$, where $\Upsilon_S$ is the source label space and $\phi_S(\cdot)$ is the source predictive function that maps a new image feature $x_i$ to its label $y_i$. $D_T$ represents the new datasets in this research. $K_T$ is defined as $K_T = \{\Upsilon_T, \phi_T(\cdot)\}$, where $\Upsilon_T$ is the target label space and $\phi_T(\cdot)$ is the target predictive function. The goal is to enhance $\phi_T(\cdot)$ for $D_T$ using knowledge from $D_S$ and its predictive function $\phi_S(\cdot)$. The TL scheme is presented in Algorithm 1.
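In practice, reusing knowledge from the source task usually means keeping the pre-trained feature layers fixed and updating only the new classification layers on the target data. The toy sketch below shows that mechanism in pure Python; the `Layer` class, the layer names, and the gradient values are all hypothetical and stand in for a real network.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True


def freeze_all_but(layers, head_names):
    """Mark only the layers named in `head_names` as trainable."""
    for layer in layers:
        layer.trainable = layer.name in head_names
    return layers


def sgd_step(layers, grads, lr):
    """Plain gradient step that skips frozen layers."""
    for layer in layers:
        if not layer.trainable:
            continue
        layer.weights = [w - lr * g
                         for w, g in zip(layer.weights, grads[layer.name])]


# Hypothetical three-layer model: two source-trained layers plus a new head.
model = [
    Layer("conv1", [0.5, -0.2]),
    Layer("conv2", [0.1, 0.3]),
    Layer("classifier", [0.0, 0.0]),
]
freeze_all_but(model, head_names={"classifier"})
grads = {"conv1": [1.0, 1.0], "conv2": [1.0, 1.0], "classifier": [1.0, -1.0]}
sgd_step(model, grads, lr=0.1)
```

After the step, the frozen layers still hold their source-task weights and only the classifier has moved. In PyTorch the same effect is typically obtained by setting `requires_grad = False` on the parameters to be frozen.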

4-4 VGG19 Architectures

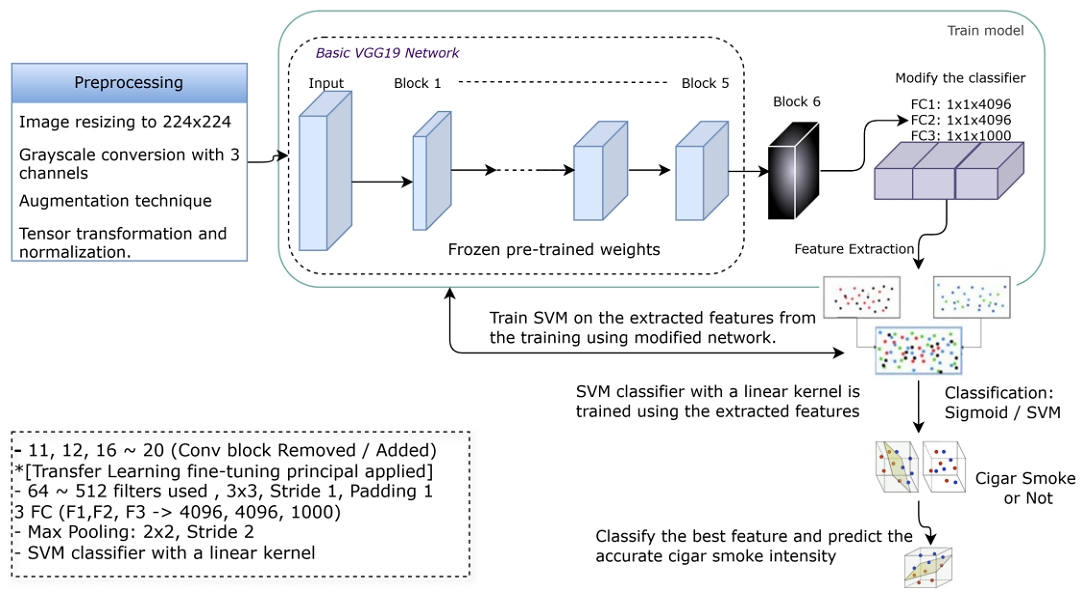

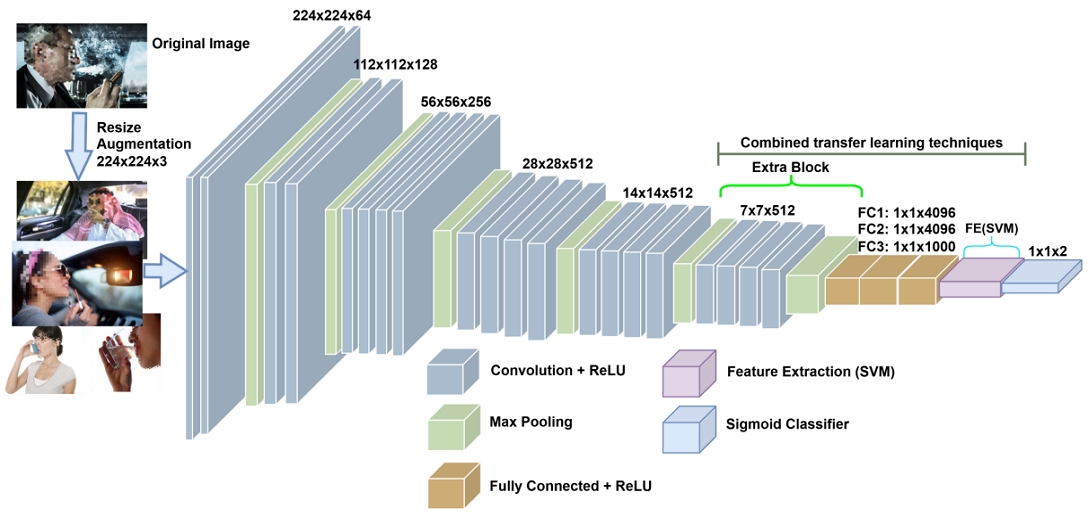

Verifying instances of cigar smoke inside driverless cars is crucial for passengers, especially those with dyspnea or motion sickness, to ensure a comfortable travel experience. This research solves a binary classification problem: cigar smoking or not. It also aims to classify smoke with high accuracy using FE techniques and to investigate the adoption of new modalities using DL models in smoke image processing. To this end, three new architectures with 3, 4, and 6 layers are proposed by modifying the CNN-based VGG19 architecture. In addition, the SVM classifier and FE are used for each architecture. The CNN architecture is fine-tuned from the original VGG19 architecture [61], with an input image size of 224×224. The three new models differ in the number of convolution layers. To find out how these adjustments affect classification outcomes, each model was tested using both the SVM classifier and the sigmoid classifier, as in the original VGG19. The model with six convolution layers gave the most promising accuracy. The architecture with 20 convolution layers in VGG19 consists of 2 layers with 64, 128 filters, 256, 512 filters in 4 layers, and an additional layer with 512 filters. Each convolution layer uses 3×3 filters, a padding of 1, and the ReLU activation function. The architectures with 3 or 4 convolution layers use 3×3 filters, including 3 layers with 64 filters, 2 layers with 128 filters, and 4 layers with 256 filters. Beyond 4 layers, the modified architecture includes an extra convolution layer with 512 filters, making 21 layers in total. FE processes are also applied to map feature similarity, reduce the computational cost, retain valuable features, and enhance classification accuracy; the FE process is shown in Fig. 7. Max-pooling layers with a 2×2 filter size follow each set of convolution and ReLU layers in both models. The ReLU activation function improves learning speed and classification accuracy by addressing the vanishing gradient problem. The most effective architecture was found to be 6Conv_VGG19_FE, with 6 convolution layers. The layers and parameter values of the 6Conv_VGG19_FE model are given in Fig. 8.
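The layer settings quoted above (3×3 filters with padding 1, followed by 2×2 max pooling) determine how the 224×224 input shrinks through the network. The sketch below traces the spatial size through the block layout of the standard VGG19 (2, 2, 4, 4, and 4 convolution layers per block); it is an illustration of the arithmetic and does not reproduce the modified models of this paper.

```python
def conv_out(size, kernel=3, padding=1, stride=1):
    """Spatial size after a convolution layer."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial size after a max-pooling layer."""
    return (size - kernel) // stride + 1


def trace_sizes(input_size, convs_per_block):
    """Feature-map size after each block of convolutions plus one pooling layer."""
    sizes = []
    size = input_size
    for n_convs in convs_per_block:
        for _ in range(n_convs):
            size = conv_out(size)  # 3x3 with padding 1 keeps the size
        size = pool_out(size)      # 2x2 pooling halves it
        sizes.append(size)
    return sizes


standard_vgg19_blocks = [2, 2, 4, 4, 4]  # 16 convolution layers in total
sizes = trace_sizes(224, standard_vgg19_blocks)
```

Because a 3×3 convolution with padding 1 preserves the spatial size, only the pooling layers reduce it, so adding or removing convolution layers inside a block changes the depth of the model but not the size of the feature map passed to the classifier.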

Fig. 7. Visualization of the proposed feature extraction approach, showing the convolutional layers, frozen layers, and feature extraction mechanisms for TL

Fig. 8. The most effective proposed fine-tuned method, which combines convolutional layers with ReLU activation, transfer learning techniques, an additional six-layer convolutional block for enhanced feature extraction, max pooling for dimensionality reduction, and an SVM for classification, achieving optimal performance in feature learning and prediction

Ⅴ. Results

In this study, the performance of the six proposed models was evaluated on the collected cigar smoking image dataset, and the best model was also examined on a similar dataset from Mendeley [24]. All methods were implemented in PyTorch on the PyCharm platform. Training, testing, and evaluation were run on a computer with an Intel(R) Core(TM) i9-10900 CPU at 2.80 GHz, 64 GB of RAM, and two NVIDIA GeForce RTX 3070 GPUs. All experiments used a batch size of 32, the Stochastic Gradient Descent with Momentum (SGDM) optimizer, a decay (drop factor) of 0.5, a drop period of 5, and a learning rate of 10e-4. Each model was run for 30 to 150 epochs, with 70% of the image dataset allocated for training, 15% for validation, and 15% for testing. The training data did not overlap with the validation and testing data.
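The training settings above can be sketched in a few lines of plain Python. The sketch assumes that the drop factor and drop period describe a step schedule in which the learning rate is multiplied by 0.5 every 5 epochs (epochs counted from 0), and that the 70/15/15 split is made by shuffling image indices; the function names, the seed, and the literal reading of 10e-4 as 0.001 are assumptions for illustration.

```python
import random


def step_decay_lr(epoch, base_lr=10e-4, drop_factor=0.5, drop_period=5):
    """Learning rate at a given epoch under a piecewise-constant step schedule."""
    return base_lr * drop_factor ** (epoch // drop_period)


def split_indices(n_images, train=0.70, val=0.15, seed=0):
    """Shuffle image indices and split them into disjoint train/val/test lists."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    n_train = round(n_images * train)
    n_val = round(n_images * val)
    train_idx = indices[:n_train]
    val_idx = indices[n_train:n_train + n_val]
    test_idx = indices[n_train + n_val:]  # the remainder goes to testing
    return train_idx, val_idx, test_idx


if __name__ == "__main__":
    for epoch in (0, 5, 10, 15):
        print(epoch, step_decay_lr(epoch))
    train_idx, val_idx, test_idx = split_indices(1000)
    print(len(train_idx), len(val_idx), len(test_idx))
```

Because the three index lists are slices of one shuffled list, no image can appear in more than one subset, which matches the requirement that the training data differ from the validation and testing data.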

5-1 Performance Metrics

To assess the six models proposed in this analysis, we generated a confusion matrix for each model. Performance is measured using the evaluation metrics Accuracy (ACC), Specificity (SPE), Sensitivity (SEN), Precision (PRE), and F1 Score (F1SCO) [62]. In these metrics, TP, FP, FN, and TN stand for true positive (correctly confirmed pattern), false positive (falsely confirmed exemplar), false negative (falsely rejected specimen), and true negative (correctly rejected pattern), respectively.

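$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$$\mathrm{SPE} = \frac{TN}{TN + FP} \tag{6}$$

$$\mathrm{SEN} = \frac{TP}{TP + FN} \tag{7}$$

$$\mathrm{PRE} = \frac{TP}{TP + FP} \tag{8}$$

$$\mathrm{F1SCO} = \frac{2 \cdot \mathrm{PRE} \cdot \mathrm{SEN}}{\mathrm{PRE} + \mathrm{SEN}} \tag{9}$$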
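As an illustration of how ACC, SPE, SEN, PRE, and F1SCO follow from the four confusion-matrix counts, the sketch below computes them with their standard definitions. The function name and the example counts are hypothetical and are not taken from the reported experiments.

```python
def safe_div(numerator, denominator):
    """Divide, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, fn, tn):
    """Compute ACC, SPE, SEN, PRE, and F1SCO from confusion-matrix counts."""
    acc = safe_div(tp + tn, tp + fp + fn + tn)
    spe = safe_div(tn, tn + fp)   # true negative rate
    sen = safe_div(tp, tp + fn)   # true positive rate (recall)
    pre = safe_div(tp, tp + fp)
    f1 = safe_div(2 * pre * sen, pre + sen)
    return {"ACC": acc, "SPE": spe, "SEN": sen, "PRE": pre, "F1SCO": f1}


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    for name, value in classification_metrics(tp=90, fp=10, fn=5, tn=95).items():
        print(f"{name}: {value:.4f}")
```

Reporting SPE alongside SEN is useful for a two-class problem such as this one, since a model can reach a high accuracy while still rejecting few true non-smoking images.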

5-2 Outcomes with Cigar Smoke Dataset

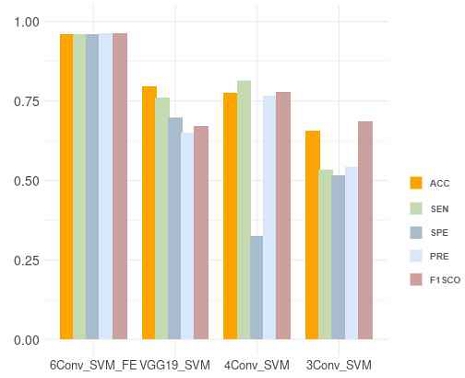

First, the proposed techniques were evaluated on the cigar smoke dataset with the predetermined experimental parameters. The outcomes are shown in Table II and Table III. According to Table II, the model with 3 convolution layers (3Conv_VGG19) performed poorly with both the Sigmoid and SVM classifiers. The best results, with ACC, SEN, SPE, PRE, and F1SCO all above 96%, were obtained when our proposed model with 6 convolution layers was used with the SVM classifier incorporating the FE process (6Conv_VGG19_FE). When combined with the Sigmoid classifier, the model with the same convolution layers also achieved performance above 90%, as seen in Table III. The experiments show that increasing the number of convolutional layers improved the success of the 6Conv_VGG19 and 6Conv_VGG19_FE models, and that incorporating FE techniques had a substantial additional impact. Similar experiments were also conducted for architectures with 7 or more layers (up to 12). Since these did not produce better results, they and their details are omitted from this work. 6Conv_VGG19_FE successfully captured the properties of cigar smoke images.

It proved to be a highly efficient model on our dataset owing to its excellent classification rates, which stem from an effective feature extraction scheme based on transfer learning and fine-tuning principles. The model excels at identifying subtle features and patterns unique to cigar smoke particles, such as texture, density, and dispersion patterns. By focusing on these specific characteristics, 6Conv_VGG19_FE enhances the accuracy of smoke detection and classification. A visual comparison of the SVM classifier metric values in Table II is shown in Fig. 9.

5-3 Model Performance Evaluation with New Dataset

The 6Conv_VGG19_FE model was tested in an identical experimental setup on the smoker vs. non-smoker dataset, which is openly available on Mendeley [24]. This dataset contains pictures of people who smoke cigars and people who do not, with different resolutions and actions. We used our best model to assess this new dataset, and the results are listed in Table IV in terms of ACC, SEN, SPE, PRE, and F1SCO. As seen in Table IV, the metric values for the 6Conv_VGG19_FE model on this dataset are lower than on our original dataset. The performance was observed to decrease because of the low number of images. Nevertheless, 6Conv_VGG19_FE, the most effective of the proposed strategies, still achieved a success rate of over 93%.

5-4 Comparison with Other SOTA Models

Our secondary dataset, which includes smoke and non-smoke pictures, has never been used in any other study, so a fair comparison with published results is not possible. Instead, we compared several current classification methods on our dataset. We analyzed (see Table V) multiple DL classification architectures, including RegNet, MobileNetV3, EfficientNet, ResNeSt, MLP-Mixer, and our best model 6Conv_VGG19_FE, using accuracy, sensitivity, specificity, precision, and F1-Score. The 6Conv_VGG19_FE method was the best performer, with the highest accuracy (96.05%), sensitivity (0.9607), specificity (0.9599), precision (0.9644), and F1-Score (0.9622). MobileNetV3 also performed strongly, with an accuracy of 94.62% and high precision (0.9625). RegNet and EfficientNet showed respectable results, although EfficientNet’s lower specificity (0.6768) could be a concern. ResNeSt presented balanced but lower overall metrics, and MLP-Mixer lagged significantly behind. Thus, the superior results of 6Conv_VGG19_FE across all metrics make it the most reliable choice for our prediction system, and this consistency further highlights the robustness of the proposed approach.

5-5 Computational Complexity

We evaluated the models in terms of classification performance, computational complexity (in GFLOPs), and inference speed on the cigar-smoking dataset. The results are summarized in Table VI. Our best-performing model was the modified VGG19 model, 6Conv_VGG19_FE. With an inference speed of 2.3 milliseconds per image it ranked first, and its compute requirement of 17.05 GFLOPs was the lowest of the six models. It is therefore well suited to efficient prediction. MobileNetV3 required 58.6 GFLOPs with 4.8 milliseconds per inference on the device, and RegNet required 20.3 GFLOPs with 4.6 milliseconds. EfficientNet, ResNeSt, and MLP-Mixer were also slower than the proposed model (6.7, 2.5, and 3.2 milliseconds, respectively) and required more computation (40, 53.9, and 69 GFLOPs, respectively).
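The ranking described above can be checked with a short script that sorts the six models by the inference times and GFLOPs quoted in this section. The data structure and function name are hypothetical; the numbers are those given in the text.

```python
# (model, inference time in ms per image, GFLOPs), as quoted in the text
RESULTS = [
    ("6Conv_VGG19_FE", 2.3, 17.05),
    ("MobileNetV3", 4.8, 58.6),
    ("RegNet", 4.6, 20.3),
    ("EfficientNet", 6.7, 40.0),
    ("ResNeSt", 2.5, 53.9),
    ("MLP-Mixer", 3.2, 69.0),
]

LATENCY_MS = 1  # column index of the inference time
GFLOPS = 2      # column index of the compute requirement


def rank_by(results, column):
    """Return model names sorted in ascending order of the given column."""
    return [row[0] for row in sorted(results, key=lambda row: row[column])]


if __name__ == "__main__":
    print("Fastest first:", rank_by(RESULTS, LATENCY_MS))
    print("Lowest GFLOPs first:", rank_by(RESULTS, GFLOPS))
```

With these figures, 6Conv_VGG19_FE comes first in both orderings, while the remaining models rank differently on speed and on compute, which suggests that GFLOPs alone do not determine the measured latency.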

Ⅵ. Conclusion

In this study, we presented an artificial intelligence (AI) monitoring model intended to improve the management of cigar smoke emissions in smart cars. The method aims to improve the travel experience and sensor performance in these vehicles, focusing specifically on passengers whose respiratory issues and motion sickness are exacerbated by carbon monoxide, especially in enclosed environments. This research also provides a dataset for cigar smoker detection in car environments that includes confusing objects such as lipstick, walkie-talkies, pens, and chocolate, as well as complex actions such as holding a phone vertically, drinking from a glass or bottle, and sneezing with a folded hand, to support future research on AI-based cigar smoker detection. The dataset consists of two classes: cigar smoking and not smoking. To classify instances of cigar smoke in car surroundings, we proposed a TL-based technique using the pre-trained VGG19 model with an SVM-based FE approach, designed with the limitations of previous research in mind. The effectiveness of the proposed method for predicting cigar smoke and non-smoke was evaluated and compared with other recent classification architectures using different metrics. The proposed TL-based 6Conv_VGG19_FE model achieved an accuracy of 96.05%, with precision and recall rates of 96.44% and 96.07%, respectively, in predicting smoking and non-smoking behavior from images in a challenging and diverse dataset. In comparison, RegNet, MobileNetV3, and EfficientNet achieved lower accuracies of 93.67%, 94.62%, and 93.15%, respectively, while ResNeSt and MLP-Mixer performed lower still at 91.61% and 79.89%. These results confirm the superior performance of the proposed method.
We evaluated the proposed method on different image datasets and obtained promising results in all cases. In future work, we intend to incorporate car accident likelihood prediction based on real-time camera data. Such a system would use map indicators or an application to alert people and autonomous cars to possible threats.

Acknowledgments

This work was supported by the Innovative Human Resource Development for Local Intellectualization program through the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (IITP-2024-RS-2022-00156287, 50%). This work was also supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) under the Artificial Intelligence Convergence Innovation Human Resources Development (IITP-2023-RS-2023-00256629, 50%) grant funded by the Korea government (MSIT).

References

- S. T. Lugg, A. Scott, D. Parekh, B. Naidu, and D. R. Thickett, “Cigarette Smoke Exposure and Alveolar Macrophages: Mechanisms for Lung Disease,” Thorax, Vol. 77, No. 1, pp. 94-101, 2022. [https://doi.org/10.1136/thoraxjnl-2020-216296]

- T. W. Krug and T. L. Ketchum, “Confined Spaces: Understanding the Changes to ANSI/ASSP Z117.1,” Professional Safety, Vol. 67, No. 7, 2022.

- J. Gilliam and E. Hall, “Reference and Equivalent Methods Used to Measure National Ambient Air Quality Standards (NAAQS) Criteria Air Pollutants-Volume I,” Washington, DC: U.S. Environmental Protection Agency, 2016.

- B. Freeman, S. Chapman, and P. Storey, “Banning Smoking in Cars Carrying Children: An Analytical History of a Public Health Advocacy Campaign,” Australian and New Zealand Journal of Public Health, Vol. 32, No. 1, pp. 60-65, February 2008. [https://doi.org/10.1111/j.1753-6405.2008.00167.x]

- A. Takeuchi, A. Vesely, J. Rucker, L. Z. Sommer, J. Tesler, E. Lavine, ... and J. A. Fisher, “A Simple “New” Method to Accelerate Clearance of Carbon Monoxide,” American Journal of Respiratory and Critical Care Medicine, Vol. 161, No. 6, pp. 1816-1819, 2000. [https://doi.org/10.1164/ajrccm.161.6.9907038]

- P. J. Mikulka, R. D. O’Donnell, P. E. Heinig, and T. James, “The Effect of Carbon Monoxide on Human Performance,” AMRL-TR, Vol. 69, pp. 17-40, 1969.

- J. A. Fisher, J. Rucker, L. Z. Sommer, A. Vesely, E. Lavine, Y. Greenwald, ... and S. Iscoe, “Isocapnic Hyperpnea Accelerates Carbon Monoxide Elimination,” American Journal of Respiratory and Critical Care Medicine, Vol. 159, No. 4, pp. 1289-1292, 1999. [https://doi.org/10.1164/ajrccm.159.4.9804040]

- A. Ernst and J. D. Zibrak, “Carbon Monoxide Poisoning,” New England Journal of Medicine, Vol. 339, No. 22, pp. 1603-1608, 1998. [https://doi.org/10.1056/NEJM199811263392206]

- P. R. Kshirsagar, H. Manoharan, F. Al-Turjman, and K. K. Maheshwari, “Design and Testing of Automated Smoke Monitoring Sensors in Vehicles,” IEEE Sensors Journal, Vol. 22, No. 18, pp. 17497-17504, September 2020. [https://doi.org/10.1109/JSEN.2020.3044604]

- H. A. Ignatious, H.-E. Sayed, and M. Khan, “An Overview of Sensors in Autonomous Vehicles,” Procedia Computer Science, Vol. 198, pp. 736-741, 2022. [https://doi.org/10.1016/j.procs.2021.12.315]

- V. U. Ekpu and A. K. Brown, “The Economic Impact of Smoking and of Reducing Smoking Prevalence: Review of Evidence,” Tobacco Use Insights, Vol. 8, TUI–S15628, 2015. [https://doi.org/10.4137/TUI.S15628]

- World Health Organization. Tobacco Fact Sheet [Internet]. Available: https://www.who.int/news-room/fact-sheets/detail/tobacco

- H. Eldem, E. Ülker, and O. Y. Işıklı, “Alexnet Architecture Variations with Transfer Learning for Classification of Wound Images,” Engineering Science and Technology, an International Journal, Vol. 45, 101490, September 2023. [https://doi.org/10.1016/j.jestch.2023.101490]

- K. Simonyan and A. Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition,” arXiv:1409.1556, 2014. [https://doi.org/10.48550/arXiv.1409.1556]

- S. Mohammadian, A. Karsaz, and Y. M. Roshan, “Comparative Study of Fine-Tuning of Pre-Trained Convolutional Neural Networks for Diabetic Retinopathy Screening,” in Proceedings of 2017 24th National and 2nd International Iranian Conference on Biomedical Engineering (ICBME), 2017. [https://doi.org/10.1109/ICBME.2017.8430269]

- P. Prentašić and S. Lončarić, “Detection of Exudates in Fundus Photographs Using Convolutional Neural Networks,” in Proceedings of 2015 9th International Symposium on Image and Signal Processing and Analysis (ISPA), 2015. [https://doi.org/10.1109/ISPA.2015.7306056]

- N. M. Khan, N. Abraham, and M. Hon, “Transfer Learning with Intelligent Training Data Selection for Prediction of Alzheimer’s Disease,” IEEE Access, Vol. 7, pp. 72726-72735, 2019. [https://doi.org/10.1109/ACCESS.2019.2920448]

- A. Farooq, S. Anwar, M. Awais, and S. Rehman, “A Deep CNN Based Multi-Class Classification of Alzheimer’s Disease Using MRI,” in Proceedings of 2017 IEEE International Conference on Imaging Systems and Techniques (IST), 2017. [https://doi.org/10.1109/IST.2017.8261460]

- K. M. Hosny, M. A. Kassem, and M. M. Foaud, “Classification of Skin Lesions Using Transfer Learning and Augmentation with Alex-Net,” PloS One, Vol. 14, No. 5, e0217293, 2019. [https://doi.org/10.1371/journal.pone.0217293]

- B. Harangi, “Skin Lesion Classification with Ensembles of Deep Convolutional Neural Networks,” Journal of Biomedical Informatics, Vol. 86, pp. 25-32, October 2018. [https://doi.org/10.1016/j.jbi.2018.08.006]

- Google. Smoking Images: Dataset [Internet]. Available: https://tinyurl.com/59zcybpd

- Unsplash. Cigar Smoking Images: Dataset [Internet]. Available: https://unsplash.com/s/photos/cigar-smoke-in-car

- iStockphoto. Cigar Smoking Images: Dataset [Internet]. Available: https://www.istockphoto.com/kr/search/search-by-asset?assetid=1367537840&assettype=image

- A. Khan, “Dataset Containing Smoking and Not-Smoking Images (Smoker vs Non-Smoker),” Mendeley Data, Vol. 1, 2020.

- The Atlantic. Our Grandmother’s Driverless Car [Internet]. Available: https://www.theatlantic.com/technology/archive/2016/06/beep-beep/489029/

- T. Kanade, C. Thorpe, and W. Whittaker, “Autonomous Land Vehicle Project at CMU,” in Proceedings of the 1986 ACM Fourteenth Annual Conference on Computer Science, pp. 71-80, 1986. [https://doi.org/10.1145/324634.325197]

- J. Schmidhuber. Robot Car History [Internet]. Available: http://people.idsia.ch/~juergen/robotcars.html

- B. Hahn, “Research and Conceptual Design of Sensor Fusion for Object Detection in Dense Smoke Environments,” Applied Sciences, Vol. 12, No. 22, 11325, 2022. [https://doi.org/10.3390/app122211325]

- A. F. Brown, S. J. Dunjey, and C. T. Myers, “Management of the Moribund Carbon Monoxide Victim,” Emergency Medicine Journal, Vol. 10, No. 4, pp. 383-384, 1993. [https://doi.org/10.1136/emj.10.4.383]

- D. A. Apatzidou, “The Role of Cigarette Smoking in Periodontal Disease and Treatment Outcomes of Dental Implant Therapy,” Periodontology 2000, Vol. 90, No. 1, pp. 45-61, October 2022. [https://doi.org/10.1111/prd.12449]

- N. Salehi, P. Janjani, H. Tadbiri, M. Rozbahani, and M. Jalilian, “Effect of Cigarette Smoking on Coronary Arteries and Pattern and Severity of Coronary Artery Disease: A Review,” Journal of International Medical Research, Vol. 49, No. 12, 03000605211059893, 2021. [https://doi.org/10.1177/03000605211059893]

- D. Marti-Aguado, A. Clemente-Sanchez, and R. Bataller, “Cigarette Smoking and Liver Diseases,” Journal of Hepatology, Vol. 77, No. 1, pp. 191-205, July 2022. [https://doi.org/10.1016/j.jhep.2022.01.016]

- J. R. Macalisang, N. E. Merencilla, M. A. D. Ligayo, M. P. Melegrito, and R. R. Tejada, “Eye-Smoker: A Machine Vision-Based Nose Inference System of Cigarette Smoking Detection Using Convolutional Neural Network,” in Proceedings of 2020 IEEE 7th International Conference on Engineering Technologies and Applied Sciences (ICETAS), 2020. [https://doi.org/10.1109/ICETAS51660.2020.9484241]

- R. Chen, G. Zeng, K. Wang, L. Luo, and Z. Cai, “A Real Time Vision-Based Smoking Detection Framework on Edge,” Journal on Internet of Things, Vol. 2, No. 2, pp. 55-64, 2020. [https://doi.org/10.32604/jiot.2020.09814]

- G. Maguire, H. Chen, R. Schnall, W. Xu, and M.-C. Huang, “Smoking Cessation System for Preemptive Smoking Detection,” IEEE Internet of Things Journal, Vol. 9, No. 5, pp. 3204-3214, March 2022. [https://doi.org/10.1109/JIOT.2021.3097728]

- W.-C. Wu and C.-Y. Chen, “Detection System of Smoking Behavior Based on Face Analysis,” in Proceedings of 2011 Fifth International Conference on Genetic and Evolutionary Computing, 2011. [https://doi.org/10.1109/ICGEC.2011.51]

- Y. Xie, F. Li, Y. Wu, S. Yang, and Y. Wang, “Hearsmoking: Smoking Detection in Driving Environment via Acoustic Sensing on Smartphones,” IEEE Transactions on Mobile Computing, Vol. 21, No. 8, pp. 2847-2860, August 2022. [https://doi.org/10.1109/TMC.2020.3048785]

- R. K. Mohammed, “A Real-Time Forest Fire and Smoke Detection System Using Deep Learning,” International Journal of Nonlinear Analysis and Applications, Vol. 13, No. 1, pp. 2053-2063, March 2022. [https://doi.org/10.22075/ijnaa.2022.5899]

- X. Zhou, W. Liang, K. I.-K. Wang, H. Wang, L. T. Yang, and Q. Jin, “Deep-Learning-Enhanced Human Activity Recognition for Internet of Healthcare Things,” IEEE Internet of Things Journal, Vol. 7, No. 7, pp. 6429-6438, July 2020. [https://doi.org/10.1109/JIOT.2020.2985082]

- P. F. Moshiri, R. Shahbazian, M. Nabati, and S. A. Ghorashi, “A CSI-Based Human Activity Recognition Using Deep Learning,” Sensors, Vol. 21, No. 21, 7225, 2021. [https://doi.org/10.3390/s21217225]

- A. Parate, M.-C. Chiu, C. Chadowitz, D. Ganesan, and E. Kalogerakis, “RisQ: Recognizing Smoking Gestures with Inertial Sensors on a Wristband,” in Proceedings of the 12th Annual International Conference on Mobile Systems, Applications, and Services, pp. 149-161, 2014. [https://doi.org/10.1145/2594368.2594379]

- Q. Tang, Automated Detection of Puffing and Smoking with Wrist Accelerometers, Master’s Thesis, Northeastern University, Boston, MA, 2014. [https://doi.org/10.4108/icst.pervasivehealth.2014.254978]

- Z. Rentao, W. Mengyi, Z. Zilong, L. Ping, and Z. Qingyu, “Indoor Smoking Behavior Detection Based on YOLOv3-tiny,” in Proceedings of 2019 Chinese Automation Congress (CAC), 2019. [https://doi.org/10.1109/CAC48633.2019.8996951]

- K. Muhammad, J. Ahmad, Z. Lv, P. Bellavista, P. Yang, and S. W. Baik, “Efficient Deep CNN-Based Fire Detection and Localization in Video Surveillance Applications,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, Vol. 49, No. 7, pp. 1419-1434, July 2019. [https://doi.org/10.1109/TSMC.2018.2830099]

- A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet Classification with Deep Convolutional Neural Networks,” Communications of the ACM, Vol. 60, No. 6, pp. 84-90, June 2017. [https://doi.org/10.1145/3065386]

- M. Shaha and M. Pawar, “Transfer Learning for Image Classification,” in Proceedings of 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), 2018. [https://doi.org/10.1109/ICECA.2018.8474802]

- M. Bansal, M. Kumar, M. Sachdeva, and A. Mittal, “Transfer Learning for Image Classification Using VGG19: Caltech-101 Image Data Set,” Journal of Ambient Intelligence and Humanized Computing, pp. 3609-3620, 2023. [https://doi.org/10.1007/s12652-021-03488-z]

- L. Wen, X. Li, X. Li, and L. Gao, “A New Transfer Learning Based on VGG-19 Network for Fault Diagnosis,” in Proceedings of 2019 IEEE 23rd International Conference on Computer Supported Cooperative Work in Design (CSCWD), 2019. [https://doi.org/10.1109/CSCWD.2019.8791884]

- G. Meena, K. K. Mohbey, A. Indian, and S. Kumar, “Sentiment Analysis from Images Using VGG19 Based Transfer Learning Approach,” Procedia Computer Science, Vol. 204, pp. 411-418, 2022. [https://doi.org/10.1016/j.procs.2022.08.050]

- S. Gupta, A. Panwar, S. Gupta, M. Manwal, and M. Aeri, “Transfer Learning Based Convolutional Neural Network (CNN) for Early Diagnosis of Covid19 Disease Using Chest Radiographs,” in Machine Learning and Big Data Analytics (Proceedings of International Conference on Machine Learning and Big Data Analytics (ICMLBDA) 2021), pp. 244-252, 2022. [https://doi.org/10.1007/978-3-030-82469-3_22]

- S. Tammina, “Transfer Learning Using VGG-16 with Deep Convolutional Neural Network for Classifying Images,” International Journal of Scientific and Research Publications (IJSRP), Vol. 9, No. 10, pp. 143-150, October 2019. [https://doi.org/10.29322/IJSRP.9.10.2019.p9420]

- D. Zhang, C. Jiao, and S. Wang, “Smoking Image Detection Based on Convolutional Neural Networks,” in Proceedings of 2018 IEEE 4th International Conference on Computer and Communications, 2018. [https://doi.org/10.1109/CompComm.2018.8781009]

- J. Vargas, S. Alsweiss, O. Toker, R. Razdan, and J. Santos, “An Overview of Autonomous Vehicles Sensors and Their Vulnerability to Weather Conditions,” Sensors, Vol. 21, No. 16, 5397, 2021. [https://doi.org/10.3390/s21165397]

- M. Kulkarni, S. M. Sundaram, and V. Diwakar, “Development of Sensor and Optimal Placement for Smoke Detection in an Electric Vehicle Battery Pack,” in Proceedings of 2015 IEEE International Transportation Electrification Conference (ITEC), 2015. [https://doi.org/10.1109/ITEC-India.2015.7386868]

- D. J. Wales, J. Grand, V. P. Ting, R. D. Burke, K. J. Edler, C. R. Bowen, ... and A. D. Burrows, “Gas Sensing Using Porous Materials for Automotive Applications,” Chemical Society Reviews, Vol. 44, No. 13, pp. 4290-4321, 2015. [https://doi.org/10.1039/C5CS00040H]

- P. Koopman and M. Wagner, “Challenges in Autonomous Vehicle Testing and Validation,” SAE International Journal of Transportation Safety, Vol. 4, No. 1, pp. 15-24, 2016. [https://doi.org/10.4271/2016-01-0128]

- F. A. Butt, J. N. Chattha, J. Ahmad, M. U. Zia, M. Rizwan, and I. H. Naqvi, “On the Integration of Enabling Wireless Technologies and Sensor Fusion for Next-Generation Connected and Autonomous Vehicles,” IEEE Access, Vol. 10, pp. 14643-14668, 2022. [https://doi.org/10.1109/ACCESS.2022.3145972]

- L.-Y. Lv, C.-F. Cao, Y.-X. Qu, G.-D. Zhang, L. Zhao, K. Cao, ... and L.-C. Tang, “Smart Fire-Warning Materials and Sensors: Design Principle, Performances, and Applications,” Materials Science and Engineering: R: Reports, Vol. 150, 100690, August 2022. [https://doi.org/10.1016/j.mser.2022.100690]

- I. Kandel and M. Castelli, “How Deeply to Fine-Tune a Convolutional Neural Network: A Case Study Using a Histopathology Dataset,” Applied Sciences, Vol. 10, No. 10, 3359, 2020. [https://doi.org/10.3390/app10103359]

- S. Panigrahi, A. Nanda, and T. Swarnkar, “A Survey on Transfer Learning,” in Intelligent and Cloud Computing, Singapore: Springer, pp. 781-789, 2021. [https://doi.org/10.1007/978-981-15-5971-6_83]

- K. Simonyan and A. Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition,” arXiv:1409.1556, 2014. [https://doi.org/10.48550/arXiv.1409.1556]

- Ž. Vujovic, “Classification Model Evaluation Metrics,” International Journal of Advanced Computer Science and Applications, Vol. 12, No. 6, 2021. [https://doi.org/10.14569/IJACSA.2021.0120670]

Author Biographies

2017: B.Sc. Computer Science & Engineering, Daffodil International University, Dhaka, Bangladesh

2023: Software Engineer, Samsung Electronics, Dhaka, Bangladesh

Current: M.Sc. Artificial Intelligence Convergence, Chonnam National University, Gwangju, South Korea

※ Research Interests: AI, Deep Learning, Learning Approaches, Classification, and Detection

2023: B.Sc. Computer Engineering, Chonnam National University, Gwangju, South Korea

Current: M.Sc. Artificial Intelligence Convergence, Chonnam National University, Gwangju, South Korea

※ Research Interests: Deep Learning, Spatiotemporal Prediction

1999: B.Sc. Electrical and Computer Engineering, KAIST, Daejeon, South Korea

2001: M.Sc. Electrical and Computer Engineering, KAIST, Daejeon, South Korea

2007: Ph.D. Electrical and Computer Engineering, KAIST, Daejeon, South Korea

2007~2012: Postdoctoral Researcher, University of California Irvine, California, USA

2012~Current: Professor, Chonnam National University, Gwangju, South Korea

※ Research Interests: Intelligent Distributed Systems, SDN/NFV, Big Data Platforms, Artificial Intelligence, Blockchain, Social Networks