Performance Analysis of Learning Algorithms Based on Simplified Maximum Zero-Error Probability

Copyright ⓒ 2023 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Learning algorithms based on the common mean squared error (MSE) criterion are known to perform poorly in non-Gaussian noise environments. In contrast, learning algorithms developed from the minimum error entropy (MEE) criterion can overcome this limitation. One drawback of MEE is that it requires a sufficient number of error samples to calculate the error entropy correctly, which in turn makes the system complicated. A recently proposed learning method for simple and efficient calculation of the error entropy uses the difference between two consecutive error samples, e_k and e_{k-1}, at iteration k. Motivated by the fact that the variance of e_k - e_{k-1} can be larger than that of e_k or e_{k-1}, this study proposes a new simple learning algorithm based on the maximum zero-error probability (MZEP) criterion and analyzes its learning performance through an adaptive equalization experiment in a communication system model. The proposed simplified MZEP (SMZEP) converges about twice as fast and reaches a steady-state MSE about 1 dB lower than the simplified MEE (SMEE), indicating that the SMZEP can be more appropriate than the existing SMEE for learning systems that require efficiency.


Keywords:

Error Entropy, Zero-Error Probability, Efficient Calculation, ITL, Impulsive Noise

Ⅰ. Introduction

Many tasks in machine learning and adaptive signal processing require robustness against noise, rapid learning, and low steady-state error. Learning algorithms based on the common mean squared error (MSE) criterion are known not to yield sufficient performance in non-Gaussian noise environments[1],[2]. On the other hand, learning algorithms developed from the minimum error entropy (MEE) criterion can overcome these obstacles[3],[4]. The MEE criterion is a powerful approach for robust machine learning as well as for non-Gaussian signal processing[4].

The MEE criterion constructs the error entropy from an error probability density estimated by the Parzen density method[5]. In kernel density estimation methods such as the Parzen method, the estimate of the error density converges to the true error density as the number of error samples increases[6]. That is, MEE requires a sufficient number of error samples to calculate the error entropy correctly, which in turn makes the system complicated.

For this reason, no study had used a reduced number of sample points until an approach that calculates the error entropy from only the current error sample e_k and the previous one e_{k-1} was recently proposed[7]. Contrary to the expectation of researchers working on MEE, this reduced-computation simplified MEE (SMEE) has shown almost equal performance[7].

The SMEE criterion contains the term e_k - e_{k-1}, whose variance can be larger than that of e_k or e_{k-1} itself; for example, if consecutive errors are uncorrelated with equal variance, the variance of their difference is twice that of either sample. The maximum zero-error probability (MZEP) criterion, in contrast, operates on the error samples themselves rather than on their differences[8]. This observation leads us to propose a new simple learning algorithm based on the MZEP criterion that uses only e_k and e_{k-1}. In this paper, the learning performance of the proposed simplified MZEP (SMZEP) is analyzed through an adaptive equalization experiment in a communication system model with severe multipath fading distortion and impulsive noise[9].

Ⅱ. MSE Criterion and LMS Algorithm

In the case of an FIR linear filter, a linear combiner with L weights W_k at time k produces the output y_k = W_k^T X_k from the input vector X_k[1]. Defining the error as e_k = a_k - y_k, where a_k is the desired value or training symbol, the MSE is given by

$$\mathrm{MSE} = E\left[e_k^2\right] \tag{1}$$

Using the instantaneous error power instead of estimating the ensemble average of the error power, we obtain the weight update equation of the LMS algorithm[1]:

| (2) |

where μ_LMS is a step size (convergence parameter) that controls stability. Because the LMS algorithm employs the instantaneous error power instead of the statistically averaged error power in (1), it can be regarded as a simplified version of the MSE-based algorithms that use the ensemble average of the error power.
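The LMS step described above can be sketched in a few lines of Python. This is a minimal illustration for a real-valued linear combiner, not the authors' implementation: the function name `lms_update` is hypothetical, and the factor 2 comes from differentiating the instantaneous error power e_k^2; many texts absorb it into the step size.

```python
from typing import List, Sequence, Tuple


def lms_update(weights: Sequence[float], x: Sequence[float],
               desired: float, mu: float) -> Tuple[List[float], float]:
    """Perform one LMS step and return (new_weights, error).

    Assumption: real-valued signals, and the gradient of e_k^2 is used
    directly, so the update carries an explicit factor of 2.
    """
    # Linear combiner output y_k = W_k^T X_k
    y = sum(w * xi for w, xi in zip(weights, x))
    # Error between the desired symbol and the output
    e = desired - y
    # Steepest descent on the instantaneous error power
    new_weights = [w + 2.0 * mu * e * xi for w, xi in zip(weights, x)]
    return new_weights, e


# One step from zero weights
w, err = lms_update([0.0, 0.0], [1.0, 0.5], desired=1.0, mu=0.1)
print(w, err)
```

Because the update is proportional to e_k itself, a single large impulse in the error produces an equally large jump in the weights, which is the sensitivity that the entropy-based criteria in the following sections aim to reduce.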

Ⅲ. MZEP Criterion based on ITL

The performance criterion based on information theoretic learning (ITL) is constructed from the error probability density estimated by the Parzen window method[5].

In this section we briefly introduce the ITL-based criterion that maximizes the zero-error probability f_E(e = 0) so as to concentrate the error samples around zero. The error probability density can be estimated by the Parzen window method with sample size N and a Gaussian kernel of kernel size σ[5]:

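$$f_E(e) = \frac{1}{N}\sum_{i=k-N+1}^{k} G_\sigma(e - e_i) \tag{3}$$

$$G_\sigma(x) = \frac{1}{\sigma\sqrt{2\pi}}\exp\left(-\frac{x^2}{2\sigma^2}\right) \tag{4}$$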

This criterion can be developed by minimizing the quadratic probability distance (QD) between the PDF of the error signal f_E(e) and the Dirac delta function of the error δ(e), so that f_E(e) is forced to take the shape of δ(e).

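$$QD\left[f_E(e), \delta(e)\right] = \int \left(f_E(e) - \delta(e)\right)^2 de = \int f_E^2(e)\,de - 2 f_E(0) + \int \delta^2(e)\,de \tag{5}$$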

The term ∫δ²(e)de is not defined mathematically but can be treated as a constant since it does not depend on the system weights.

Minimization of QD in (5) induces minimization of ∫f_E²(e)de and maximization of f_E(0) simultaneously. Note that minimizing ∫f_E²(e)de amounts to maximizing the error entropy, which spreads the error samples apart. This clearly contradicts the MEE criterion, which maximizes ∫f_E²(e)de[3],[4]. To avoid this contradiction, the MZEP criterion, which maximizes only the term f_E(0) in (5), has been proposed in [8] as:

$$\max_{W} f_E(0) \tag{6}$$

The estimated zero-error probability can be calculated by replacing e with zero in the kernel density estimation method (3) with the sample size N.

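$$f_E(0) = \frac{1}{N}\sum_{i=k-N+1}^{k} G_\sigma(-e_i) = \frac{1}{N}\sum_{i=k-N+1}^{k} G_\sigma(e_i) \tag{7}$$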
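The Parzen estimate in (3) and its value at zero in (7) can be illustrated with a short Python sketch. It assumes a Gaussian kernel with the usual normalization; the function names are hypothetical and chosen only for this example.

```python
import math
from typing import Sequence


def gaussian_kernel(x: float, sigma: float) -> float:
    """Zero-mean Gaussian kernel of size sigma, evaluated at x."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))


def parzen_density(e: float, errors: Sequence[float], sigma: float) -> float:
    """Parzen window estimate of the error density at point e."""
    return sum(gaussian_kernel(e - ei, sigma) for ei in errors) / len(errors)


def zero_error_probability(errors: Sequence[float], sigma: float) -> float:
    """Density estimate at e = 0, the quantity the MZEP criterion maximizes."""
    return parzen_density(0.0, errors, sigma)


# Errors concentrated near zero score higher than widely spread errors
tight = zero_error_probability([0.05, -0.02, 0.01], sigma=0.8)
spread = zero_error_probability([1.5, -2.0, 0.9], sigma=0.8)
print(tight > spread)
```

The comparison at the end shows why maximizing this quantity pulls the error samples toward zero: each sample contributes most when it lies within about one kernel size of the origin.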

Ⅳ. Simplified MZEP Criterion and related Algorithms

In the Parzen window method in (3), the estimate of the error density converges to the true error density f_E(e) as N increases[6]. Likewise, for the error entropy criterion[3], the estimated error entropy approaches the Renyi entropy as N increases. For these reasons, no study had reduced the number of sample points to as few as N = 2, that is, to e_k and e_{k-1}, until the approach of using only e_k and e_{k-1} to calculate the error entropy was recently proposed in [7]. The SMEE criterion V°(e_k) can be written as

| (8) |

According to the results in [7], the learning performance of SMEE-based communication channel equalization is similar to that obtained with a sufficiently large sample size N. Inspired by this idea, we propose to use only e_k and e_{k-1} in the zero-error probability criterion and analyze its performance in comparison with the SMEE in (8).

With only the two samples e_k and e_{k-1} (N = 2), (7) becomes

$$f_E(0) = \frac{1}{2}\left[G_\sigma(e_k) + G_\sigma(e_{k-1})\right] \tag{9}$$

Maximization of (9) forces the error samples e_k and e_{k-1} toward zero.

| (10) |

The simplified criterion in (9) and (10) summarizes the SMZEP.

To maximize the criterion (9) with respect to the weights of the system in Section II, the gradient ascent method with the step size μ_SMZEP can be employed as

| (11) |

where the gradient can be evaluated from

| (12) |

So the weight update equation (SMZEP algorithm) can be expressed as

| (13) |
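As a check on the steps leading to the update, the gradient can be worked out explicitly. The following is a sketch under stated assumptions: the criterion is the two-sample Parzen estimate with a Gaussian kernel, the error is e_k = a_k - W^T X_k with X_k the input vector at time k, and e_{k-1} is evaluated with the current weights. Constant factors such as 1/(2σ²) may be absorbed into the step size in the original equations.

```latex
\begin{align*}
f_E(0) &= \tfrac{1}{2}\left[G_\sigma(e_k) + G_\sigma(e_{k-1})\right],
\qquad
G_\sigma(x) = \frac{1}{\sigma\sqrt{2\pi}}\exp\!\left(-\frac{x^2}{2\sigma^2}\right) \\[4pt]
\frac{\partial G_\sigma(e_k)}{\partial W}
&= -\frac{e_k}{\sigma^2}\,G_\sigma(e_k)\,\frac{\partial e_k}{\partial W}
 = \frac{e_k}{\sigma^2}\,G_\sigma(e_k)\,X_k
\qquad \text{since } \frac{\partial e_k}{\partial W} = -X_k \\[4pt]
\frac{\partial f_E(0)}{\partial W}
&= \frac{1}{2\sigma^2}\left[e_k\,G_\sigma(e_k)\,X_k + e_{k-1}\,G_\sigma(e_{k-1})\,X_{k-1}\right] \\[4pt]
W_{k+1} &= W_k + \frac{\mu_{SMZEP}}{2\sigma^2}\left[e_k\,G_\sigma(e_k)\,X_k + e_{k-1}\,G_\sigma(e_{k-1})\,X_{k-1}\right]
\end{align*}
```

In this form each error sample is weighted by its own kernel value, so a sample far from zero contributes little to the update.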

For comparison, SMEE algorithm in [7] can be written as

| (14) |

Note that the SMZEP algorithm applies the Gaussian kernel to the error samples e_k and e_{k-1} separately, whereas the SMEE applies it to the difference between e_k and e_{k-1}. Comparing (2) and (13), we can see that the error term of (13) takes a modified form, while (2) contains only e_k. Likewise, the SMEE in (14) can be regarded as having a modified error. In terms of its modified error, the SMZEP algorithm becomes

| (15) |

And SMEE algorithm becomes

| (16) |

It is worthwhile to observe how the performance differs as a result of the different modified errors of the SMZEP and the SMEE, and the role of these modified errors needs to be analyzed.
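To make the idea of a modified error concrete, the sketch below implements one SMZEP-style update in plain Python. It is an illustration under assumptions, not the authors' code: the modified error is taken to be e·G_σ(e), which follows from differentiating a Gaussian kernel; the constant 1/(2σ²) is kept explicit rather than absorbed into the step size; and the names `modified_error` and `smzep_update` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def gaussian_kernel(x: float, sigma: float) -> float:
    """Zero-mean Gaussian kernel of size sigma, evaluated at x."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))


def modified_error(e: float, sigma: float) -> float:
    """Error weighted by its own kernel value (assumed form e * G_sigma(e))."""
    return e * gaussian_kernel(e, sigma)


def smzep_update(weights: Sequence[float],
                 x_k: Sequence[float], x_km1: Sequence[float],
                 a_k: float, a_km1: float,
                 mu: float, sigma: float) -> Tuple[List[float], float, float]:
    """One gradient-ascent step on the two-sample zero-error probability."""
    # Both errors are evaluated with the current weights
    e_k = a_k - sum(w * xi for w, xi in zip(weights, x_k))
    e_km1 = a_km1 - sum(w * xi for w, xi in zip(weights, x_km1))
    scale = mu / (2.0 * sigma * sigma)
    new_weights = [
        w + scale * (modified_error(e_k, sigma) * xk
                     + modified_error(e_km1, sigma) * xkm1)
        for w, xk, xkm1 in zip(weights, x_k, x_km1)
    ]
    return new_weights, e_k, e_km1


# A moderate error drives the update; an impulse-sized error is suppressed
print(modified_error(0.8, 0.8), modified_error(50.0, 0.8))
```

Under this assumed form the modified error is largest in magnitude at |e| = σ and decays toward zero for very large |e|, so an error sample hit by a strong impulse has almost no effect on the weights.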

Ⅴ. Performance Results and Discussion

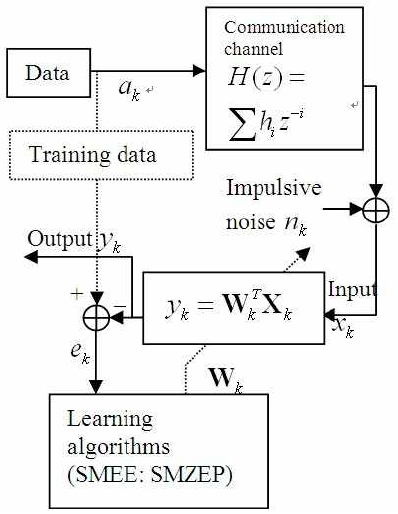

In this section, the learning performance of the SMEE and SMZEP algorithms is discussed, and the resulting properties are analyzed in a communication channel equalization experiment. The experimental communication system is shown in Fig. 1.

The experimental setup is composed of a data generator that produces the random symbol a_k, a communication channel H(z), and a receiver equipped with a linear combiner. The random symbol a_k at time k is chosen with equal probability from the symbol set {±1, ±3}, distorted by the multipath channel H(z), and corrupted by impulsive noise n_k.

Two channel models, H1 and H2, are used for the transfer function H(z)[9].

| (17) |

| (18) |

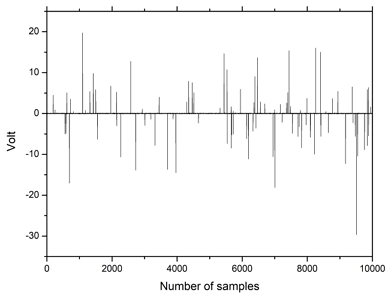

Both channels have spectral nulls and induce severe intersymbol interference (ISI). The impulsive noise consists of impulses and background white Gaussian noise. The impulses are generated by a Poisson process with variance 50 and occurrence rate 0.03[10],[11]. The variance of the background white Gaussian noise is 0.001. A sample of the impulsive noise is depicted in Fig. 2.
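A noise sequence with these statistics can be approximated with the short Python sketch below. Several choices here are assumptions rather than details from the text: Poisson arrivals are approximated by an independent per-sample occurrence with probability 0.03, impulse amplitudes are drawn from a zero-mean Gaussian with variance 50, and the function name and seed are hypothetical.

```python
import random
from typing import List


def impulsive_noise(n: int, impulse_var: float = 50.0, rate: float = 0.03,
                    background_var: float = 0.001, seed: int = 0) -> List[float]:
    """Generate n samples of background Gaussian noise plus sparse impulses."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        # Background white Gaussian noise is always present
        value = rng.gauss(0.0, background_var ** 0.5)
        # Assumed Bernoulli approximation of Poisson impulse arrivals
        if rng.random() < rate:
            value += rng.gauss(0.0, impulse_var ** 0.5)
        samples.append(value)
    return samples


noise = impulsive_noise(20000)
large = sum(1 for v in noise if abs(v) > 1.0)
print(len(noise), large)
```

With these settings only a few percent of the samples carry an impulse, but those samples dominate the total noise power, which is what makes the environment strongly non-Gaussian.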

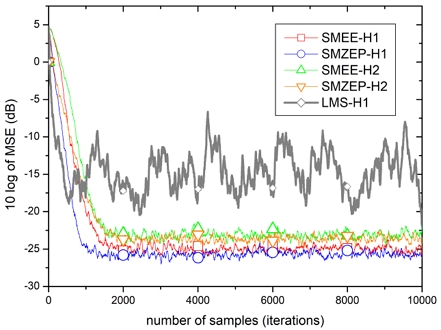

The linear combiner in the receiver has 11 weights, which are updated by the SMEE or the SMZEP with the common step size μ_SMZEP = μ_SMEE = 0.02 and the kernel size σ = 0.8. Fig. 3 shows the MSE learning curves of the SMEE and the SMZEP for the two channel models. The learning curve of the LMS in (2) cannot converge below -16 dB. In contrast, the SMEE and SMZEP algorithms show fast and stable convergence to below -23 dB. In H1, the SMZEP (blue) converges in about 1200 samples and the SMEE (red) in about 2000 samples. The steady-state MSE is -26 dB for the SMZEP and -25 dB for the SMEE. In both convergence speed and minimum MSE, the proposed SMZEP shows superior performance. Similar results are observed for channel model H2, where the SMZEP also reaches a lower minimum MSE.
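For reading the learning curves, it can help to relate decibel values to error power. The sketch below assumes the usual definition of MSE in dB as 10·log10 of the mean squared error; how the curves in the paper were averaged is not stated, and the helper names are hypothetical.

```python
import math
from typing import Sequence


def mse_db(errors: Sequence[float]) -> float:
    """Mean squared error of a block of error samples, expressed in dB."""
    power = sum(e * e for e in errors) / len(errors)
    return 10.0 * math.log10(power)


def db_gap_to_power_ratio(gap_db: float) -> float:
    """Convert a difference in dB to the corresponding ratio of error powers."""
    return 10.0 ** (gap_db / 10.0)


# A 1 dB gap in steady-state MSE as a ratio of error powers
print(db_gap_to_power_ratio(1.0))
# Errors of magnitude 0.1 correspond to an MSE of about -20 dB
print(mse_db([0.1, -0.1, 0.1, -0.1]))
```

Under this definition, a steady-state gap of 1 dB corresponds to roughly 26% more error power for the algorithm with the higher curve.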

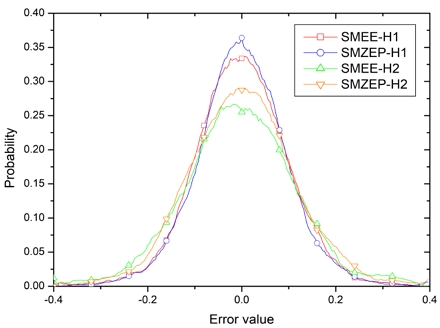

Fig. 4, which compares the system error distributions, shows the performance differences more clearly. The error value on the horizontal axis is defined as the difference between the transmitted symbol and the corresponding receiver output, and the error probability is on the vertical axis. As shown in Fig. 4, the system error samples of both the SMEE and the SMZEP produce error distributions highly concentrated around zero. In particular, the proposed SMZEP has a significantly narrower bell-shaped error distribution than the SMEE in both channel models, revealing that more of the SMZEP error samples lie near zero than those of the SMEE.

Fig. 4. Probability distribution of error samples ranging from -0.4 to 0.4 (red: SMEE-H1, blue: SMZEP-H1, green: SMEE-H2, orange: SMZEP-H2)

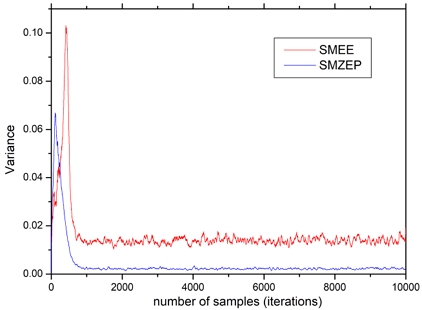

To investigate the cause of the performance difference in more detail, we present the fluctuation (variance) of the center weight of each algorithm in Fig. 5. After sample number 1000, the center weights of both algorithms reach a steady state but behave differently. The variance of the center weight stays at about 0.015 for the SMEE and about 0.0025 for the SMZEP, which means that the center weight of the SMEE fluctuates with about six times the variance of that of the SMZEP.

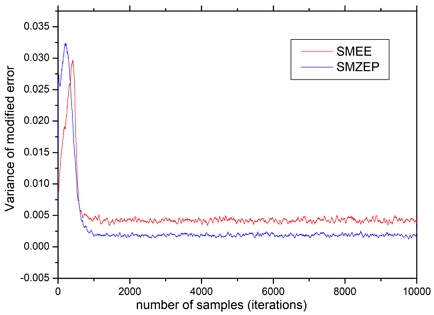

As discussed in Section IV, the modified errors of the SMZEP and the SMEE are compared in Fig. 6 in terms of fluctuation, that is, the variance of the modified error. The variance of the SMEE modified error converges to 0.042, while that of the SMZEP modified error converges to 0.017, which is lower by a factor of more than 2. The larger variance of the SMEE modified error can thus be understood to cause its degradation in MSE convergence and error distribution relative to the SMZEP.

Ⅵ. Conclusion

In non-Gaussian noise environments, the common MSE criterion does not provide its related learning algorithms with acceptable learning performance. The ITL-based approach overcomes this problem, but it is known to require a sufficiently large data sample size, which makes the system complicated. A recent study proposed a simplified MEE that uses only two error samples, e_k and e_{k-1}, in each iteration and yields sufficiently good performance even with two error samples. In this paper, a zero-error probability criterion that, like the SMEE, uses only the two error samples e_k and e_{k-1} is proposed. The proposed SMZEP converges about twice as fast and reaches a steady-state MSE about 1 dB lower than the SMEE. In addition, more of the system error samples of the SMZEP are concentrated around zero than those of the SMEE. The analysis of the cause of the performance difference shows that the modified error of the SMZEP fluctuates less, which leads to less fluctuation of the weights after convergence than in the SMEE. We therefore conclude that the proposed SMZEP can be an effective candidate for adaptive learning systems that might otherwise employ the existing SMEE algorithm.

Acknowledgments

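This work is a result of the Local Government-University Cooperation-based Regional Innovation Project, carried out with the support of the National Research Foundation of Korea and funded by the Ministry of Education in 2023 (2022RIS-005).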

References

- S. Haykin, Adaptive Filter Theory, 4th ed. Upper Saddle River, NJ: Prentice Hall, 2001.

- J. Principe, D. Xu, and J. Fisher, “Information Theoretic Learning,” in Unsupervised Adaptive Filtering, New York, NY: Wiley, pp. 265-319, 2000.

- D. Erdogmus and J. C. Principe, “An Error-Entropy Minimization Algorithm for Supervised Training of Nonlinear Adaptive Systems,” IEEE Transactions on Signal Processing, Vol. 50, No. 7, pp. 1780-1786, July 2002. https://doi.org/10.1109/TSP.2002.1011217

- Y. Li, B. Chen, N. Yoshimura, and Y. Koike, “Restricted Minimum Error Entropy Criterion for Robust Classification,” IEEE Transactions on Neural Networks and Learning Systems, Vol. 33, No. 11, pp. 6599-6612, November 2022. https://doi.org/10.1109/TNNLS.2021.3082571

- E. Parzen, “On Estimation of a Probability Density Function and Mode,” The Annals of Mathematical Statistics, Vol. 33, No. 3, pp. 1065-1076, September 1962. https://doi.org/10.1214/aoms/1177704472

- R. O. Duda, P. E. Hart, and D. G. Stork, Pattern Classification, 2nd ed. New York, NY: John Wiley & Sons, 2012.

- N. Kim and K. Kwon, “A Simplified Minimum Error Entropy Criterion and Related Adaptive Equalizer Algorithms,” The Journal of Korean Institute of Communications and Information Sciences, Vol. 48, No. 3, pp. 312-318, March 2023. https://doi.org/10.7840/kics.2023.48.3.312

- N. Kim and K. Kwon, “Performance Enhancement of Blind Algorithms Based on Maximum Zero Error Probability,” Journal of Theoretical and Applied Information Technology, Vol. 95, No. 22, pp. 6056-6067, November 2017.

- J. Proakis, Digital Communications, 2nd ed. New York, NY: McGraw-Hill, 1989.

- I. Santamaria, P. P. Pokharel, and J. C. Principe, “Generalized Correlation Function: Definition, Properties, and Application to Blind Equalization,” IEEE Transactions on Signal Processing, Vol. 54, No. 6, pp. 2187-2197, June 2006. https://doi.org/10.1109/TSP.2006.872524

- N. Kim, “Kernel Size Adjustment Based on Error Variance for Correntropy Learning Algorithms,” The Journal of Korean Institute of Communications and Information Sciences, Vol. 46, No. 2, pp. 225-230, February 2021. https://doi.org/10.7840/kics.2021.46.2.225

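About the Authors

1986: B.S., Department of Electronic Engineering, Yonsei University

1988: M.S., Department of Electronic Engineering, Graduate School, Yonsei University

1991: Ph.D., Department of Electronic Engineering, Graduate School, Yonsei University

1998-present: Professor, Kangwon National University

※ Research interests: communication signal processing, information theoretic learning

1993: B.S., Department of Computer Science, Kangwon National University

1995: M.S., Department of Computer Science, Graduate School, Kangwon National University

2000: Ph.D., Department of Computer Science, Graduate School, Kangwon National University

1998-2002: Professor, Department of Internet Information, Dongwon College

2002-present: Professor, Department of Electronic, Information and Communication Engineering, Kangwon National University

※ Research interests: pattern recognition, Internet of Things (IoT) applications, machine learning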