Improved Accuracy of Vehicle Part Detection and Damage Classification using YOLO Algorithm

Copyright ⓒ 2024 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Continuous advancements in deep learning and computer vision have led to the use of these technologies in the field of vehicle maintenance. This study developed vehicle part detection and damage classification models to help drivers develop effective repair plans for improved vehicle safety and performance. The You Only Look Once (YOLO) algorithm and transfer learning were used to train the vehicle part detection model, and a transformer was used to train the damage classification model. The proposed models achieved a vehicle part detection accuracy of up to 95%, an improvement of 3% over the existing model, and a damage classification accuracy of 89%, an improvement of 6% over the existing model. With the recent rapid development of computer vision and deep learning technology, vehicle part detection and damage classification are expected to provide a new scientific method for vehicle repair.

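Abstract (Korean version)

With the continuous development of deep learning and computer vision, several advanced technologies are being introduced into the field of vehicle maintenance. This paper proposes a vehicle part detection model and a damage classification model that can better assist drivers by developing repair plans that improve vehicle safety and performance. This study uses YOLO (You Only Look Once) and transfer learning for the vehicle part detection model, and a transformer model for the damage classification model. The proposed model showed a vehicle part detection accuracy of up to 95%, up to 3% higher than the existing model. The proposed model also showed a damage classification accuracy of up to 89%, up to 6% higher than the existing model. With the recent rapid development of computer vision and deep learning technology, vehicle part detection and damage classification are expected to provide a new scientific method for vehicle repair.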

Keywords:

Computer Vision, Deep Learning, You Only Look Once, Vehicle Part Detection, Damage Classification

Keywords (Korean version):

Computer Vision, Deep Learning, YOLO, Vehicle Part Detection, Damage Classification

Ⅰ. Introduction

In today's society, vehicles have become the main means of transportation in daily life. As the number of vehicles continues to increase, the problem of vehicle damage has gradually come to the fore. Timely and accurate detection of vehicle parts and classification of vehicle damage have therefore become pressing problems[1]-[3]. Manual inspection of large numbers of vehicles is inefficient, time-consuming, and subjective. Computer vision and machine learning techniques provide a new way to solve these problems[4],[5].

In recent years, there has been a surge of interest in computer vision for vehicle part detection and damage classification[6]-[8]. Because Residual Network-50 (ResNet-50) has proven effective for image classification (extracting high-quality image features on ImageNet), Parhizkar et al.[9] employed this model to extract informative details about the damaged car and the background. They also indicate that deeper networks (with more feature-extraction layers) yield better results in detecting the damaged parts of a car. Patil et al.[4] employed Convolutional Neural Network (CNN) based methods to classify car damage types. Specifically, they consider common damage types such as bumper dent, door dent, glass shatter, head lamp broken, tail lamp broken, scratch, and smash.

This paper proposes a vehicle part detection model and a damage classification model that use You Only Look Once (YOLO) and transformer technologies to detect vehicle parts and classify their damage for repair. Together, the models can efficiently provide comprehensive vehicle repair recommendation plans.

The purpose of the proposed model is to help drivers develop better repair plans that improve vehicle safety and performance. Vehicle images are collected, and YOLO with transfer learning is used to detect and extract an image of each vehicle part. These part images form the dataset for a transformer-based damage classification model, which supports detailed vehicle repair plans for users. Transfer learning is the reuse of a model pre-trained on one task as the starting point for a new problem.

The proposed model uses YOLO to extract each part of the vehicle and then uses a transformer to classify the damage of each part.

The proposed approach uses a pre-trained YOLO model and transfer learning to train the vehicle part detection model, which detects the vehicle parts in an image and outputs their bounding boxes. Each detected part is then cropped from the original image, yielding a series of image segments that each contain one part. Finally, these segment images are used to train a transformer-based damage classification model that classifies vehicle damage.
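The extraction step described above, which turns each detected bounding box into a separate part image, can be sketched in plain Python. This is a minimal sketch, not the authors' implementation: it assumes the image is a nested list indexed as `image[row][col]` and that each detection is a hypothetical `(x1, y1, x2, y2)` pixel box with exclusive right and bottom edges. A real pipeline would more likely crop a NumPy array or a Pillow image.

```python
from typing import List, Sequence, Tuple

# Assumed representation: image[row][col], each pixel any value (e.g. an RGB tuple).
Image = List[List[object]]
# Hypothetical box format: (x1, y1, x2, y2) in pixels, x2/y2 exclusive.
Box = Tuple[int, int, int, int]


def crop_parts(image: Image, boxes: Sequence[Box]) -> List[Image]:
    """Return one sub-image per detected box, clamped to the image bounds."""
    height = len(image)
    width = len(image[0]) if height else 0
    crops = []
    for x1, y1, x2, y2 in boxes:
        # Clamp the box so detections that spill past the border stay valid.
        x1, x2 = max(0, x1), min(width, x2)
        y1, y2 = max(0, y1), min(height, y2)
        if x2 <= x1 or y2 <= y1:
            continue  # skip boxes that are empty after clamping
        crops.append([row[x1:x2] for row in image[y1:y2]])
    return crops
```

Each returned crop can then be resized and labeled to build the damage classification dataset; clamping matters because detectors can emit boxes that extend slightly beyond the image.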

This paper collects and labels a large number of vehicle images to guide vehicle repair recommendations. The proposed model has the following features. First, it can detect each vehicle part rapidly. Second, it maintains detection accuracy across various environments. Third, it accounts for correlations between different damage types, which enhances classification accuracy and robustness.

Based on an analysis of recent papers, we design a vehicle part detection model and a vehicle damage classification model based on YOLO, transfer learning, and transformers to improve the efficiency of vehicle maintenance. By collecting vehicle data and training the models with machine learning, we aim to achieve higher accuracy and provide drivers with more detailed repair recommendation reports.

The remainder of this paper is organized as follows. Chapter 2 discusses the current status of and previous work on vehicle part detection and damage classification. Chapter 3 proposes the vehicle part detection model and the damage classification model, Chapter 4 compares the proposed models with existing models, and Chapter 5 concludes the paper.

Ⅱ. Related Works

2-1 Current Status of the Vehicle Parts Detection and Damage Classification

Recent studies indicate that using advanced technologies to improve the accuracy of vehicle part detection and damage classification has become state of the art in the field of vehicle maintenance. In this field, effective detection of the condition of vehicle parts and classification of damage are critical to vehicle performance and safety. These studies provide useful references for applying technology and optimizing performance in vehicle part detection and damage classification. Table 1 summarizes studies that have applied vehicle part detection and damage classification in the vehicle field to date.

2-2 Previous Works

Wang Liqun et al.[10] proposed a deep learning method for vehicle part detection that mainly uses the Visual Geometry Group-16 Layers (VGG-16) network and the Inception-v3 module to improve detection accuracy. However, the method is limited by its image data, because most of its vehicle part detection first converts color images into grayscale images and then performs detection.

Thonglek et al.[11] proposed a damaged vehicle part detection platform consisting of four modules: a data labeling module for insurance experts, a deep learning Application Programming Interface (API) for data scientists, a web monitoring application for operators, and a LINE official integration for field employees.

Jayawardena[12] proposed a method for detecting vehicle scratch damage by registering a 3D Computer-Aided Design (CAD) model (ground truth model) of an undamaged vehicle on the image of the damaged vehicle. The approach relies on precise alignment of the 3D CAD model of the undamaged vehicle with the image of the damaged vehicle, which facilitates the recognition and localization of scratches. By using the undamaged vehicle model as a reference, the method provides a systematic and accurate means of detecting scratches and assessing their extent.

The introduction of a 3D CAD model into the analysis enhances the robustness and reliability of the damage detection process. This contribution by Jayawardena[12] marks a significant advance in vehicle damage detection, particularly in scratch recognition.

Ⅲ. Vehicle Parts Detection and Damage Classification

3-1 Overview

With the continuous advancement of Artificial Intelligence (AI) and computer vision, the field of vehicle maintenance has also adopted advanced technologies, including computer vision and deep learning techniques. By combining computer vision and deep learning methods, users can detect vehicle parts and damage and thereby obtain better repair services. The need to detect vehicle parts and classify damage automatically is increasing because doing so saves time and improves accuracy.

This paper proposes a vehicle part detection model and a damage classification model that allow users to obtain a detailed vehicle repair plan. The basic design consists of seven stages: image collection, training of the vehicle part detection model, vehicle part detection results, extraction of each part image, image processing, training of the damage classification model, and damage classification results.

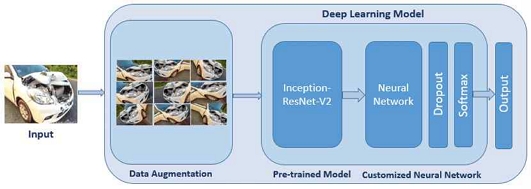

The proposed models collect vehicle images and use YOLO to train a model that detects each vehicle part. The resulting part images are then used to train a transformer model that classifies the damage types. Fig. 1 shows the damage detection and classification method. The process uses a ResNet-101 feature pyramid network as the backbone, initializes the model weights from a pre-trained model, and trains only the network heads to improve model performance.

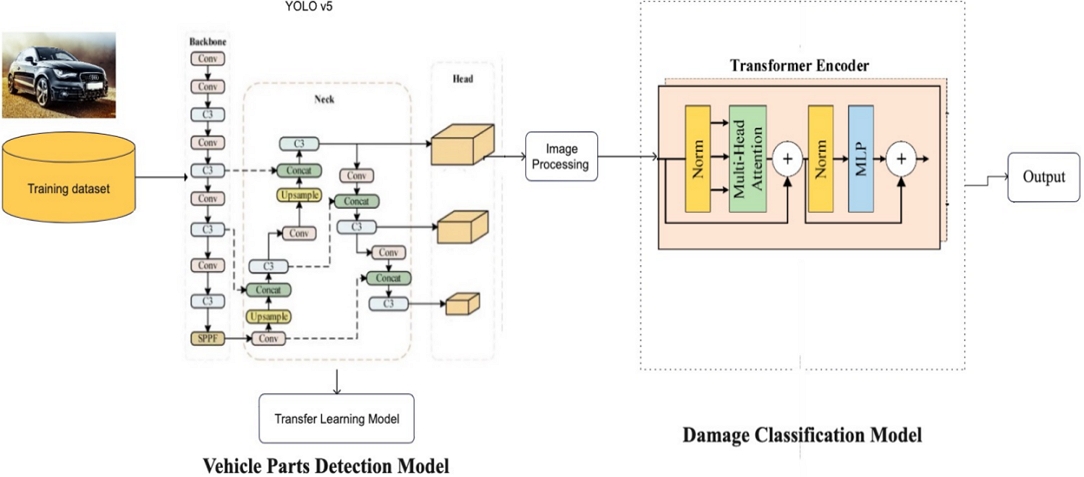

Fig. 2 shows the process of the vehicle part detection model and the damage classification model. As the figure shows, the proposed models detect each part in an image and classify its damage, so that users who need a detailed vehicle repair plan receive more accurate results.

3-2 System Design

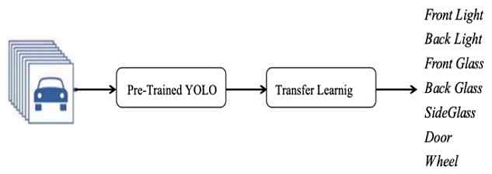

The vehicle part detection model consists mainly of a dataset, a pre-trained YOLO model, and transfer learning, as shown in Fig. 3. During detection, an image of each vehicle part is extracted from the original image, and these images form the image set for the damage classification model.

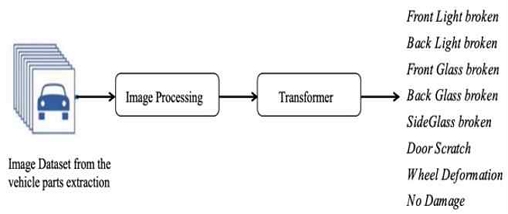

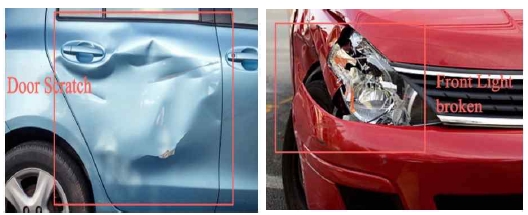

The damage classification model classifies damage types such as front light broken, back light broken, front glass broken, back glass broken, side glass broken, door scratch, and wheel deformation, as shown in Fig. 4. Drivers can obtain repair recommendation plans based on the damage classification results.

3-3 System Process

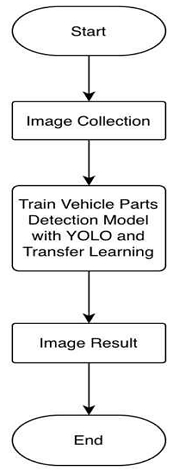

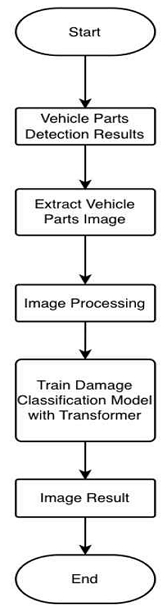

The system proposed in this paper consists of two models. The flow chart of the vehicle part detection model is shown in Fig. 5, and the flow chart of the damage classification model is shown in Fig. 6.

The vehicle part detection model (Fig. 5) consists of image collection, model training, and result generation.

⦁ Image Collection

We collected vehicle images for this research from public datasets such as Kaggle datasets and the Common Objects in Context (COCO) dataset, and labeled them. The vehicle part classes are front light, back light, front glass, back glass, side glass, door, and wheel.

⦁ Train Vehicle Parts Detection Model

We used a pre-trained You Only Look Once (YOLO) model and transfer learning to train the vehicle part detection model.

⦁ Image Result

Given a vehicle image as input, the trained model produces a result image showing each detected vehicle part.
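The labeling mentioned under Image Collection is not described in detail. YOLO training tools commonly expect one text line per object in the form `class_id x_center y_center width height`, with all four coordinates normalised by the image width and height. The sketch below converts a pixel-space box into that form for the seven part classes listed above. The class-index order and the `(x1, y1, x2, y2)` input format are assumptions; the paper does not state which label format it used.

```python
from typing import Tuple

# The seven part classes from the text; this index order is an assumption.
PART_CLASSES = ["front light", "back light", "front glass", "back glass",
                "side glass", "door", "wheel"]


def to_yolo_line(class_name: str, box: Tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel-space (x1, y1, x2, y2) box into a YOLO-style label line."""
    x1, y1, x2, y2 = box
    class_id = PART_CLASSES.index(class_name)
    # YOLO-style labels store the box centre and size, normalised to [0, 1].
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

For example, `to_yolo_line("wheel", (100, 200, 300, 400), 640, 480)` yields `"6 0.312500 0.625000 0.312500 0.416667"`. Normalised coordinates keep the labels valid when images are resized during training.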

The damage classification model (Fig. 6) consists of the vehicle part detection result image, vehicle part image extraction, image processing, model training, and the result image.

⦁ Image Result of Vehicle Parts Detection

The vehicle part detection model produces the result image.

⦁ Vehicle Parts Image Extraction

From the result image, we extract an image of each part and use these images as training data.

⦁ Image Processing

We compile these images into a dataset, resize them, and label them.

⦁ Train Model

We train the damage classification model on this dataset using a transformer.

⦁ Image Result

Given a vehicle image as input, the trained model produces a result image that shows the damage types.
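The paper does not describe its transformer architecture in detail. As an illustration of the mechanism every transformer layer is built on, the sketch below computes scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, over a short sequence of token vectors. In a Vision Transformer the tokens would be embeddings of image patches taken from a part image; whether the authors used a Vision Transformer, and with what configuration, is an assumption not confirmed by the text.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row per token


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of token vectors."""
    d_k = len(k[0])
    out = []
    for q_i in q:
        # Similarity of this query token to every key token.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k)
                  for k_j in k]
        weights = softmax(scores)
        # The output token is the attention-weighted average of the value vectors.
        out.append([sum(w * v_j[c] for w, v_j in zip(weights, v))
                    for c in range(len(v[0]))])
    return out
```

Because each output mixes information from all tokens, attention lets the classifier relate distant regions of a part image (for example, a crack pattern spanning a whole glass panel), which is the usual motivation for using transformers in image classification.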

Ⅳ. Evaluation and Results

In this chapter, the vehicle part detection model and the vehicle damage classification model were implemented and tested.

4-1 Experiments

For the vehicle part detection model, we use public datasets such as Kaggle datasets and the COCO dataset. For the damage classification model, we use part images extracted from the vehicle part detection result images.

The dataset details are as follows. For vehicle part detection, we selected 1,000 training images and 700 test images, for a total of 1,700. For damage classification, we extracted part images to form 2,000 training images and 700 test images, for a total of 2,700, which were labeled by completely different people. This is intended to enhance the robustness of the models and reduce overfitting.
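The paper reports the split sizes but not how images were assigned to the training and test sets. A minimal, hypothetical way to make such a split reproducible is to shuffle with a fixed seed and cut at the training size, as below; the function name and seed are illustrative, not the authors' procedure.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T], n_train: int,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of items with a fixed seed and split it into train/test."""
    if not 0 <= n_train <= len(items):
        raise ValueError("n_train must be between 0 and len(items)")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded RNG keeps the split reproducible
    return shuffled[:n_train], shuffled[n_train:]
```

For the detection data, `split_dataset(image_ids, 1000)` on 1,700 IDs gives 1,000 training and 700 test items. One design point worth checking for the damage data: if several part crops come from the same vehicle photo, splitting crops independently can put views of the same vehicle in both sets, so grouping crops by source image before splitting gives a cleaner test set.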

4-2 Performance Evaluation

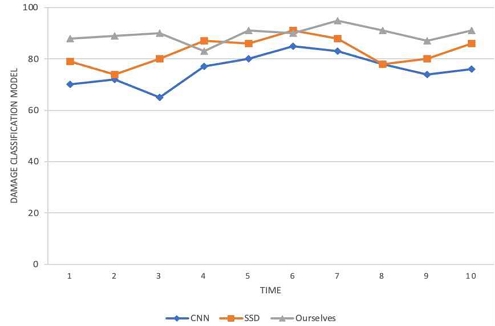

For vehicle part detection, Fig. 7 compares the proposed method with CNN and Single Shot MultiBox Detector (SSD) methods. Using the same image dataset, we trained the vehicle part detection model 10 times with each of the CNN, SSD, and proposed methods. Fig. 7 shows the accuracy of each run for each method; the proposed method achieves higher accuracy than the other methods.
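Because each method was trained 10 times, the per-run accuracies in the figure can also be summarized by their mean and spread, which makes it easier to judge whether the gap between methods exceeds run-to-run variation. The helper below is a hypothetical sketch using Python's standard `statistics` module; no accuracy values from the paper are assumed.

```python
import statistics
from typing import Dict, Sequence, Tuple


def summarize_runs(runs: Dict[str, Sequence[float]]) -> Dict[str, Tuple[float, float]]:
    """Return (mean, sample standard deviation) of accuracy for each method."""
    # statistics.stdev needs at least two runs per method.
    return {name: (statistics.mean(accs), statistics.stdev(accs))
            for name, accs in runs.items()}
```

It would be called with a mapping such as `{"CNN": [...], "SSD": [...], "Proposed": [...]}` holding the 10 per-run accuracies read from the figure.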

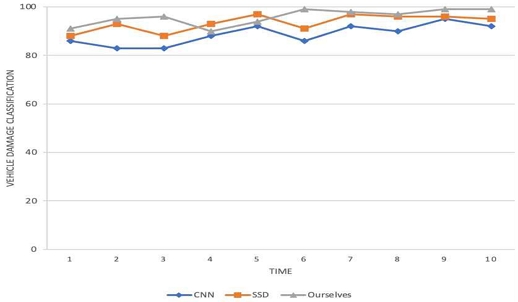

For damage classification, Fig. 8 compares the proposed method with the CNN and SSD methods. Using the same image dataset, we trained the damage classification model 10 times with each of the CNN, SSD, and proposed methods. Fig. 8 shows the accuracy of each run for each method; the proposed method achieves higher accuracy than the other methods.

We use accuracy, the confusion matrix, precision, recall, F1-score, and support as the performance metrics for each model. In this section, we describe the results for the vehicle part detection model and the damage classification model.
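The per-class metrics listed above follow the usual definitions, the same ones reported by scikit-learn's `classification_report`: precision = TP/(TP+FP), recall = TP/(TP+FN), F1 = 2PR/(P+R), and support is the number of ground-truth samples of the class. The sketch assumes predictions are already paired one-to-one with ground-truth labels; for the detection model, that pairing would first require matching predicted boxes to ground-truth boxes (for example by IoU), which is not shown here.

```python
from typing import Dict, Sequence, Tuple


def classification_report(y_true: Sequence[str], y_pred: Sequence[str]
                          ) -> Tuple[Dict[str, Dict[str, float]], float]:
    """Return per-class precision/recall/F1/support and the overall accuracy."""
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        # Support counts the ground-truth samples that belong to this class.
        per_class[label] = {"precision": precision, "recall": recall,
                            "f1-score": f1, "support": tp + fn}
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    return per_class, accuracy
```

With `y_true = ["door", "door", "wheel", "wheel"]` and `y_pred = ["door", "wheel", "wheel", "wheel"]`, the class "door" gets precision 1.0 and recall 0.5, and the overall accuracy is 0.75.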

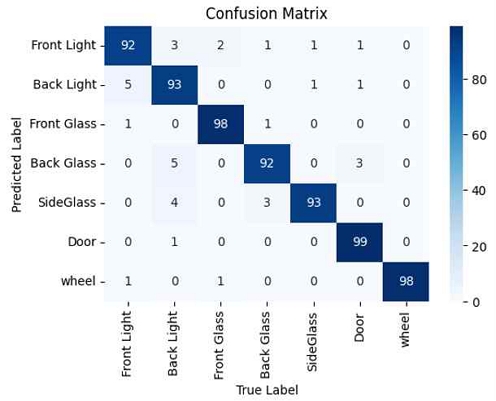

Fig. 9 shows the confusion matrix of the vehicle part detection model, including the number of correct detections for each class. From this figure, the per-class performance and the overall accuracy of the vehicle part detection model can be obtained.
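How a confusion matrix yields the overall accuracy can be shown in a few lines: correct predictions lie on the diagonal, so accuracy is the diagonal sum divided by the total count. The sketch uses the convention of rows for true classes and columns for predicted classes (as scikit-learn's `confusion_matrix` does); the axis convention of the figure itself is an assumption.

```python
from typing import List, Sequence


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: Sequence[str]) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes (in labels order)."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy_from_matrix(matrix: List[List[int]]) -> float:
    """Accuracy = diagonal (correct predictions) / total number of samples."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total
```

Off-diagonal cells show which classes are confused with each other, for example front glass predicted as side glass, which a single accuracy figure hides.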

Table 3 lists the accuracy, precision, recall, F1-score, and support, and Fig. 10 shows a result image from the vehicle part detection model, in which each part is detected in the input image.

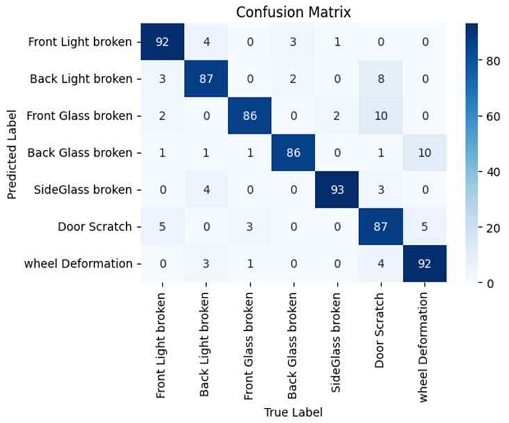

Fig. 11 shows the confusion matrix of the damage classification model, including the number of correct classifications for each class. From this figure, the per-class performance and the overall accuracy of the damage classification model can be obtained.

Table 4 lists the accuracy, precision, recall, F1-score, and support, and Fig. 12 shows a result image from the damage classification model, in which the damaged parts are detected and their damage types classified from the input image.

4-3 Analysis of the Proposed Model

In Table 5, we compare the efficiency of the proposed models with similar studies on the same test datasets, including, for the vehicle part detection model, Berwo et al.[13], which used a Convolutional Neural Network (CNN) method, and Cao et al.[14], which used a Single Shot MultiBox Detector (SSD) method.

Ⅴ. Conclusion

As vehicles have become more important in daily life, vehicle part detection and damage classification are being applied more extensively. This is driven by the application of deep learning and computer vision in the vehicle repair field, which offers users better services.

In this paper, we propose a method for vehicle parts detection and damage classification based on computer vision and deep learning techniques using YOLO, transfer learning and transformer models.

The proposed YOLO and transfer learning model quickly and accurately detects each part of the vehicle, and the proposed transformer model is able to classify the damage type of each detected part, which effectively improves the accuracy and robustness of the classification. To address current research challenges and better adapt to varying image quality in different environments, we use YOLO to detect each vehicle part and a transformer to classify damage types accurately. This approach improves accuracy.

According to the performance comparison, the proposed vehicle part detection model achieves 5% higher accuracy than Berwo et al.[13] and 3% higher accuracy than Cao et al.[14], and the proposed damage classification model achieves 12% higher accuracy than Berwo et al.[13] and 6% higher accuracy than Cao et al.[14].
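Taking the proposed models' accuracies from the Abstract (95% for part detection, 89% for damage classification) and reading the reported gains as absolute percentage-point differences, which the text does not state explicitly, the implied accuracies of the compared methods are:

```latex
\begin{aligned}
\text{Part detection:}\quad & A_{\mathrm{Berwo}} = 95 - 5 = 90\%, && A_{\mathrm{Cao}} = 95 - 3 = 92\% \\
\text{Damage classification:}\quad & A_{\mathrm{Berwo}} = 89 - 12 = 77\%, && A_{\mathrm{Cao}} = 89 - 6 = 83\%
\end{aligned}
```

These values are derived here only for illustration. The 3% and 6% gains over Cao et al.[14] are the same figures the Abstract reports as the improvement over the existing model.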

In future work, we plan to build on the models trained in this paper by collecting more images of varying quality and training new models to achieve higher damage classification performance.

Acknowledgments

This research was supported by the Tongmyong University Research Grants 2023 (2023A002).

References

- J. de Deijn, Automatic Car Damage Recognition using Convolutional Neural Networks, 2018 Internship report MSc Business Analytics, March 2018.

- B. Balci, Y. Artan, B. Alkan, and A. Elihos, “Front-View Vehicle Damage Detection Using Roadway Surveillance Camera Images,” in Proceedings of the 5th International Conference on Vehicle Technology and Intelligent Transport Systems, Heraklion, Crete: Greece, pp. 193-198, May 2019. [https://doi.org/10.5220/0007724601930198]

- C. T. Artan and T. Kaya, “Car Damage Analysis for Insurance Market Using Convolutional Neural Networks,” in Proceedings of Intelligent and Fuzzy Techniques in Big Data Analytics and Decision Making, Istanbul: Turkey, pp. 313-321, July 2019. [https://doi.org/10.1007/978-3-030-23756-1_39]

- K. Patil, M. Kulkarni, A. Sriraman, and S. Karande, “Deep Learning Based Car Damage Classification,” in Proceedings of 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun: Mexico, pp. 50-54, December 2017. [https://doi.org/10.1109/ICMLA.2017.0-179]

- O. Nacir, M. Amna, W. Imen, and B. Hamdi, “Yolo V5 for Traffic Sign Recognition and Detection Using Transfer Learning,” in Proceedings of 2022 IEEE International Conference on Electrical Sciences and Technologies in Maghreb (CISTEM), Tunis: Tunisia, pp. 1-4, October 2022. [https://doi.org/10.1109/CISTEM55808.2022.10044022]

- M. Zhang and S.-S. Shin, “AI-Based Vehicle Damage Repair Price Estimation System,” Journal of Digital Contents Society, Vol. 24, No. 12, pp. 3143-3152, December 2023. [https://doi.org/10.9728/dcs.2023.24.12.3143]

- J. S. Thomas, S. Ejaz, Z. Ahmed, and S. Hans, “Optimized Car Damaged Detection using CNN and Object Detection Model,” in Proceedings of 2023 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE), Dubai: United Arab Emirates, pp. 172-174, March 2023. [https://doi.org/10.1109/ICCIKE58312.2023.10131804]

- X. Liu, B. Dai, and H. He, “Real-Time On-Road Vehicle Detection Combining Specific Shadow Segmentation and SVM Classification,” in Proceedings of 2011 Second International Conference on Digital Manufacturing & Automation, Zhangjiajie: China, pp. 885-888, August 2011. [https://doi.org/10.1109/ICDMA.2011.219]

- M. Parhizkar and M. Amirfakhrian, “Recognizing the Damaged Surface Parts of Cars in the Real Scene Using a Deep Learning Framework,” Mathematical Problems in Engineering, Vol. 2022, 5004129, pp. 1-7, August 2022. [https://doi.org/10.1155/2022/5004129]

- W. Liqun, W. Jiansheng, and W. Dingjin, “Research on Vehicle Parts Defect Detection Based on Deep Learning,” Journal of Physics: Conference Series, Vol. 1437, 2020. [https://doi.org/10.1088/1742-6596/1437/1/012004]

- K. Thonglek, N. Urailertprasert, P. Pattiyathanee, and C. Chantrapornchai, “Vehicle Part Damage Analysis Platform for Autoinsurance Application,” ECTI Transactions on Computer and Information Technology (ECTI-CIT), Vol. 15, No. 3, pp. 313-323, December 2021. [https://doi.org/10.37936/ecti-cit.2021153.223151]

- S. Jayawardena, Image based Automatic Vehicle Damage Detection, Ph.D. Dissertation, Australian National University, Canberra: Australia, November 2013.

- M. A. Berwo, A. Khan, Y. Fang, H. Fahim, S. Javaid, J. Mahmood, ... and M. S. Syam, “Deep Learning Techniques for Vehicle Detection and Classification from Images/Videos: A Survey,” Sensors, Vol. 23, No. 10, 4832, May 2023. [https://doi.org/10.3390/s23104832]

- J. Cao, C. Song, S. Song, S. Peng, D. Wang, Y. Shao, and F. Xiao, “Front Vehicle Detection Algorithm for Smart Car Based on Improved SSD Model,” Sensors, Vol. 20, No. 16, 4646, August 2020. [https://doi.org/10.3390/s20164646]

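Author Biographies

July 2017: Henan University (Bachelor's degree)

May 2019: Pace University (Master's degree)

March 2022 to present: Ph.D. student, Department of Computer Media Engineering, Tongmyong University

※Research interests: AI, deep learning, big data, CNN

February 2001: Chungbuk National University, Department of Mathematics (Ph.D. in Science)

August 2004: Chungbuk National University, Department of Computer Engineering (Ph.D. in Engineering)

March 2005 to present: Professor, Department of Information Security, Tongmyong University

※Research interests: network security, deep learning, IoT, data analysis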