Design of User Emotion-based Recommendation System

Copyright ⓒ 2022 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Emotional intelligence computing refers to a computer’s ability to recognize and process human emotions through learning and adaptation, thus enabling more efficient human-computer interaction. Among emotional data, music and images, which are auditory and visual information, respectively, are considered important factors for successful marketing because the impressions they create are formed within a short time and last for a long time in memory, and they play a very important role in understanding and interpreting human emotions. In this study, we designed a system that recommends music and images according to the user’s emotional keyword (anger, sorrow, neutral, joy). The proposed system defines human emotions as four-step circumstances and uses a music/image ontology and an emotion ontology to recommend normalized music and images. The proposed system is expected to increase user satisfaction by recommending content attuned to the user’s emotion, taking individual characteristics into account.

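Abstract (translated from Korean)

Emotional intelligence computing includes the emotion recognition ability of a computer that processes human emotions through learning and adaptation, enabling more efficient human-computer interaction. Among emotional data, music and images, which are auditory and visual information, respectively, are regarded as important factors for successful marketing because they are formed in a short time and persist in memory for a long time, and they play a very important role in understanding human emotions and conveying information. In this study, we propose a system that recommends music and images in consideration of the user’s emotional keywords (anger, sorrow, neutral, joy). The proposed system defines human emotions as four-step circumstances and recommends normalized music and images using a music/image ontology and an emotion ontology. The proposed system is expected to increase user satisfaction by recommending content that matches the user’s emotions in consideration of individual characteristics.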

Keywords:

Emotion, Emotional Intelligence Computing, Ontology, Recommendation System, Feature Information

Keywords (translated from Korean):

Emotion, Emotional Intelligence Computing, Ontology, Recommendation System, Feature Information

Ⅰ. Introduction

Human sensibility manifests itself in various ways, even in response to the same external stimulus, according to individual standards established through the experiences of daily life. In the process of recognizing external stimuli, these personal standards are applied simultaneously rather than sequentially, as in logical calculations. This phenomenon may be compared to white (colorless) light emerging as rays of specific colors after passing through a colored filter. Of the many colors that make up white light, we see only those that our own filter lets through. Likewise, sensory stimuli that can generate different sorts of emotions acquire specific emotions as they pass through our own emotional filter. The emotional filter itself may take on various colors as the accompanying emotions accumulate. Therefore, white light, that is, the raw information received through our sensory organs, generates different emotions while passing through a filter that changes depending on individual and situational characteristics [1].

Emotional intelligence computing enables more efficient human-computer interaction by allowing a computer to recognize and process human emotions by means of learning and adaptation [2]. Among various types of emotion information, music and images, which are auditory and visual information, respectively, are considered important factors for successful marketing because the impressions they create are formed spontaneously and linger in memory for a long period of time, and they thus play a very important role in understanding and interpreting human emotions [3].

In this paper, in order to classify emotional recognition information, emotional colors and emotional vocabulary were matched in the same space using correspondence analysis and factor analysis [4]. Music and colors were linked by sequentially matching the colors and frequencies generated by combining the three primary colors with the wavelength ratios of the 12-tone equal temperament chromatic scale. The extracted music emotion information and image emotion information were then matched with each of the four keywords using the emotion ontology, and an appropriate image was selected for recommendation. That is, a system was designed that recommends music and images attuned to the user’s emotional keyword (anger, sorrow, neutral, joy) by extracting appropriate feature information from the image.

Ⅱ. Related Research

Human emotion does not lend itself well to an explicit description. However, in everyday life, we constantly express our emotions in response to specific stimuli through verbal or implicit behavior. Antonio Damasio viewed emotion as a “bodily state” which is amenable to neurophysiological study, unlike personal and subjective “feeling” [5]. In addition, a number of recent studies have revealed that emotion has a critical effect on cognitive processes. Many researchers have argued that emotions affect the rational processes of decision-making, creativity, and problem-solving, and have presented empirical evidence that they also affect memory [6].

Ongoing research on emotion processing is progressing in two directions depending on the scope of interpretation of the meaning of “emotion.” One examines internal human “emotion” based on the fine-grained classification of emotion originating from psychology. The main focus of research in this direction is on psychology, represented by Plutchik, Russell, and Ekman [7-9]. A Korean study used Plutchik’s emotion model to build an emotion ontology, attempting to redefine Plutchik’s emotion wheel using user utterances in a dialog system for emotion classification. Another Korean study started from the East-West differences in basic human emotions and attempted to construct an emotional vocabulary based on seven Eastern emotions [10].

The other research direction for emotion processing is sentiment analysis, that is, positive/negative opinion mining, which provides positive or negative evaluation indicators for a specific topic [11]. This approach is widely used not only for product review analysis in online shopping malls, for example, but also for real-time measurement of public preferences for new policies, movies, and people. In particular, research on music recommendation using sentiment analysis has become mainstream among Korean researchers. A typical example is Daumsoft, which developed a mining search system that extracts sentence-level emotion for use as a search term and applied it to the sentiment-based music search service of “Melon”.

Ⅲ. System Configuration and Design

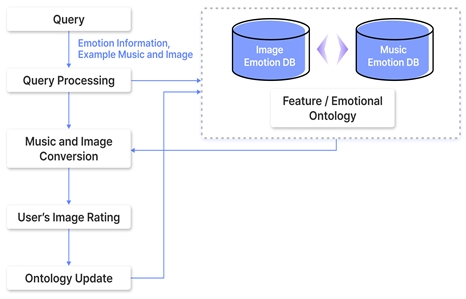

The user emotion-based music and image recommendation system proposed in this paper defines human emotions as four-step circumstances in order to recommend well-matched music and images for four basic human emotions (anger, sorrow, neutral, joy). To enable a targeted recommendation of normalized music and images, a music/image ontology and an emotion ontology were used to find the music and images that best match each emotional keyword, image feature information was extracted, and similarities were measured to obtain the desired results. Figure 1 illustrates the schematic diagram of this system.

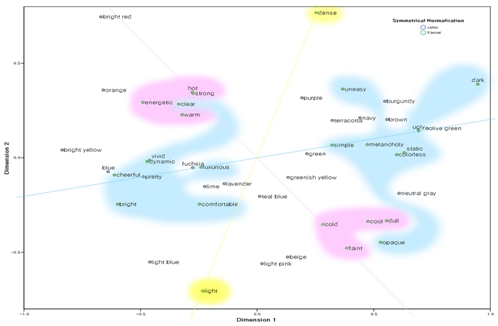

Image emotions were extracted using the web-based RGB color mode. The 20-color emotion model set out in “The Meaning of Color” by HP (Hewlett-Packard) was selected as the representative element for the emotion measurement scale, and a questionnaire survey with a 5-point scale was conducted. Factor analysis and correspondence analysis were performed on the survey results, and an emotion space was created for the individual colors. The association between emotional vocabulary and emotional elements is revealed when each pair of emotional element and vocabulary extracted through correspondence analysis is placed in an emotion space. The same applies to the relationships between emotional words. Factor analysis is a statistical method that reduces a large number of observed variables to a small number of factors with theoretical or contextual significance. Here, factor analysis was used to extract independent, essential factors from the large number of emotional words and their complex correlations. In addition, the relationships between the words of the vocabulary set for each surveyed emotional element were identified, and representative emotional words were extracted. An emotion space was created by plotting the factor score of each of these representative emotional words. Table 1 presents the distance between each RGB and the origin of the color model.

RGB values were extracted from given points of the image and stored in the database. To determine the emotion level as a function of color distribution, the RGB values of each color model were plotted as x, y, z coordinates in a three-dimensional space, the distance from each extracted color to every color model was calculated, and each extracted color was assigned to the nearest color model. The resulting color model distribution of the image was stored in the 20 color model fields of the database.
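The nearest-color assignment described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the entries of `COLOR_MODELS` are hypothetical stand-ins (the 20 reference colors are not listed in this text), and the function names are assumptions introduced for the example.

```python
import math

# Hypothetical stand-ins for the reference colors; the actual 20 color
# models used in the paper are not listed in the text.
COLOR_MODELS = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
    "white": (255, 255, 255),
    "black": (0, 0, 0),
}


def nearest_color_model(rgb, models=COLOR_MODELS):
    """Return the name of the model color closest to rgb (Euclidean distance in RGB space)."""
    return min(models, key=lambda name: math.dist(rgb, models[name]))


def color_model_distribution(pixels, models=COLOR_MODELS):
    """Return the fraction of sampled pixels assigned to each color model."""
    counts = {name: 0 for name in models}
    for rgb in pixels:
        counts[nearest_color_model(rgb, models)] += 1
    total = len(pixels) or 1  # avoid division by zero for an empty sample
    return {name: count / total for name, count in counts.items()}
```

The returned dictionary plays the role of the per-image color model fields: one share per reference color, summing to 1 for a non-empty sample.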

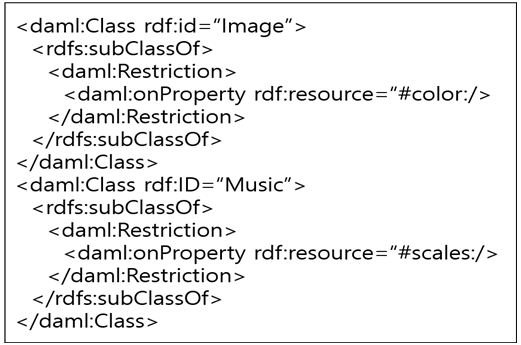

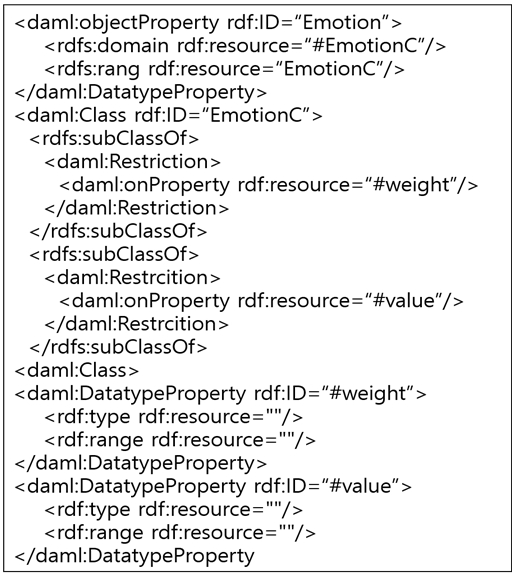

In this paper, image color information and music scale information were defined as data types using XML Schema, and these were used for ontology construction so that the proposed system can use the resulting information to recommend music and images attuned to the user’s sentiment. Figure 2 and Figure 3 show parts of the music/image ontology and the emotion ontology, respectively.

The music/image ontology is expressed by the scale and color characteristics and the emotion ontology is composed of two attributes: value and weight, which store emotion information and its weight, respectively. In the attribute value, representative emotion information is stored, as well as emotion information with potential for expansion according to search. In the attribute weight, the number of the users sharing the given emotion is stored as a numerical value. Figure 4 illustrates the search process for emotion information.

To enable image feature extraction according to the same criteria, the longer side of the image was fixed at 180, and the size was normalized to 180 × N or N × 180. In order to minimize the loss of spatial information contained in the size-normalized image, the image was divided into 9 regions (3 × 3), and representative feature values were extracted for each region. The RGB model is poorly suited to calculating the similarity between two colors because the R, G, and B components interfere with one another in the RGB color space; the image was therefore converted into the HSV color space to extract color feature information. The image color was converted into H (hue), S (saturation), and V (value) using Equation (1).

| (1) |
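The size normalization, the 3 × 3 partition, and the color-space conversion can be sketched as below. The function names are assumptions made for this illustration, and the conversion relies on the standard-library `colorsys.rgb_to_hsv`, a common RGB-to-HSV formulation that may differ in detail from the paper's Equation (1).

```python
import colorsys


def normalized_size(width, height, longer_side=180):
    """Scale (width, height) so the longer side equals longer_side, keeping the aspect ratio."""
    scale = longer_side / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def grid_regions(width, height, rows=3, cols=3):
    """Return (left, top, right, bottom) boxes of a rows x cols grid, row by row."""
    boxes = []
    for r in range(rows):
        for c in range(cols):
            boxes.append((c * width // cols, r * height // rows,
                          (c + 1) * width // cols, (r + 1) * height // rows))
    return boxes


def rgb_to_hsv_degrees(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, saturation in 0..1, value in 0..1)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360, s, v
```

For example, a 360 × 240 image would be normalized to 180 × 120 and split into nine 60 × 40 regions, each of which can then be summarized by its HSV values.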

Furthermore, in order to extract the representative value of color feature information for each region, the maximum H value of the HSV color space and the maximum value among the values quantized to 64 colors for each region were used as the representative feature value. Quantization is a method of selecting the optimum color from the original image, in which the R, G, and B values for each pixel are converted into index values for 64-color quantization using Equation (2).

| (2) |
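One common way to quantize 8-bit RGB into 64 colors keeps the two most significant bits of each channel. The sketch below uses that scheme as an assumption; it is not necessarily the index formula of Equation (2). Reading "the maximum value among the quantized values" as the most frequent bin in a region is likewise an interpretation made for this example.

```python
def quantize_64(r, g, b):
    """Map 8-bit RGB to an index in 0..63 using the top 2 bits of each channel."""
    return ((r >> 6) << 4) | ((g >> 6) << 2) | (b >> 6)


def dominant_index(pixels):
    """Return the most frequent quantized color index among a region's pixels."""
    counts = [0] * 64
    for r, g, b in pixels:
        counts[quantize_64(r, g, b)] += 1
    # Ties are resolved in favor of the lowest index.
    return max(range(64), key=counts.__getitem__)
```

Calling `dominant_index` once per region would give nine representative color indices per image under these assumptions.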

For shape feature detection, a Laplacian (second-derivative) operator was used so that the contour line at the center of a closed contour is revealed. To extract a clear contour as the representative value of each region without mistaking image noise for a contour, a cutoff was applied to the extracted values to convert them into a binary image. The Laplacian of a two-dimensional function f(x, y) can be calculated with Equation (3).

$$\nabla^2 f(x, y) = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2} \tag{3}$$
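On a pixel grid, the Laplacian is usually approximated with finite differences. The sketch below assumes the 4-neighbour discrete Laplacian and a caller-supplied `cutoff`; the paper does not state which kernel or threshold was used, so both are illustrative choices.

```python
def laplacian_edges(gray, cutoff):
    """Return a binary edge map from the 4-neighbour discrete Laplacian.

    gray is a 2D list of intensities; border pixels are left at 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1]
                   + gray[y][x + 1] - 4 * gray[y][x])
            # Responses weaker than the cutoff are treated as noise.
            edges[y][x] = 1 if abs(lap) >= cutoff else 0
    return edges
```

Raising `cutoff` suppresses weak responses, which corresponds to the noise-rejection step described above.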

As a measure of image similarity, the distances between image feature values are generally used. Here, the distance is obtained with the city-block distance function of Equation (4), which sums absolute differences instead of the squared differences used in the Euclidean distance. A smaller distance indicates a higher image similarity.

$$D(Q, I) = \sum_{i}\sum_{j}\left| f_{ij}(Q) - f_{ij}(I) \right| \tag{4}$$

Q is the query image, I is an image in the database, $f_{ij}(Q)$ is each feature value of the query image, and $f_{ij}(I)$ is the corresponding feature value of the database image. The retrieved images were displayed on the screen in decreasing order of similarity. In addition, to quantify the similarity to the retrieved image as a percentage (up to 100%), Equation (5) was used to calculate similarity from the obtained distance values.
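A minimal sketch of city-block matching follows. It assumes each image is represented as a list of regions, each holding a list of numeric feature values; that layout and the function names are assumptions for illustration only.

```python
def city_block_distance(query, candidate):
    """Sum of absolute feature differences over all regions and features."""
    return sum(abs(q - c)
               for q_region, c_region in zip(query, candidate)
               for q, c in zip(q_region, c_region))


def rank_by_similarity(query, database):
    """Return database keys ordered from most similar (smallest distance) to least."""
    return sorted(database,
                  key=lambda name: city_block_distance(query, database[name]))
```

Sorting by ascending distance yields the same order as sorting by descending similarity, which is how the retrieved images are presented.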

| (5) |

Fq is the feature information value of the query image, and Fd is the feature value of the image stored in the database. For the distance value of a feature information value, similarity is calculated as the sum of the distance values of the nine regions (i) partitioned in the image.

A questionnaire on “color-driven emotional vocabulary” composed of a semantic differential rating scale designed to determine color-driven emotions was distributed to 358 randomly selected subjects (182 males, 176 females) to measure the emotional scale of image emotion information. The data collected were analyzed using the emotional vocabulary reading program developed based on the results of factor analysis and correspondence analysis. Similar answers were clustered, as illustrated in the schematic representation in Figure 5.

As shown in Figure 5, emotional elements and emotional vocabulary are placed in a two-dimensional space, from which their coordinates can be obtained. These coordinates are used to measure distance, which serves as a measure of the relationship between the emotional vocabulary and the matched emotional elements. A smaller distance between coordinates indicates a higher correlation (inverse proportion), and a larger share of a color in the image indicates a higher significance (direct proportion). Therefore, the ratio was measured using the inverse distance, as expressed by Equation (6).

$$r_{ik} = \frac{1 / d_{ik}}{\sum_{i'=1}^{20} 1 / d_{i'k}} \tag{6}$$

Equation (6) yields the ratio, written here as $r_{ik}$, between emotional vocabulary (i) and emotional element (k), where $d_{ik}$ is the distance between the actual emotional vocabulary (i) and the emotional element (k), and the denominator is the sum of the inverse distances over the 20 emotional words.
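The inverse-distance ratio can be illustrated with a short sketch. It assumes all distances are strictly positive and normalizes over whatever list it is given (20 words in the paper, three in the check below); the function name is hypothetical.

```python
def inverse_distance_ratios(distances):
    """Normalize inverse distances so the ratios sum to 1.

    distances holds the distance from each emotional word to one emotional
    element; every distance must be strictly positive.
    """
    inverses = [1.0 / d for d in distances]
    total = sum(inverses)
    return [inv / total for inv in inverses]
```

With distances 1, 2, and 4, the closest word receives the largest share (4/7), reflecting the inverse relationship between distance and correlation.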

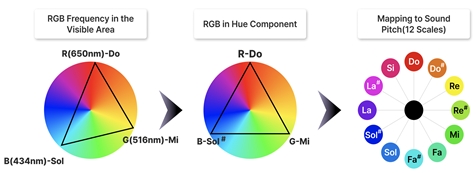

Color and sound, which are key emotion information for understanding and identifying emotions, share the characteristic that both are waves. That is, waves provide a fundamental clue for connecting color and sound. Wavelength and frequency are inversely proportional to each other and are therefore mathematically interconvertible physical quantities. Relative to Do, the wavelengths of Mi and Sol are 4/5 and 2/3, respectively, giving a wavelength ratio of 1 : 4/5 : 2/3. This ratio closely matches that of 650 nm, 520 nm, and 433 nm, the wavelengths of the three primary colors red, green, and blue.

Color and sound physically share wave properties such as resonance, amplification, interference, and cancellation, and the frequency ratio of Do-Mi-Sol is almost identical to that of red-green-blue. From this, a frequency conversion formula can be inferred that converts a wavelength of the visible light spectrum into a frequency of the 12-tone equal temperament scale, taken as the fundamental frequency. Also, the frequency band of the visible light spectrum (390–750 THz) spans almost exactly one octave, and the audible frequency band (20 Hz–20 kHz) corresponds to about 10 octaves, demonstrating the correspondence between sound and light [12].
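The numerical claims above can be checked with a few lines of arithmetic. The sketch uses only the figures quoted in the text; the variable names are illustrative.

```python
import math

do_mi_sol = (1.0, 4 / 5, 2 / 3)   # wavelength ratios relative to Do
rgb_nm = (650.0, 520.0, 433.0)    # red, green, blue wavelengths quoted in the text

# Express the RGB wavelengths relative to red and compare with Do-Mi-Sol.
rgb_ratio = tuple(w / rgb_nm[0] for w in rgb_nm)
max_gap = max(abs(a - b) for a, b in zip(do_mi_sol, rgb_ratio))

# An octave is a factor of two, so the octave count of a band is log2(high / low).
visible_octaves = math.log2(750 / 390)
audible_octaves = math.log2(20_000 / 20)
```

Under these figures the two ratio triples differ by less than 0.001, the visible band spans about 0.94 octaves, and the audible band about 9.97 octaves.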

Color has three primary properties, hue, brightness (value), and saturation, through which colors can be distinguished, expressed, and conveyed. Sound also has three primary properties, loudness, pitch, and tone, which differentiate one sound from another. In this paper, color-sound conversion was implemented by matching image hue, brightness, and saturation with sound pitch, octave, and tone (harmony), synthesizing the correspondingly converted sound elements, and converting the result into a wave format file. In other words, instead of a simple conversion based on a frequency conversion formula inferred from the principle of color and sound conversion, this paper focuses on mapping the three sound elements to the color elements of the HSI (hue-saturation-intensity) color model. Furthermore, the HSI color histogram was used to extract the three sound elements.

Although the typical RGB frequencies in the visible spectrum match the frequency ratio of Do-Mi-Sol, the R, G, and B components of the HSI color model are separated by 120 degrees. Therefore, the hue components were divided into 30-degree segments and mapped to the 12 tones of the scale. In other words, instead of matching color and sound to the 12-tone frequency ratio by applying the frequency conversion formula, a relatively simple and intuitive method was used in which colors were grouped in 30-degree steps to match the 12-tone scale.

Figure 6 shows the process of mapping from the frequency components of the visible light spectrum to the color components in the HSI color model and converting the result into the scale component representing the pitch.

When an arbitrary color image is entered, it is analyzed pixel by pixel to extract RGB information. The extracted RGB information is converted into a coordinate system based on the three primary properties of color, such as HSI. From the converted HSI values, a histogram is obtained by dividing the horizontal pixel values of the hue channel into 12 groups, and the maximum of the histogram is matched with the sound pitch. The pixel values of the saturation channel were matched in the same way with the harmonic frequency components that affect the tone.
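The hue-to-pitch step can be sketched as a 12-bin histogram over hue angles. The assignment of the first bin (0–30 degrees, red) to C (Do) and the use of `NOTE_NAMES` in chromatic order are assumptions for illustration; the text does not specify which hue segment maps to which tone.

```python
# Assumed mapping: bin 0 (hue 0-30 degrees) corresponds to C, then chromatic order.
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hue_histogram(hues_deg, bins=12):
    """Count hue angles (in degrees) falling into equal-width bins over 0..360."""
    counts = [0] * bins
    width = 360 / bins
    for hue in hues_deg:
        counts[int((hue % 360) // width)] += 1
    return counts


def dominant_pitch(hues_deg):
    """Return the note name of the most populated 30-degree hue bin."""
    counts = hue_histogram(hues_deg)
    return NOTE_NAMES[counts.index(max(counts))]
```

The same histogram routine could be applied to the saturation channel to pick harmonic components, with a mapping chosen accordingly.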

An optimal music matching list is established by analyzing the data inferred from matching the analyzed color measurement values with the musical scale used as an indicator. Table 2 lists the music matching indicators based on emotional keywords.

Ⅲ. System Implementation Results and Performance Evaluation

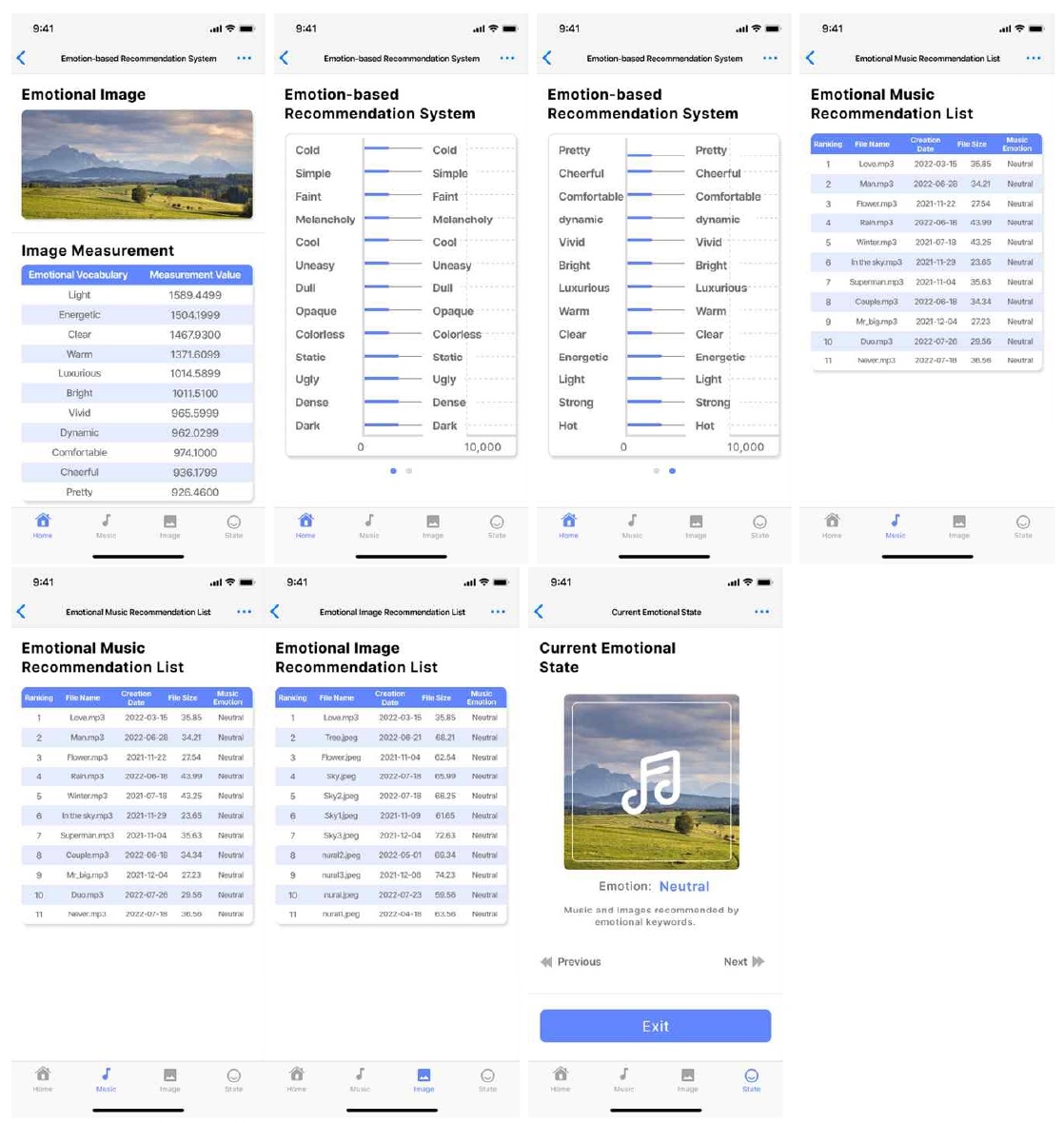

The performance of the proposed recommendation system was evaluated using the widely used mean absolute error (MAE). The accuracy of a recommendation system is determined by comparing the user’s predicted preference with the actually measured preference for a new item. This is done by checking how close, on average, the predicted evaluation values are to the user’s actual evaluation values. As the data set for the experiment, an emotion-based content (music, image) data set built with sound and emotion information was used.

The experiment was conducted by randomly removing 20% of the original data set consisting of emotion-based content (image, music) data and using the remaining 80% to predict the content of the removed 20%.

The MAE, the average absolute error between two groups of values, indicates how close the predicted evaluation values are, on average, to the user’s actual evaluation values. The performance of the recommendation system is evaluated by the MAE value, where a smaller value indicates higher performance; that is, the closer the MAE is to zero, the higher the prediction accuracy of the recommendation system. Equation (7) defines the MAE.

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left| p_i - q_i \right| \tag{7}$$

$p_i$ is the actual preference of user p, $q_i$ is the predicted preference of user p, and n is the number of contents actually used by user p.
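The evaluation procedure, a random 80/20 hold-out followed by the mean absolute error between actual and predicted preferences, can be sketched as follows. The function names, the fixed `seed`, and the rating values in the usage note are illustrative assumptions, not data from the experiment.

```python
import random


def mean_absolute_error(actual, predicted):
    """Average of |p_i - q_i| over paired actual and predicted preferences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - q) for p, q in zip(actual, predicted)) / len(actual)


def holdout_split(items, test_fraction=0.2, seed=0):
    """Randomly split items into (train, test), holding out test_fraction of them."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```

For instance, actual ratings of 4, 3, and 5 against predictions of 3, 3, and 4 give an MAE of 2/3; a perfect predictor would score 0.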

In this paper, the MAE performance evaluation algorithm was normalized. The value of MAE ranges from 0 to 1 (0 = 0% match; 1 = 100% match). Equation (8) was obtained by applying this normalization to Equation (7).

| (8) |

MAX and MIN are the maximum and minimum values of pi - qi, respectively.

Table 3 outlines the results of the recommendation system’s performance evaluation for each emotion obtained using the normalized MAE algorithm. With an average accuracy of 87.05%, the performance evaluation results confirm the high performance of the recommendation system proposed in this study.

The experimental results were also evaluated by measuring the user satisfaction with the search results based on the emotion information provided by the recommendation system as a measure for the reliability of the emotion information as perceived by the user. To this end, 50 sample users were selected, four emotional keywords were presented to them, and user satisfaction with the recommendation results was evaluated.

User satisfaction with the color emotion information conveyed by the recommended music and images was surveyed, and the average user satisfaction amounted to 85.2%. This indicates a fairly high similarity between the recommendation results based on emotion information and the emotion perceived by the user.

As presented in Figure 7, the user’s music emotion information and image emotion information are standardized as a 4-step process by which music and images are searched based on the user's emotional keywords and the search results are recommended in decreasing order of recommendation.

Ⅳ. Conclusions

In this paper, in order to standardize emotion information into four stages (anger, sorrow, neutral, and joy), the extracted data were standardized through factor analysis and correspondence analysis. An image recommendation system was designed based on the emotion ontology and image feature data. Using the emotion information thus obtained, we attempted to implement a service that recommends content (images, music) suited to each individual according to the user’s emotion information. The performance of the proposed system was evaluated using the MAE, and an average accuracy of 87.09% was obtained. Moreover, 50 sample users were selected and four emotional keywords were presented to them to evaluate user satisfaction with the recommendation results, which revealed an average user satisfaction of 85.2%. This indicates a fairly high similarity between the results of the proposed recommendation system and the actually perceived sentiment. Therefore, the proposed recommendation system is expected to contribute to increasing user satisfaction by recommending content attuned to the user’s emotions.

In future research, we plan to further improve recommendation accuracy by bringing the recommendations closer to the user’s actual emotion. In addition, a much higher degree of matching accuracy may be achieved by basing the recommendation system on brain waves or on situation recognition from the user’s biometric information. In this respect, it is necessary to analyze and study various algorithms to further increase the recognition rate, as shown in the emotion recognition results. We therefore plan to implement a system with a more stable recognition rate using emotions extracted from facial expressions and voice.

Acknowledgments

This study was supported by research funds from Chosun University, 2021.

References

[1] A. Kim, E. H. Jang, and J. H. Sohn, “Classification of negative emotions based on arousal score and physiological signals using neural network,” Science of Emotion and Sensibility, Vol. 21, No. 1, pp. 177-186, March, 2018. https://doi.org/10.14695/KJSOS.2018.21.1.177

[2] P. Wang, L. Dong, W. Liu, and N. Jing, “Clustering-based emotion recognition micro-service cloud framework for mobile computing,” IEEE Access, Vol. 8, pp. 49695-49704, March, 2020. https://doi.org/10.1109/ACCESS.2020.2979898

[3] A. M. Badshah, N. Rahim, N. Ullah, J. Ahmad, K. Muhammad, M. Y. Lee, S. I. Kwon, and S. W. Baik, “Deep features-based speech emotion recognition for smart affective services,” Multimedia Tools and Applications, Vol. 78, No. 5, pp. 5571-5589, March, 2019. https://doi.org/10.1007/s11042-017-5292-7

[4] T. Y. Kim, H. Ko, S. H. Kim, and H. D. Kim, “Modeling of recommendation system based on emotional information and collaborative filtering,” Sensors, Vol. 21, No. 6, March, 2021. https://doi.org/10.3390/s21061997

[5] Z. T. Liu, Q. Xie, M. Wu, W. H. Cao, Y. Mei, and J. W. Mao, “Speech emotion recognition based on an improved brain emotion learning model,” Neurocomputing, Vol. 309, pp. 145-156, June, 2018. https://doi.org/10.1016/j.neucom.2018.05.005

[6] T. Y. Kim, K. S. Lee, and Y. E. An, “A study on the Recommendation of Contents using Speech Emotion Information and Emotion Collaborative Filtering,” Journal of Digital Contents Society, Vol. 19, No. 12, pp. 2247-2256, December, 2018. https://doi.org/10.9728/dcs.2018.19.12.2247

[7] R. Plutchik, “Measuring emotions and their derivatives,” In The measurement of emotions, pp. 1-35, 1989. https://doi.org/10.1016/B978-0-12-558704-4.50007-4

[8] T. Y. Kim, H. Ko, and S. H. Kim, “Data Analysis for Emotion Classification Based on Bio-Information in Self-Driving Vehicles,” Journal of Advanced Transportation, Vol. 2020, pp. 1-11, January, 2020. https://doi.org/10.1155/2020/8167295

[9] J. L. Tracy, and D. Randles, “Four models of basic emotions: a review of Ekman and Cordaro, Izard, Levenson, and Panksepp and Watt,” Emotion Review, Vol. 3, No. 4, pp. 397-405, September, 2011. https://doi.org/10.1177/1754073911410747

[10] H. W. Jung, and K. Nah, “A Study on the Meaning of Sensibility and Vocabulary System for Sensibility Evaluation,” Journal of the Ergonomics Society of Korea, Vol. 26, No. 3, pp. 17-25, August, 2007. https://doi.org/10.5143/JESK.2007.26.3.017

[11] Y. Zhang, Y. Wang, and S. Wang, “Improvement of Collaborative Filtering Recommendation Algorithm Based on Intuitionistic Fuzzy Reasoning Under Missing Data,” IEEE Access, Vol. 8, pp. 51324-51332, March, 2020. https://doi.org/10.1109/ACCESS.2020.2980624

[12] S. I. Kim, and J. S. Jung, “A Basic Study on the System of Converting Color Image into Sound,” Journal of the Korean Institute of Intelligent Systems, Vol. 20, No. 2, pp. 251-256, April, 2010. https://doi.org/10.5391/JKIIS.2010.20.2.251

About the Author

2003 : Department of Computer Science and Statistics, Graduate School of Chosun University (M.S. Degree)

2015 : Department of Computer Science and Statistics, Graduate School of Chosun University (Ph.D. Degree)

2012~2015 : Director of Shinhan Systems Co., Ltd.

2012~2017 : Adjunct Professor, Gwangju Health University

2018~now : Associate Professor, Chosun University

※Research Interest: AI, Big Data, Emotion Technology, IoT