Design of a LiDAR sensor to help AGVs avoid obstacles

Copyright ⓒ 2021 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

As the 4th industrial revolution progresses, smart factories will be able to offer customized and flexible manufacturing systems of great value. As manufacturing systems become smarter and more automated, Automated Guided Vehicles (AGVs) are becoming one of the most important technologies in this process. In practice, however, AGVs usually require magnetic or optical tape to guide them, which can be inconvenient and costly. LiDAR has been widely proposed to solve this problem. However, existing LiDAR for AGVs still experiences the deadlock problem, which is caused by the difference in processing speed between the SLAM algorithm and the LiDAR during data matching. Solving this problem therefore requires a more active approach than commercial LiDAR currently takes. In this paper, we propose a LiDAR with a horizontal field of view of 270°, an angular resolution of 0.1°, and a scan rate of 10 Hz to 30 Hz. The proposed LiDAR can compensate for distance errors using the reflectance of an object. In addition, technology optimized for AGV applications has been developed and applied to the proposed LiDAR. Through these advances, high-accuracy driving performance can be obtained. In the future, the proposed technology is expected to promote the adoption of smart factories within the manufacturing industry.

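Abstract (Korean)

As the 4th industrial revolution progresses, smart factories have become able to provide customized, flexible manufacturing systems. As manufacturing systems become smarter and incorporate automation, the Automated Guided Vehicle (AGV) has become one of the most important technologies in the process. However, real AGVs generally require magnetic or optical tape, which raises problems of cost and inconvenience. LiDAR has been proposed to solve these problems. However, existing LiDAR for AGVs still suffers from the deadlock problem, which is caused by the difference in processing speed during data matching between the SLAM algorithm and the LiDAR. Solving this problem therefore requires a more active response than current commercial LiDAR provides. This paper proposes a LiDAR with a horizontal field of view of 270°, an angular resolution of 0.1°, and a scan rate of 10 Hz to 30 Hz. The proposed LiDAR can compensate for distance error using the reflectance of an object. In addition, technology optimized for AGV applications was developed and applied to the proposed LiDAR. These advances make it possible to obtain high-accuracy driving performance. In the future, the proposed technology is expected to promote the development of smart factories as well as of the manufacturing industry.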

Keywords:

Automated Guided Vehicle, Light Detection, Simultaneous localization and mapping, LiDAR, sensor

Keywords (Korean):

Automated guided vehicle, light detection, simultaneous localization and mapping, LiDAR, sensor

Ⅰ. Introduction

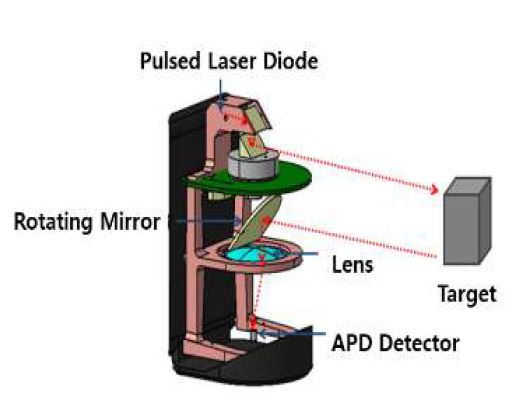

As transport systems in the manufacturing and warehousing fields develop, AGVs are becoming more and more attractive. The most important characteristic of AGVs is their ability to move automatically indoors. AGVs operate as material transport systems that move under their own power along defined paths. It is therefore important that they can rapidly evaluate the external environment and analyze the data they collect about it. To this end, interest in laser-based LiDAR sensors for AGVs is increasing. Early AGVs needed metal or magnetic lines to guide them. Later AGVs adopted LiDAR technology for automatic driving, and recently, active automatic driving systems that do not use lines have been developed. [1], [2], [3] With these systems, reliable obstacle detection is one of the most important requirements for the success of AGVs. For AGV obstacle detection, the LiDAR needs to achieve precise detection at a distance of 30 m over a range of 180 degrees. Generally, a LiDAR sensor system consists of a Pulsed Laser Diode Driver, an APD (Avalanche Photo Diode) Detector, a high-speed signal processor, and an optical system. A laser signal is transmitted, reflects off the surfaces of objects in the surrounding environment, and is collected back at the LiDAR, where it is converted to an electronic signal. The flight time is then obtained from the collected signal via a TDC (Time-to-Digital Converter). [4]

In this paper, we designed a system consisting of a Pulsed Laser Diode Driver with over 70 W peak power, an APD Detector with a wide 490 MHz bandwidth, an optical system that combines the transmitter and receiver units, and a signal processing board, in order to improve the sensor's detection ability. Angular resolution is one of the important factors in an AGV system's ability to detect obstacles: a smaller angular resolution gives better mapping resolution.

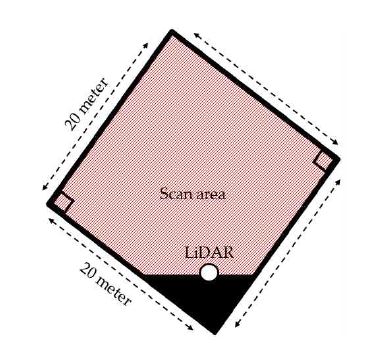

We also developed a circuit and an algorithm to compensate for walk error using reflectivity. The sensor's performance is verified by comparing it with a commercial LiDAR in a 20 m × 20 m test space. [5], [6], [7]

Ⅱ. Main Subject

2-1 PRINCIPLES BEHIND LIDAR SENSORS

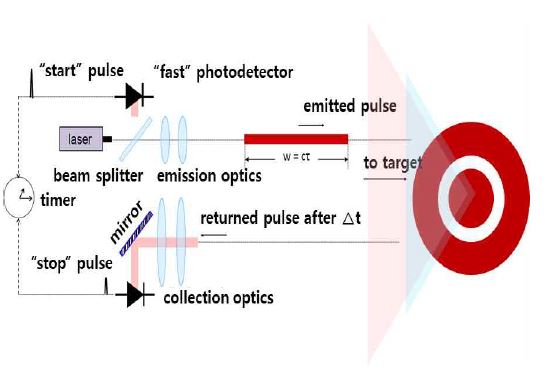

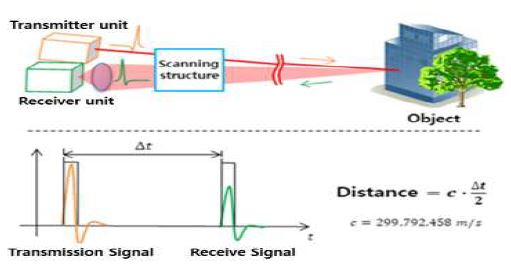

LiDAR sensors use a high-power pulsed laser to rapidly obtain 3D spatial data, calculated from the travel time of light between the sensor and the object. To calculate the distance travelled by the light, we need to know the travel time and the speed of light. In equation (1), the travel time is the round-trip travel time between the sensor and the object. [8], [9], [10]

$$2D = c \cdot \Delta t \tag{1}$$

the speed of light : c,

round trip distance of light : 2D,

round trip time of light : Δt

The only variable in equation (1) is time, so if it is possible to acquire the round trip time of light, the associated distance can easily be obtained. [11]
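The time-of-flight relation can be illustrated with a short sketch. It assumes the usual form 2D = c·Δt for equation (1); the function names and the use of the vacuum speed of light are illustrative choices, not taken from the paper.

```python
# Minimal time-of-flight sketch, assuming 2D = c * dt (round trip).
C = 299_792_458.0  # speed of light in vacuum, m/s (air is within ~0.03 %)


def distance_from_round_trip(delta_t_s: float) -> float:
    """Return the one-way distance D in metres for a round-trip time in seconds."""
    if delta_t_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return C * delta_t_s / 2.0


def round_trip_time(distance_m: float) -> float:
    """Return the round-trip time in seconds for a one-way distance in metres."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / C


if __name__ == "__main__":
    for d in (1.0, 10.0, 30.0):
        dt = round_trip_time(d)
        print(f"{d:5.1f} m -> {dt * 1e9:7.2f} ns round trip")
```

At the 30 m range targeted here, the round trip takes roughly 200 ns, which is why timing resolution well below a nanosecond is needed for centimetre-level accuracy.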

2-2 ANALYSIS OF SIGNAL TRANSMISSION AND RECEPTION

Most of the light energy emitted by the laser is lost. Due to the nature of light, the remaining energy is inversely proportional to the square of the distance travelled, and the recorded reflected signal is proportional to the reflectivity of the detected object's surface. Equation (2) gives a mathematical expression for transmitting and receiving a signal with a LiDAR sensor.

| (2) |

Equation (2) relates the light energy of emission to the light energy of return. The returned energy is inversely proportional to the square of the travel distance R in the atmosphere and proportional to the area of light collected. The area of light entering the receiver is determined by the size and performance of the lens. The reflectivity of the material surface, Re, determines how much energy is reflected and depends greatly on each individual object's characteristics. Object detection is difficult if the object strongly absorbs or transmits light.
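The scaling described above can be sketched numerically. The snippet assumes only the proportionalities stated in the text (returned energy proportional to reflectivity and to 1/R²) and reports energy relative to a reference target; it is not the paper's equation (2), whose constants are not reproduced here, and all names are hypothetical.

```python
def relative_return_energy(
    distance_m: float,
    reflectivity: float,
    ref_distance_m: float = 1.0,
    ref_reflectivity: float = 1.0,
) -> float:
    """Return received energy relative to a reference target.

    Assumes E_return is proportional to reflectivity / R**2, with the
    emitted energy and receiver aperture held fixed.
    """
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    if reflectivity < 0 or ref_reflectivity <= 0:
        raise ValueError("reflectivities must be non-negative (reference > 0)")
    return (reflectivity / ref_reflectivity) * (ref_distance_m / distance_m) ** 2


if __name__ == "__main__":
    # A dark target (10 % reflectivity) at 30 m versus a bright one at 1 m.
    print(f"{relative_return_energy(30.0, 0.1):.2e}")
```

Under these assumptions, a 10 % reflective target at 30 m returns about four orders of magnitude less energy than a fully reflective target at 1 m, which illustrates the dynamic range the detector has to handle.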

2-3 LIDAR SENSOR COMPONENTS

A LiDAR sensor consists of a transmitter unit that shoots the laser at the targets using a high power pulse, a detector that detects the light signal reflected back from the targets, a signal processing unit that calculates the distance to the target, and an optical system for laser collimation and light collection.

Ⅲ. Design of LIDAR Sensor

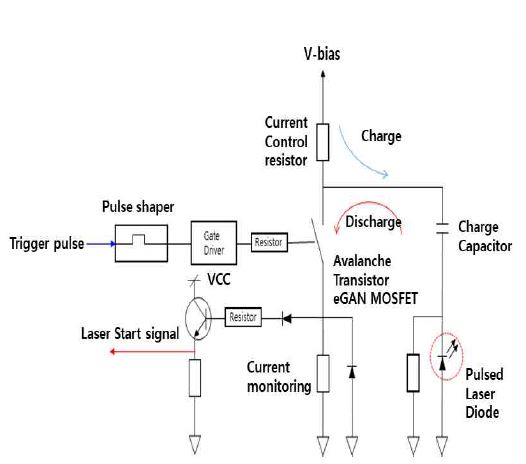

3-1 DESIGN OF PULSED LASER DIODE DRIVER

In order to drive the PLD (Pulsed Laser Diode), a high voltage (45 V to 300 V) is generally switched and charge is stored in a charging capacitor. The stored charge is then discharged through the PLD by switching an avalanche transistor or an eGaN FET. The PLD used here is an OSRAM SPL PL90_3 laser diode. Table 1 shows the specification of the diode used in this paper. Its peak wavelength is 905 nm, and its nominal peak output power is 75 W. [12], [13]

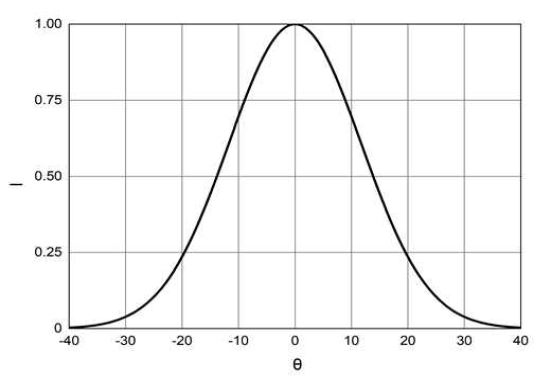

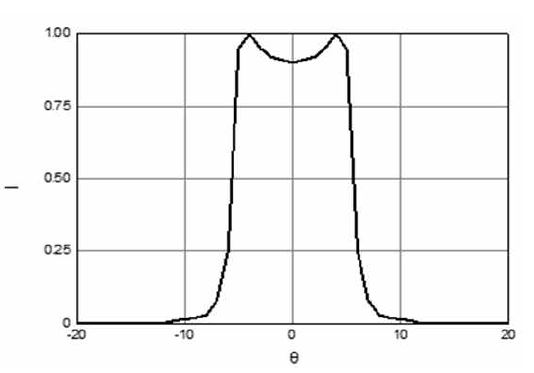

Figures 3 and 4 show the beam divergence of the laser diode in the vertical and horizontal directions. Typically, the beam divergence is 9° parallel to the pn-junction and 25° perpendicular to it. The aperture size is 200 µm × 10 µm.

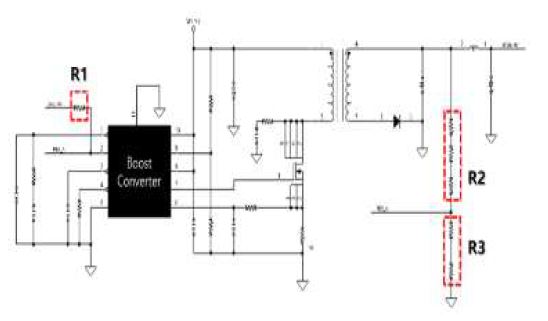

Figure 5 shows a block diagram of the operation of the PLD. The required current capacity ($I_{PS}$) can be expressed as equation (3). The total input capacitance is the sum of the pulse forming network capacitance ($C_{PFN}$), the MOSFET capacitance ($C_{FET}$), and the parasitic capacitance ($C_{STRAY}$); the required current is then obtained from this total, the drive voltage (V), and the frequency (f). [14], [15], [16]

$$I_{PS} = (C_{PFN} + C_{FET} + C_{STRAY}) \cdot V \cdot f \tag{3}$$

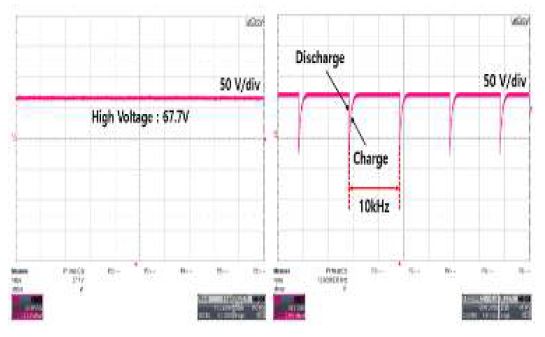

The PLD circuit was designed as shown in Figure 5, where $C_{PFN}$ = 500 pF, $C_{FET}$ = 120 pF, $C_{STRAY}$ = 200 pF, the frequency is 10 kHz, and the input voltage is approximately 67 V.
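As a quick check of the supply requirement, the sketch below evaluates the charge-per-pulse relation I = C·V·f with the values quoted above. It assumes equation (3) has this standard form; because only the sum of the three capacitances enters, the result does not depend on which value belongs to which capacitor. The function name is hypothetical.

```python
def required_supply_current(
    c_pfn_f: float, c_fet_f: float, c_stray_f: float, voltage_v: float, prf_hz: float
) -> float:
    """Average supply current in amperes, assuming I = (sum of C) * V * f."""
    total_capacitance = c_pfn_f + c_fet_f + c_stray_f
    return total_capacitance * voltage_v * prf_hz


if __name__ == "__main__":
    # Values quoted in the text: 500 pF, 120 pF, 200 pF, ~67 V, 10 kHz.
    i_ps = required_supply_current(500e-12, 120e-12, 200e-12, 67.0, 10e3)
    print(f"I_PS = {i_ps * 1e3:.3f} mA")
```

With these numbers the average charging current is on the order of half a milliampere, even though the peak current through the diode during each nanosecond pulse is far higher.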

3-2 DESIGN OF APD DETECTOR

Figure 6 shows the charging and discharging pulse when the input voltage is 67.7 V and the Pulse Repetition Frequency (PRF) is 10 kHz. The voltage and frequency were measured using a LeCroy WaveSurfer 104MXs-B oscilloscope.

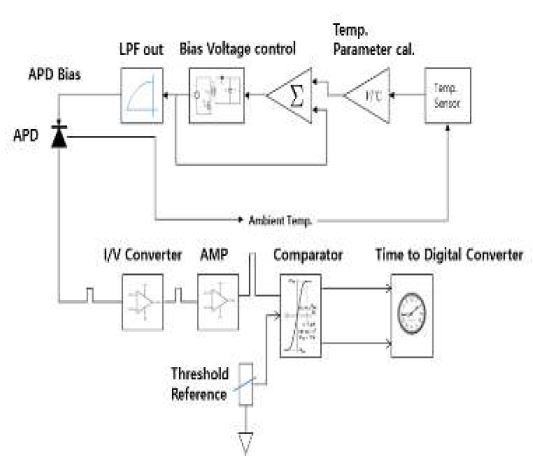

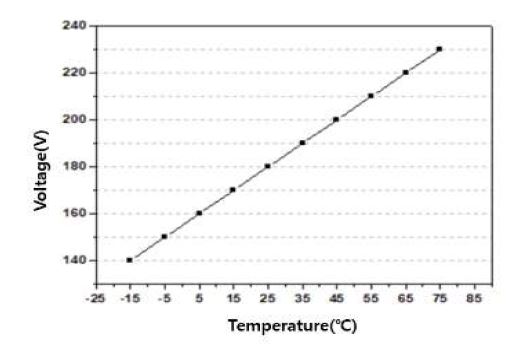

In this system, it is necessary to detect the PLD's emission signal when it is reflected back from the target. In this study, an I/V converter was designed to convert the light signal into a voltage signal. Figure 7 shows the APD Detector block diagram. The detector was designed using an APD with a peak wavelength of 905 nm. However, the characteristics of an APD change with temperature, so it is important to maintain an optimized multiplication factor (M). We propose a temperature compensation circuit that controls the APD bias voltage according to the value from an external temperature sensor, which makes it possible to maintain the optimum M value. [17], [18], [19], [20]

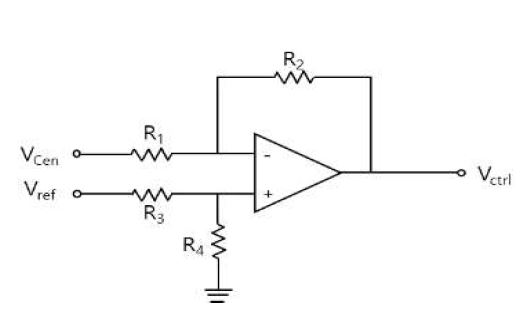

Figure 8 shows a temperature compensation method using a differential amplifier. The amplifier compares the value from the temperature sensor (TMP36) with the APD bias voltage. A reference voltage is applied to V+ of the op-amp, and the voltage from the TMP36 sensor is applied to V- of the op-amp. The temperature compensation circuit can be expressed by the equations below. [21] Equation (6) can be derived from equations (4) and (5).

| (4) |

| (5) |

| (6) |

Figure 9 shows the designed temperature compensation circuit for the APD bias. It is based on equation (6) and compares the external temperature sensor's output voltage with the reference voltage to adjust the Ctrl voltage, which makes it possible to maintain the optimum M value as the temperature changes. [22]

| (7) |

The output voltage of the proposed APD Bias circuit can be represented as shown in expression (7). [23]

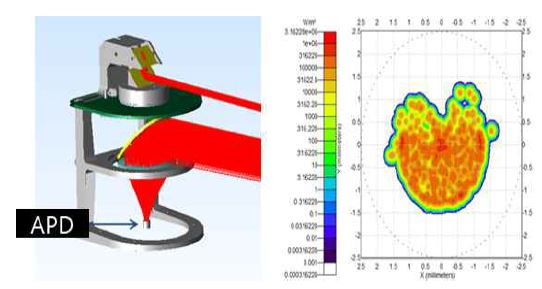

3-3 DESIGN OF LIDAR OPTICAL SYSTEM FOR AGVs

The optical system of the LiDAR sensor consists of elements such as the optical system of the transmitter unit at the top of the rotating mirror, the optical system of the receiver unit at the bottom of the rotating mirror, a PLD, a reflector, a lens, and a detector.

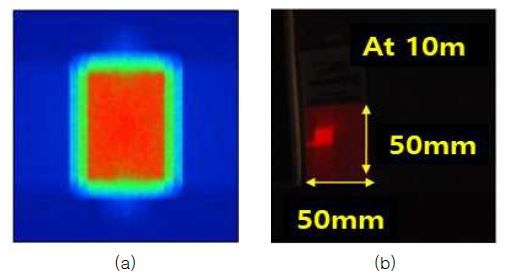

Figure 11 shows the proposed LiDAR sensor's optical system. It was designed to obtain a 270° FoV (Field of View) using a rotating mirror. The laser aperture of the Pulsed Laser Diode is 200 µm × 10 µm, and the beam divergence is 25° (fast axis) and 9° (slow axis). The relation used to collimate the laser beam is given in equation (8).

| (8) |

Sb is the beam size on the target, d is the distance between the lens and the target, Sc is the chip size of the laser, and f is the focal distance of the lens. In this paper, according to equation (8), the focal distance of the lens must be 30 mm in order to achieve a radial angle of 5 mrad (i.e. a 100 mm beam size at 10 m) and must be at least 27 mm to correspond to a 25° radial angle. Therefore, the focal distance adopted was 30 mm, and the beam size on the target was designed to be 50 mm at 10 m using a small collimation lens. [24], [25]

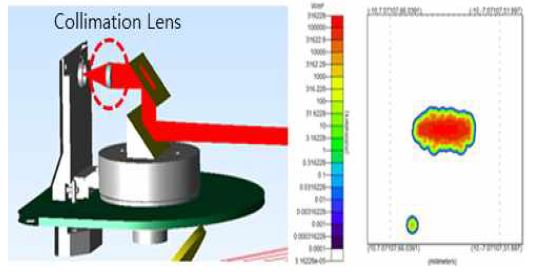

Figure 12 shows a simulation of the beam size on a target at 10 m using the designed lens. In the actual measurements, the beam was 50 mm in both width and length, which matches the simulation results.

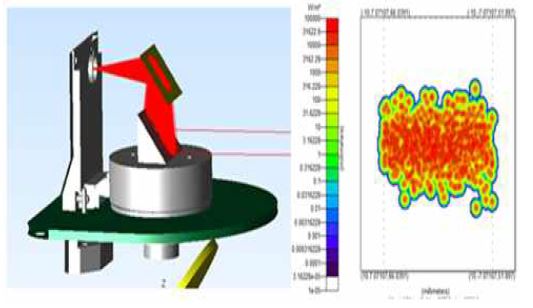

Figure 13 shows the simulation result for a system without a collimation lens in the transmitter unit. In this simulation, at least 100 million rays are needed to give a sufficient spread of beam results to detect the target. This shows that the collimation lens is an essential element of this system.

Figure 14 shows the simulation results with the collimation lens. In this simulation, the beam size on the target is 50 mm × 50 mm at 10 m. The collimation lens is an N-BK7 plano-convex lens. Its EFL (Effective Focal Length) is 20 mm, which makes it possible to detect targets 20 mm from the laser.

Figure 15 shows the simulation results for the receiver unit's lens. The simulation results show that an EFL of 30 mm gives the best efficiency.

Based on these simulations, we attached a collimation lens to the transmitter unit. The receiver unit, in the lower part of the system, is designed as an integral structure that includes the light collection lens. The proposed LiDAR sensor's optical system is structured to allow easy adjustment in the up, down, left, and right directions.

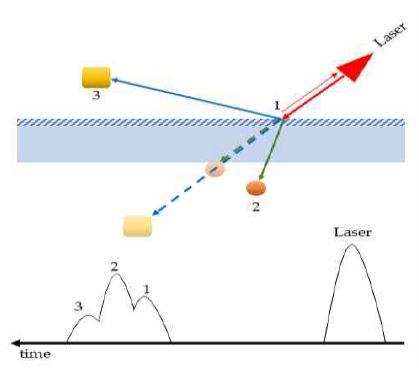

Figure 17 shows the reflection of the laser by surface type. Laser measurement results differ by surface type, for example for mirrors or glass. If the surface of the detected object is a mirror, it can be measured effectively only when the laser is incident perpendicular to the surface; otherwise the diffuse reflectance is greatly reduced. Precise measurement of transparent objects such as glass is also difficult because of light refraction. When measuring a transparent object, multiple signals may be detected, such as reflections from inside the object or from behind it. Diffuse and specular reflection signals may also be detected from the surface of an object that is not totally transparent. As a result, uncertain measurement results can occur, as shown in Figure 17. To prevent this problem, objects with such unstable surface types in the LiDAR environment need to be treated to reduce their transparency and reflectivity.

A walk error is a distance error caused by the reflectivity of the target. Ideally, a distance measurement should be independent of any differences in reflectivity. In laser sensors, however, distance errors occur when the analog signal is converted to a digital signal. To solve this problem, we developed a feed-forward compensation algorithm: the relationship between distance error and signal amplitude was obtained experimentally and converted into a look-up table.

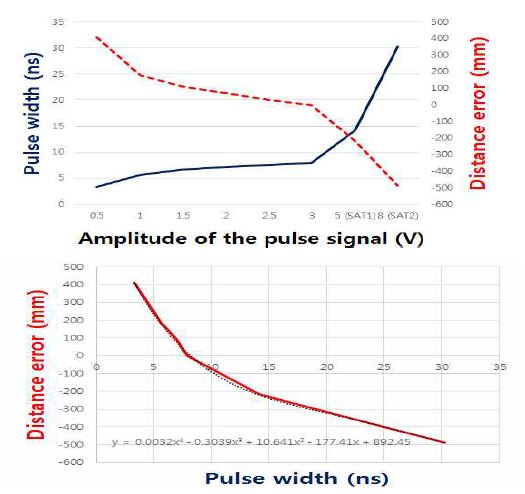

Figure 18 shows a graph of pulse amplitude versus pulse width and distance error, as well as the compensation equation. The amplitude and width of the signal are related by a consistent ratio. Therefore, if the width of the signal is measured, the amplitude can be inferred even in the saturation region. The measured width also indicates the size of the distance error that occurs.
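The look-up-table idea behind the feed-forward compensation can be sketched as follows. The calibration pairs, the linear interpolation between them, and the choice to subtract the error from the raw distance are all assumptions made for illustration; the paper's measured table and compensation equation (Figure 18) are not reproduced here.

```python
from bisect import bisect_right

# Hypothetical calibration table: (pulse width in ns, distance error in cm).
# Narrow (weak) pulses are assumed to produce larger walk errors.
CALIBRATION = [(5.0, 12.0), (10.0, 7.0), (20.0, 3.0), (40.0, 1.0)]


def walk_error_cm(pulse_width_ns: float, table=CALIBRATION) -> float:
    """Look up the walk error for a pulse width, interpolating linearly."""
    widths = [w for w, _ in table]
    errors = [e for _, e in table]
    # Clamp outside the calibrated range instead of extrapolating.
    if pulse_width_ns <= widths[0]:
        return errors[0]
    if pulse_width_ns >= widths[-1]:
        return errors[-1]
    i = bisect_right(widths, pulse_width_ns)
    w0, w1 = widths[i - 1], widths[i]
    e0, e1 = errors[i - 1], errors[i]
    t = (pulse_width_ns - w0) / (w1 - w0)
    return e0 + t * (e1 - e0)


def compensate(raw_distance_cm: float, pulse_width_ns: float) -> float:
    """Apply feed-forward compensation by subtracting the looked-up error."""
    return raw_distance_cm - walk_error_cm(pulse_width_ns)


if __name__ == "__main__":
    print(compensate(1000.0, 15.0))
```

Indexing the table by pulse width rather than amplitude follows the reasoning above: width remains measurable when the amplitude saturates.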

3-4 PROPOSED SIGNAL PROCESSING

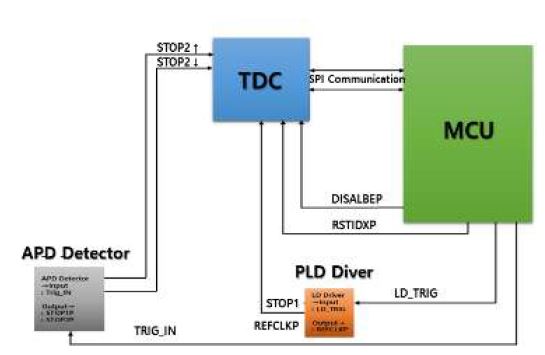

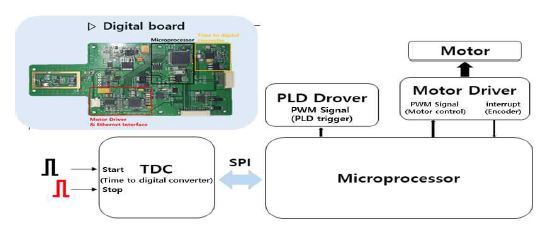

The LiDAR sensor for AGV SLAM uses an MCU based on the ARM Cortex-M4. The MCU reads the TDC to obtain a distance, using the trigger signal from the BLDC motor and the stop signal from the APD Detector; it also drives the motor and the Pulsed Laser Diode Driver.

Figure 20 shows Δt, the time difference between transmitting a signal and receiving it. The TDC opens a gate for the period between the start and stop signals, and Δt is measured by counting the number of gates. Using the time-of-flight method, Δt is converted into a distance value and stored in memory. This TDC measurement is performed periodically during the scanning operation: each time the direction irradiated by the scanning structure changes by a fixed angle, a new distance is measured. The resulting angle and distance pairs are combined into the distance dataset in the coordinate system of the LiDAR sensor. [26]
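The construction of a distance dataset in the sensor's coordinate system can be sketched as a polar-to-Cartesian conversion. The 270° field of view and 0.1° step are taken from the paper; the choice of a scan centred on the sensor's forward (x) axis, the use of `None` for missing returns, and the function names are assumptions for illustration.

```python
import math


def points_per_scan(fov_deg: float = 270.0, resolution_deg: float = 0.1) -> int:
    """Number of measurement directions in one scan, both end points included."""
    return int(round(fov_deg / resolution_deg)) + 1


def scan_to_points(distances_m, fov_deg: float = 270.0, resolution_deg: float = 0.1):
    """Convert one scan of distances into (x, y) points in the sensor frame.

    Index 0 is assumed to lie at -fov/2, and each following index advances
    by resolution_deg. Entries that are None (no return) are skipped.
    """
    start_deg = -fov_deg / 2.0
    points = []
    for i, d in enumerate(distances_m):
        if d is None:
            continue
        theta = math.radians(start_deg + i * resolution_deg)
        points.append((d * math.cos(theta), d * math.sin(theta)))
    return points


if __name__ == "__main__":
    n = points_per_scan()
    scan = [10.0] * n  # a hypothetical scan: every return at 10 m
    pts = scan_to_points(scan)
    print(n, pts[n // 2])  # the middle point lies straight ahead
```

A SLAM front end would typically consume points of this kind, one set per mirror revolution.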

A motor driver was designed to control the BLDC motor through a PWM pulse. An optical interrupt signal from the encoder triggers an interrupt at fixed intervals, which is used to regulate the PWM signal fed to the motor driver. As a result, the motor rotates at a constant speed of 15 Hz, which increases the stability of the LiDAR's point data acquisition. The measurement time of each distance is also determined by the signal from the encoder attached to the motor: to obtain distance and scanning data point by point, the encoder signals are used to generate the laser trigger signal. [27]

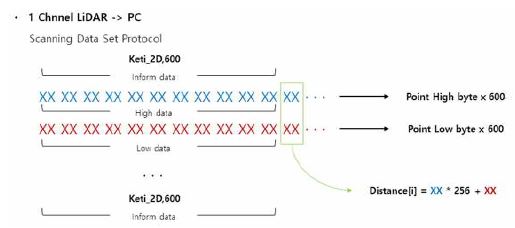

The time difference between the start and stop signals is measured using SCIOSENSE's TDC-GP22 chip; the acquired time difference is converted into distance information and stored in memory. [28] The stored data is converted into a predefined protocol format for transmission to the Viewer software. A point data set of 1208 bytes per layer is sent to a PC. One layer is composed of Inform data, High bit data, and Low bit data, and the distance value is obtained by combining the upper and lower bits. The number of LiDAR points is given by the index count in the Inform data. Figure 22 shows the defined protocol of the LiDAR point cloud data. [29]
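Recovering a distance value from its upper and lower parts can be sketched as below. The actual packet layout is defined in Figure 22 and is not reproduced here; the sketch assumes, purely for illustration, one high byte and one low byte per point, delivered as two equal-length byte sequences, and the function names are hypothetical.

```python
def combine_distance(high_byte: int, low_byte: int) -> int:
    """Combine an upper and a lower byte into one 16-bit distance value."""
    if not (0 <= high_byte <= 0xFF and 0 <= low_byte <= 0xFF):
        raise ValueError("each input must fit in one byte")
    return (high_byte << 8) | low_byte


def decode_points(high_bytes: bytes, low_bytes: bytes) -> list[int]:
    """Decode per-point distances from parallel high-byte and low-byte arrays."""
    if len(high_bytes) != len(low_bytes):
        raise ValueError("high and low byte arrays must have the same length")
    return [combine_distance(h, l) for h, l in zip(high_bytes, low_bytes)]


if __name__ == "__main__":
    # Hypothetical payload carrying two points.
    print(decode_points(bytes([0x0B, 0x00]), bytes([0xB8, 0x64])))
```

The unit of the decoded value (for example millimetres) and the position of the Inform data within the 1208-byte layer would have to be taken from the protocol definition.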

Figure 23 shows the resulting signal processing configuration: the TDC analyzes signals reflected off a target, the MCU processes the 32-bit distance data, and Ethernet communication handles transmission of the point cloud data.

Ⅳ. Environment and Experimental Results

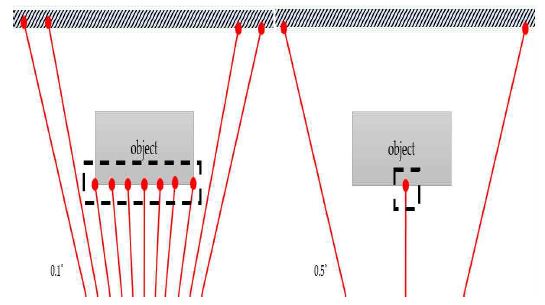

The tested commercial LiDAR for AGVs has a maximum measuring distance of 20 m and a field of view of 300°, whereas the proposed LiDAR has a maximum measuring distance of 30 m and a 270° field of view. The commercial LiDAR has a 0.5° angular resolution and a 25 Hz scan rate, whereas the proposed LiDAR has a 0.1° angular resolution and an adjustable scan rate of 10 Hz to 30 Hz. Both systems use infrared lasers (905 nm).

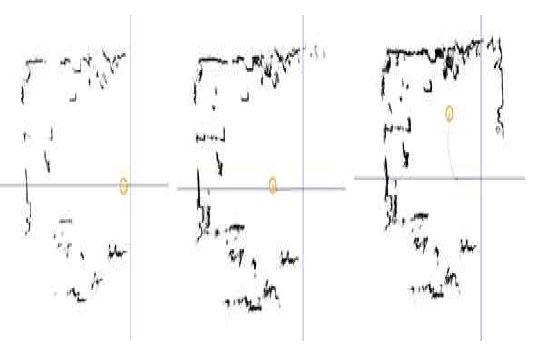

The performance of both sensors was verified by indoor experiments. The commercial LiDAR for AGVs was tested at 0.5° angular resolution, and the proposed LiDAR was tested at 0.1° angular resolution. Figure 24 shows the indoor field test conditions. The size of the room was 20 m × 20 m, as measured by the proposed sensor.

Distance data was obtained using the Viewer for Point Cloud data measured by commercial LiDAR for AGVs, as shown in Figure 26.

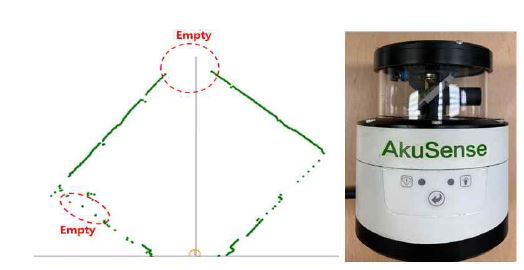

Point Cloud Data from commercial LiDAR for AGVs. The LiDAR for industrial AGVs used in this experiment is from AkuSense Inc., a leading provider of sensor application solutions. The model name used is AKU-LS-D20-300.

Figure 26 shows the point cloud data from the commercial LiDAR for AGVs. Its angular resolution is 0.5°, which leads to undetected areas depending on the reflectivity and color of certain objects. Our LiDAR sensor has a 0.1° angular resolution, which improves its detection ability by a factor of 5 or more compared with the commercial sensor, as shown in Figure 27.

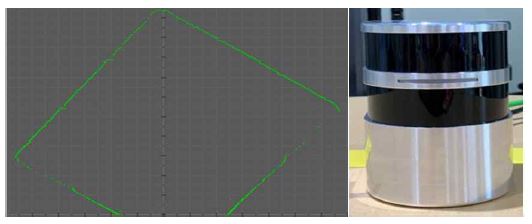

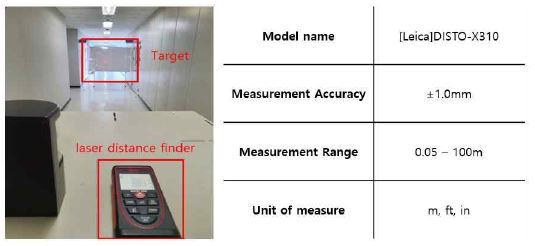

Sensor accuracy at 1 m increments was tested for distances from 1 m to 10 m. A coated white board was used as the target. Each distance from 1 m to 10 m was measured 1000 times. A Leica laser distance finder was used to determine any error. Figure 28 shows the experiment setup and laser distance finder’s specification. Table 2 shows the average errors and accuracies.

Figure 29 shows error and accuracy graphs. According to Table 3, an average error of 0.12 cm occurred at a distance of 1 m, and an average error of 0.672 cm occurred at 10 m. From 1 m to 10 m, the overall average error was about 0.33 cm. The accuracy of the distance measurements was 99.88 % at 1 m and 99.93 % at 10 m, and the average accuracy was about 99.93 %. The proposed LiDAR sensor system for AGVs thus shows improved reliability of the measured distance.
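The reported accuracy figures are consistent with accuracy defined as one minus the relative error. That definition is inferred from the numbers rather than stated in the paper, so the sketch below should be read as an assumption; the function name is hypothetical.

```python
def accuracy_percent(mean_error_cm: float, distance_m: float) -> float:
    """Accuracy in percent, assuming accuracy = (1 - error / distance) * 100."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    distance_cm = distance_m * 100.0
    return (1.0 - mean_error_cm / distance_cm) * 100.0


if __name__ == "__main__":
    # Error values quoted in the text for the 1 m and 10 m targets.
    for error_cm, distance_m in ((0.12, 1.0), (0.672, 10.0)):
        acc = accuracy_percent(error_cm, distance_m)
        print(f"{distance_m:4.1f} m: {acc:.2f} %")
```

Under this definition, the absolute error grows with distance while the relative accuracy stays nearly constant.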

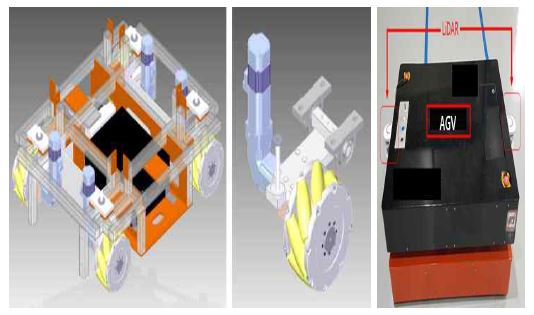

Figure 30 shows a real AGV model for testing AGV obstacle avoidance. It is equipped with LiDAR sensors and performs obstacle avoidance based on the LiDAR sensor data.

A mapping experiment was conducted with our LiDAR sensor attached to a real AGV. Figure 31 shows the path of the AGV. Map data was acquired while the AGV was moving on its path.

An autonomous driving test was conducted using the map obtained by the LiDAR sensor on the AGV. This demonstration confirms that the AGV can localize itself and drive autonomously to a designated destination by comparing the map information with the measurements of the proposed 2D laser sensor. Because the proposed LiDAR has 5 times finer scan resolution than the commercial LiDAR, it obtains 5 times as much data in every frame. In addition, the minimum detectable object size is 5 times smaller at the same distance. When the AGV passes between objects, it will hit them unless the LiDAR sensor mounted on it detects the exact edges of the objects. While driving, the AGV compares its map with the information scanned by the LiDAR to determine whether it should avoid an object. If the angular resolution is not fine enough, errors occur while driving. Figure 32 shows the different detection points on the same object at 0.1° and 0.5° angular resolution from the same distance.
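The effect of angular resolution on the smallest resolvable feature can be estimated from simple geometry. The sketch below computes the spacing between two adjacent beams on a surface perpendicular to the line of sight; treating that spacing as a proxy for the minimum detectable object size is an assumption, and the function name is hypothetical.

```python
import math


def point_spacing_m(distance_m: float, resolution_deg: float) -> float:
    """Chord length between two adjacent beams at the given distance."""
    if distance_m < 0 or resolution_deg <= 0:
        raise ValueError("distance must be >= 0 and resolution must be > 0")
    half_angle = math.radians(resolution_deg) / 2.0
    return 2.0 * distance_m * math.tan(half_angle)


if __name__ == "__main__":
    fine = point_spacing_m(10.0, 0.1)
    coarse = point_spacing_m(10.0, 0.5)
    print(f"0.1 deg: {fine * 1000:.1f} mm, 0.5 deg: {coarse * 1000:.1f} mm")
    print(f"ratio: {coarse / fine:.2f}")
```

At 10 m the beam spacing is roughly 17 mm for 0.1° and 87 mm for 0.5°, which matches the factor of 5 discussed above.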

Ⅴ. Conclusion

In this paper, a LiDAR sensor for AGVs that supports simultaneous localization and mapping (SLAM) was proposed. The designed and manufactured LiDAR sensor consists of a PLD with a pulse repetition frequency of 10 kHz, an APD detector with temperature compensation and a –100 V to –300 V range, and an optical system with an integrated transmitter and receiver unit, and it provides a FoV of 270° and an angular resolution of 0.1°. The LiDAR sensor output data is sent to a PC via an Ethernet connection. The proposed LiDAR sensor system was attached to a real AGV for performance evaluation. The system's average distance error was found to be 0.33 cm, and its average accuracy was 99.93 %. These results show that the proposed sensor's detection ability gives a more than 5-fold improvement compared with commercial LiDAR sensors.

Acknowledgments

This work was supported in part by the Commercialization Promotion Agency for R&D Outcomes (COMPA) grant funded by the Korea government (3D LiDAR Technology for autonomous mobile robots in unspecific environment), in part by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (Grant number: 2018R1A2B600821613), and in part by the BK21 FOUR Program (Fostering Outstanding Universities for Research, 5199991714138) funded by the Ministry of Education (MOE, Korea) and the National Research Foundation of Korea (NRF).

References

-

Dongqing Shi, Haiyan Mi, Emmanuel G. Collins, and Jun Wu, “An Indoor Low-Cost and High-Accuracy Localization Approach for AGVs,” IEEE Access., vol. 8, pp. 50085-50090, March. 2020.

[https://doi.org/10.1109/ACCESS.2020.2980364]

-

Yunlong Zhao, Xiaoping Liu, Gang Wang, Shaobo Wu, and Song Han, “Dynamic Resource Reservation Based Collision and Deadlock Prevention for Multi-AGVs,” IEEE Access., vol. 8, pp. 2169-3536, April 2020.

[https://doi.org/10.1109/ACCESS.2020.2991190]

-

T. Nishi, and Y. Tanaka, “Petri net decomposition approach for dispatching and conflict-free routing of bidirectional automated guided vehicle systems,” IEEE Trans. Syst., Man, Cybern. A, Syst. Humans, vol. 42, no. 5, pp. 1230-1243, Sept. 2012.

[https://doi.org/10.1109/TSMCA.2012.2183353]

-

Mostafa Rashdan, “Multi-step and high-resolution vernier-based TDC architecture.” 2017 29th International Conference on Microelectronics (ICM)., pp. 1-8, Dec. 2017.

[https://doi.org/10.1109/ICM.2017.8268819]

-

Sibo Quan, and Jianwu Chen, “AGV Localization Based on Odometry and LiDAR,” 2019 2nd World Conference on Mechanical Engineering and Intelligent Manufacturing., pp. 483-486, Nov. 2019.

[https://doi.org/10.1109/WCMEIM48965.2019.00102]

- G. D. Choi, M. H. Han, M. H. Song, H. S. Seo, C. Y. Kim, S. C. Hong, and B. K. Mheen, “Development trends and expectation of three-dimensional imager based on LiDAR technology for autonomous smart car navigation,” Electronics and Telecommunications Trends., vol. 31, pp. 86-97, Aug. 2016.

-

Yilmaz. A, and Temeltas. H, “Self-adaptive Monte Carlo method for indoor localization of smart AGVs using LIDAR data,” Robotics and Autonomous Systems., vol. 122, Dec. 2019.

[https://doi.org/10.1016/j.robot.2019.103285]

-

De Ryck. M, Versteyhe, and F. Debrouwere, “Automated guided vehicle systems, state-of-the-art control algorithms and techniques,” Journal of Manufacturing Systems., vol. 54, pp. 152-173, Jan. 2020.

[https://doi.org/10.1016/j.jmsy.2019.12.002]

-

Pierzchała. M, Giguère. P, and Astrup. R, “Mapping forests using an unmanned ground vehicle with 3D LiDAR and graph-SLAM,” Computers and Electronics in Agriculture., vol. 145, pp. 217-225, Feb. 2018.

[https://doi.org/10.1016/j.compag.2017.12.034]

-

Royo. S, and Ballesta-Garcia. M, “An Overview of Lidar Imaging Systems for Autonomous Vehicles,” Appl. Sci., vol. 9, pp. 4093, Sept. 2019.

[https://doi.org/10.3390/app9194093]

- P. Palojarvi, T. Ruotsalainen, and J. Kostamovaara, “A new approach to avoid walk error in pulsed laser rangefinding,” IEEE International Symposium on Circuits and Systems (ISCAS), vol.1, pp. 258-261, Jun. 1999.

- John Glaser, “High Power Nanosecond Pulse Laser Driver Using an GaN FET,” PCIM Europe 2018; International Exhibition and Conference for Power Electronics, Intelligent Motion, Renewable Energy and Energy Management., pp. 662-669, June. 2018.

- OSRAM Opto Semiconductors Inc., SPL PL90_3 Datasheet, 2018. [Online]. Available: https://www.osram.com/ecat/Radial%20T1%203-4%20SPL%20PL90_3/com/en/class_pim_web_catalog_103489/global/prd_pim_device_2220019/

-

Armin Liero, Andreas Klehr, Sven Schwertfeger, Thomas Hoffmann, and Wolfgang Heinrich, “Laser driver switching 20 A with 2 ns pulse width using GaN,” 2010 IEEE MTT-S International Microwave Symposium., pp. 23-28, May. 2012.

[https://doi.org/10.1109/MWSYM.2010.5517952]

-

Xu Lei, Bin Feng, Guiping Wang, Weiyu Liu, and Yalin Yang, “A Novel FastSLAM Framework Based on 2D LiDAR for Autonomous Mobile Robot,” Electronics., vol. 9, pp. 695, Apr. 2020.

[https://doi.org/10.3390/electronics9040695]

-

Żbik. M, and Wieczorek. P.Z, “Charge-Line Dual-FET High-Repetition-Rate Pulsed Laser Driver,” Appl. Sci., vol. 9, pp. 1289.

[https://doi.org/10.3390/app9071289]

-

Anand Singh, and Ravinder Pal, “Infrared Avalanche Photodiode Detectors,” Defence Science Journal., vol. 67, pp. 159-168, Mar. 2017.

[https://doi.org/10.14429/dsj.67.11183]

-

Yihan Cao, Xiongzhu Bu, Miaomiao Xu, and Wei Han, “APD optimal bias voltage compensation method based on machine learning,” ISA Transactions., vol. 97, pp. 230-240, Feb. 2020.

[https://doi.org/10.1016/j.isatra.2019.08.016]

-

Lixia Zheng, Ziqing Weng, Jin Wu, Tianyou Zhu, Meiya Wang, and Weifeng Sun, “A current detecting circuit for linear-mode InGaAs APD arrays,” 2016 IEEE International Conference on Electron Devices and Solid-State Circuit., pp. 399-402.

[https://doi.org/10.1109/EDSSC.2016.7785292]

- MAXIM Integrated Inc., MAX40660 Datasheet, 2019, [Online].Available:https://www.maximintegrated.com/en/products/analog/amplifiers/MAX40660.html

- Analog Devices Inc., TMP36 Datasheet, 2015, [Online]. Available:https://www.analog.com/en/products/tmp36.html#product-overview

- Shijie Deng, John Hayes, and Alan P. Morrison, “A bias and control circuit for gain stabilization in avalanche photodiodes,” IET Irish Signals and Systems Conference., pp. 1-5, Jun. 2012.

-

Po-Wa Lee, Yim-Shu Lee, D.K.W. Cheng, and Xiu-Cheng Liu, “Steady-state analysis of an interleaved boost converter with coupled inductors,” IEEE Transactions on Industrial Electronics., vol. 47, pp. 787-795, Aug. 2000.

[https://doi.org/10.1109/41.857959]

-

Ershov, Petr, et al, “Fourier crystal diffractometry based on refractive optics,” Journal of Applied Crystallography., vol. 46, pp. 1475-1480, Aug. 2013.

[https://doi.org/10.1107/S0021889813021468]

-

Shu Yuan, Huajun Yang, and Kang Xie, “Design of aspheric collimation system for semiconductor laser beam,” Optik., vol. 121, pp. 1708-1711, Oct. 2010.

[https://doi.org/10.1016/j.ijleo.2009.04.002]

- Youngjoon Cho, Yeomin Yoon, Joowan Lyu, and Kyihwan Park, “Auto Gain Control method using the current sensing amplifier to compensate the walk error of the TOF LiDAR,” 2019 19th International Conference on Control, Automation and Systems (ICCAS)., pp. 1403-1406, Jan. 2020.

-

Jun Chen, Mingjiang Wang, and Xueliang Ren, “The Control System Design of BLDC Motor,” International Conference on Mechanical, Electrical, Electronic Engineering & Science (MEEES 2018)., vol. 154, pp. 343-349, May. 2018.

[https://doi.org/10.2991/meees-18.2018.60]

- Sciosense Inc., TDC-GP22 Datasheet, 2019, [Online]. Available:https://www.sciosense.com/products/ultrasonic-flow-converters/tdc-gp22-ultrasonic-flow-converter/

-

Maliang Liu, Haizhu Liu, Xiongzheng Li, and Zhangming Zhu, “A 60-m Range 6.16-mW Laser-Power Linear-Mode LiDAR System With Multiplex ADC/TDC in 65-nm CMOS,” IEEE Transactions on Circuits and Systems I: Regular Papers., vol. 67, pp. 753-764, Dec. 2019.

[https://doi.org/10.1109/TCSI.2019.2955671]

-

Takamoto Watanabe, and Hirofumi Isomura, “All-digital ADC/TDC using TAD architecture for highly-durable time-measurement ASIC,” 2014 IEEE International Symposium on Circuits and Systems (ISCAS)., pp. 674-677, Jun. 2014.

[https://doi.org/10.1109/ISCAS.2014.6865225]

Author Biographies

2016: B.S., Korea National University of Transportation

2021: M.S., Graduate School, Chonnam National University

2019 to present: Korea Electronics Technology Institute

※ Research interests: sensors, LiDAR

2006: M.S., Graduate School, Chonnam National University

2017: Ph.D., Graduate School, Chonnam National University

2007 to 2009: LG Innotek

2010 to 2013: Samsung Electronics

2014 to present: Korea Electronics Technology Institute

※ Research interests: power electronics, dc-dc converters, LiDAR sensors, fusion sensors, wireless power transfer

2003: M.S., Graduate School, Seoul National University

2007: Ph.D., Graduate School, Seoul National University

2007 to 2011: SK Hynix Semiconductor

2011 to 2014: Korean Intellectual Property Office

2014 to present: Professor, Department of ICT Convergence System Engineering, Chonnam National University

※ Research interests: power semiconductor device design, power circuit design, emerging fabrication technologies and devices, LiDAR sensors, integrated circuit design