Need to belong, privacy concerns and self-disclosure in AI chatbot interaction

Copyright ⓒ 2020 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

This paper investigates what leads individuals to self-disclose in an artificial intelligence (AI) chatbot interaction and what the outcomes of such an interaction are. Specifically, it asks whether the levels of self-disclosure, social presence, and intimacy differ when individuals believe they are interacting with a human rather than an AI. In addition, the user’s personality trait of ‘need to belong’ (NTB) is hypothesized to produce meaningful differences in the nature and evaluation of the chatbot interaction. To this end, a 2 (perceived humanness: AI chatbot, human) x 2 (NTB: high, low) x 2 (privacy concerns: high, low) between-subject experimental design was employed (N=646). The results revealed no significant effect of perceived humanness on self-disclosure, social presence, or intimacy: participants experienced the interaction similarly whether they believed they were communicating with an AI or a human. NTB, by contrast, significantly affected individuals’ interaction with the artificial agent. No significant moderating role of privacy concerns was found. Implications of these results are discussed in light of the recent growth of AI agent services.

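Abstract (Korean)

This study examined whether AI chatbot users’ experiences of self-disclosure, social presence, and intimacy differ according to the perceived humanness of their conversation partner. In particular, it examined whether the various psychological effects elicited by interaction with a chatbot differ according to the user attribute of ‘need to belong’ (NTB). To this end, a 2 (need to belong: high/low) x 2 (perceived humanness: AI/human) x 2 (privacy concerns: high/low) between-subject experimental design was employed (N=646). Participants were told that their partner in an identical interaction was either a chatbot or a human, and, following a prepared scenario, held a conversation consisting of questions that asked them to reveal information about themselves. The results showed that perceived humanness produced no significant differences on most dependent variables. However, the degree of self-disclosure varied significantly according to users’ need to belong, and the moderating effect of privacy concerns was also not significant. Based on these results, implications for the future development of the AI chatbot industry are presented.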

Keywords:

AI, Chatbot, Perceived humanness, Need-to-belong, Self-disclosure, Social presence, Intimacy, Privacy concerns

Keywords (Korean):

Artificial intelligence, Chatbot, Self-disclosure, Social presence, Intimacy, Privacy concerns

Ⅰ. Introduction

Chatbots are artificial intelligence programs designed to interact with individuals using natural language. Also known as conversational agents, they are defined as “software that accepts natural language as input and generates natural language as output, engaging in a conversation with the user”[1]. As chatbots have recently regained prominence with the development of “smart assistants” such as Google Assistant or Siri, the interaction between user and conversational agent has become more “human-like,” simulating natural conversation to better respond to individuals’ needs[2]. Through more personalized interfaces with tailored cues, such as voice tone, use of humor, or type of response, the interaction between a user and an AI chatbot is becoming increasingly natural and meaningful. To achieve a truly individualized AI, the AI would need to produce more ‘natural and random’ responses. Individuals would then interact with a more personal type of AI and, hypothetically, develop a more ‘intimate’ interaction with the AI interface.

This study examines the nature and process of how individuals interact with AI agents, specifically a conversational chatbot. To understand why an individual self-discloses in an interaction with an AI agent, it asks whether the interaction differs when individuals believe they are interacting with an AI rather than a human. In addition, the user’s psychological trait of need to belong is taken into account for a more comprehensive understanding of how individual differences can affect the quantity and quality of human-AI interaction. Because significant interactions are created when individuals ‘affectively bond’ with another person, the study examines whether NTB explains why some people tend toward a deeper form of interaction with an AI agent than others. Feelings of social presence and intimacy are also measured to look for possible differences between an AI and a human conversational partner. Finally, the possible moderating effect of privacy concerns is explored.

Ⅱ. Literature Review

2-1 Perceived humanness of chatbots

Anthropomorphism is “the assignment of human traits or qualities such as mental abilities”[3]. It also shapes how people judge others: “The process of determining humanity, intelligence, and social potential of others is largely based on perceptions of anthropomorphism”[4]. In human-computer interaction, anthropomorphism is generally considered beneficial for interaction with AI agents. Human-human conversation is natural for individuals, and the more closely a human-computer interaction mimics face-to-face conversation, the more natural and intuitive it becomes for an individual to converse with an AI-based media platform.

Under the overarching theoretical framework of CASA (computers are social actors), a number of empirical studies have shown that humans mindlessly apply the same social heuristics used in human interactions to computers[5-7]. An alternative explanation is that users direct their messages to the programmer behind the screen[6]. Moreover, as interfaces become more and more human-like, users respond socially to them more easily[7]. Human-computer relationships are governed by many of the same social rules of behavior that characterize interpersonal interaction[7]. Anthropomorphic cues on a computer interface elicit mindless responses by triggering the “social presence heuristic.” Agency affordance also influences information processing through this “social presence heuristic,” while interactivity affordance is associated with psychological empowerment generated by the user’s active engagement with the interface and content.

With regard to chatbots, research has examined how individuals interact differently when they know their conversation partner is a computer rather than a human[7-8]. Hill et al. found that individuals engaged more with the chatbot, sending as many as twice the number of messages they sent to a human counterpart; this was attributed to individuals adjusting their communication to match the chatbot’s level[8]. Although a chatbot cannot functionally replicate an intelligent human conversation, individuals still engage with chatbots. It is therefore necessary to examine how an AI chatbot is perceived by individuals during an interaction and what effects this perception could lead to. The following research question is proposed first:

RQ 1. What are the effects of perceived humanness on a chatbot user’s self-disclosure, social presence and intimacy?

2-2 Self-disclosure of chatbot users

Self-disclosure is one of the elements of Altman and Taylor’s[9] social penetration theory, which states that the more individuals reveal about themselves, the more their partners tend to like them. Self-disclosure thus tends to be rewarding, and individuals like those who provide them with rewards. The degree of self-disclosure is often categorized along two dimensions: disclosure breadth, which refers to how many self-relevant statements an individual makes in an interaction, and disclosure depth, which refers to the intimacy level of the disclosure[9]. As mentioned earlier, previous research has shown that individuals form relationships with computers regardless of whether the computers are represented by an object, a voice, an agent or any other device[5]. Human-computer relationships follow many of the same social rules of behavior that characterize interpersonal interaction. However, according to affective bonding theory, each relationship is unique to the two parties communicating, so an established relationship is not transferable to other computers or humans[10].

As individuals increasingly interact with non-human interlocutors such as artificial agents or robots, Ho et al.[11] examined whether technology alters the effects of disclosure, taking media equivalency as the baseline mechanism for the interpersonal outcomes of disclosing to a chatbot. This study aims to better understand the psychological effects of the perceived humanness that users attribute to a chatbot, in order to clarify how people perceive interaction with a chatbot compared to a human.

2-3 Social presence and intimacy of chatbot use

Previous work exploring the impact of agency on social presence usually presents the virtual human as “an actual person or a computerized character prior to the interaction”[12]. Nowak and Biocca[4] found that “as artificial entities are represented by images or use language like humans, then they may elicit automatic social responses from users.” Their results showed no significant difference in the sense of presence regardless of whether participants were interacting with a human or not.

By contrast, Appel et al.[13], using the Rapport Agent, found that individuals felt higher levels of social presence when they thought they were interacting with a real human than when they believed it was an artificial intelligence. These results are consistent with Blascovich et al.’s model of social influence, which states that “avatars require a lower threshold of realism than agents to yield social presence”[14]. The goal in interaction is to manage uncertainty and enhance social presence. Social information processing theory states that, given enough time, people can achieve interpersonal outcomes via computer-mediated communication similar to those of face-to-face communication. People may therefore be expected to reach similar levels of social presence when talking to a computerized agent and to a human.

Intimacy is closely linked to how individuals perceive others; in an interaction with an AI agent, it indicates how close one feels to one’s interlocutor and influences subsequent feelings about the interaction. In an initial encounter, feelings of intimacy influence whether further interaction occurs: if individuals feel no intimacy, they may not prefer further interaction[15]. In this study, intimacy is measured with Berscheid et al.’s[23] intimacy scale to assess how close, including emotionally close, individuals can feel to an AI agent.

2-4 Chatbot users’ need to belong

To further our understanding of what causes individuals to react socially to chatbots, this study examines a dispositional attribute of individuals, NTB, as a potential answer. The need to belong refers to the notion that “human beings are fundamentally and pervasively motivated by a need to belong, that is, by a strong desire to form and maintain enduring interpersonal attachments,” a need with “multiple links to cognitive processes, emotional patterns, behavioral responses, and health and well-being”[16].

A number of studies have shown the important role of the sense of belonging in online environments[17-19]. Kim and Sundar[20] have shown, in the case of human-robot interaction, that individual differences in need to belong affected the quality of the interaction. In the context of human-AI interaction, the user’s level of NTB is postulated to affect the experience and psychological repercussions of the interaction. Accordingly, the following research question is put forth:

RQ 2. How does the user’s level of need to belong affect self-disclosure, social presence and intimacy?

2-5 Privacy Concerns

All communicative outcomes of chatbot use depend on the quantity and quality of the personal information the user shares. This inevitably raises the issue of users’ concerns about their privacy. Overall, privacy concerns have been shown to be an important element in understanding individuals’ behavior in new forms of interaction. However, research disagrees on how privacy concerns affect users’ information disclosure. Some studies have found that privacy concerns hardly impact self-disclosure[21]. On the other hand, studies based on privacy calculus theory assume that people make conscious decisions about their self-disclosure by “weighing the benefits of disclosure against their privacy concerns associated with such disclosure”[22].

In this study, a possible moderating role of privacy concerns is explored in determining individuals’ self-disclosure behavior, alongside the influence of NTB and perceived humanness. Privacy concerns are in turn expected to influence the level of self-disclosure in the interaction with an AI agent or a perceived human, and possibly the levels of social presence and intimacy. The following research question is put forward:

RQ 3. Do privacy concerns moderate the interaction of perceived humanness and need to belong on self-disclosure, social presence and intimacy?

Ⅲ. Methods

3-1 Experiment

A 2 (perceived humanness: AI chatbot, human) x 2 (NTB: high, low) x 2 (privacy concerns: high, low) between-subject online experimental design was employed. Perceived humanness was manipulated through the “Wizard of Oz” technique, which is commonly used in human-computer interaction studies: participants were randomly assigned to either the “with AI chatbot” or the “with human” condition, although in both conditions the responses were actually produced by a confederate following a preplanned scenario. After interacting with the AI or human through a chat window, participants completed a questionnaire on their perceived levels of self-disclosure, social presence and intimacy regarding the interlocutor, followed by questions about their level of NTB. A Korean online research company (Embrain) recruited 646 AI chatbot users as experimental participants. Of the participants, 51.4% were female, and the mean age was 39.17 years (SD=10.63).

3-2 Measurements

Self-disclosure was measured using 8 statements, including “The interlocutor and I exchanged enough personal information,” “I could speak to the interlocutor candidly,” “The amount of self-disclosure information was enough,” “I used many intimate words in my self-disclosure,” “I could talk about even my private information,” “I expressed my feelings when the interlocutor asked me about it,” and “My conversation contained information and facts.” Participants rated their agreement with these items on a 7-point Likert scale (Cronbach’s α=.90).
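The reliability values reported for each scale are Cronbach’s α coefficients, α = k/(k-1) x (1 - sum of item variances / variance of the summed score), where k is the number of items. As a minimal sketch, assuming a complete respondents-by-items matrix with no missing answers and with reverse-keyed items already recoded (the paper does not say how missing data were handled or which software was used), α can be computed with the standard library as follows. The function name and toy data are illustrative only.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix.

    Assumes every respondent answered every item and that any
    reverse-keyed items have already been reverse-coded.
    """
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple per item
    item_variances = sum(pvariance(item) for item in items)
    total_variance = pvariance([sum(row) for row in responses])
    # The same variance estimator is used for items and total score,
    # so the population/sample choice cancels out of the ratio.
    return k / (k - 1) * (1 - item_variances / total_variance)


# Hypothetical 7-point answers: 4 respondents x 3 items.
toy = [
    [5, 6, 5],
    [3, 3, 4],
    [6, 7, 6],
    [2, 3, 2],
]
print(round(cronbach_alpha(toy), 3))
```

Items that move together push α toward 1, while mutually uncorrelated items drive it toward 0.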

Social presence was measured with 12 items reflecting the perception of being together with the interlocutor. Statements were reverse-coded where appropriate. The statements included “I felt as if I was in the same space with my conversation partner,” “My conversation partner seemed to be together during the chat,” “My conversation partner was intensely involved in our interaction,” “My conversation partner communicated warmth rather than coldness,” “My interaction partner acted bored by our conversation,” “My conversation partner was interested in talking to me,” and “My interaction partner seemed to find our interaction stimulating.” All items used a 7-point Likert scale (Cronbach’s α=.87).

The intimacy scale developed by Berscheid et al.[23] was used to assess the closeness of the interaction. Five items were used, including “I felt as if my partner was my friend,” “I felt emotionally close to my conversation partner,” “My conversation partner used supportive statements to build a favorable relationship with me,” and “I developed a sense of familiarity with the conversation partner.” All items were rated on a 7-point Likert scale (Cronbach’s α=.94).

The NTB scale developed by Leary[24] was adopted to measure participants’ need to belong. A total of 10 items were rated on a 7-point Likert scale (Cronbach’s α=.82). Items included statements such as “If other people don’t seem to accept me, I don’t let it bother me,” “I try hard not to do things that will make other people avoid or reject me,” “I seldom worry about whether other people care about me,” “I want other people to accept me,” “Being apart from my friends for long periods of time does not bother me,” and “My feelings are easily hurt when I feel others do not accept me.” Reverse-keyed items were reverse-coded, and the median-split method was used to divide respondents into high- and low-NTB groups.
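The scoring steps described above (reverse-coding reverse-keyed items, combining items into a scale score, then a median split) can be sketched as follows. This is an illustration under stated assumptions, not the authors’ procedure: the scale score is taken to be the item mean, the indices in the hypothetical `REVERSED_NTB_ITEMS` assume the six example items are answered in the order listed (with the three items worded as low need to belong treated as reverse-keyed), and respondents exactly at the median are placed in the low group, a tie rule the paper does not specify.

```python
from statistics import mean, median

SCALE_MIN, SCALE_MAX = 1, 7  # 7-point Likert scale

# Hypothetical: positions of reverse-keyed items among the six example
# items in the order listed (items 1, 3 and 5 express a LOW need to belong).
REVERSED_NTB_ITEMS = {0, 2, 4}


def reverse_code(score: int) -> int:
    """Flip a 1-7 response: 1 <-> 7, 2 <-> 6, 3 <-> 5, 4 stays 4."""
    return SCALE_MIN + SCALE_MAX - score


def scale_score(responses: list[int], reversed_items: set[int]) -> float:
    """Mean item score after reverse-coding the reverse-keyed items."""
    return mean(
        reverse_code(r) if i in reversed_items else r
        for i, r in enumerate(responses)
    )


def median_split(scores: list[float]) -> list[str]:
    """Label scores above the sample median 'high', all others 'low'.

    Assumption: ties at the median go to the low group.
    """
    cut = median(scores)
    return ["high" if s > cut else "low" for s in scores]


# Usage with made-up answers from three respondents.
answers = [
    [2, 6, 1, 7, 2, 6],
    [6, 3, 5, 2, 6, 3],
    [4, 4, 4, 4, 4, 4],
]
scores = [scale_score(a, REVERSED_NTB_ITEMS) for a in answers]
print(scores, median_split(scores))
```

The same helper functions would apply to the privacy-concern items, which were also median-split.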

Privacy concerns were measured using 7 items, including “When I use an AI service, I feel uneasy due to the fact that the AI has information about myself,” “When I use an AI service, I feel like my personal information is being accessed,” “I believe that through the AI, the owner of the AI system can access my personal information,” “Using an AI system can lead to a high possibility of intrusion of my private information,” and “I believe that compared to the advantages of using an AI, the risk of my private information being leaked is higher.” All items were rated on a 7-point Likert scale (Cronbach’s α=.82). The median-split method was used to categorize respondents into high- and low-privacy-concern groups.

Ⅳ. Results

4-1 Effects of perceived humanness

A MANOVA examined the effects of perceived humanness on the chatbot user’s self-disclosure, social presence and intimacy. The multivariate effect of perceived humanness on the three variables as a group was not statistically significant, F (4,641) = 1.29, n.s.

4-2 Effects of need to belong

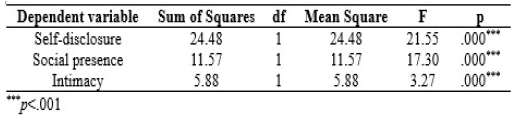

A MANOVA examined the effects of NTB on the chatbot user’s self-disclosure, social presence and intimacy. There was a statistically significant multivariate effect of NTB, F (4,641) = 9.80, p<.001, Wilks’ Λ = 0.94, partial η² = .06. As illustrated in Table 1, all dependent variables were significantly affected by NTB. High-NTB users exhibited greater self-disclosure (M=5.06, SD=1.09; low NTB: M=4.66, SD=1.04), social presence (M=4.19, SD=.89; low NTB: M=3.91, SD=.76), and intimacy (M=3.80, SD=1.49; low NTB: M=3.60, SD=1.24) than their low-NTB counterparts.
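For a between-subject factor with two levels, the effect has a single hypothesis degree of freedom, so s = 1 and the Wilks’ Λ statistics simplify: F = ((1 - Λ)/Λ) x (df2/df1) holds exactly, and multivariate partial η² = 1 - Λ^(1/s) reduces to 1 - Λ. The sketch below takes the reported degrees of freedom (4, 641) as given and assumes s = 1; it only checks that the reported F, Λ and partial η² agree up to rounding. The function names are illustrative.

```python
def wilks_from_f(f: float, df1: float, df2: float) -> float:
    """Wilks' lambda implied by an exact F statistic when s = 1."""
    return 1.0 / (1.0 + f * df1 / df2)


def f_from_wilks(wilks: float, df1: float, df2: float) -> float:
    """Exact F transformation of Wilks' lambda when s = 1."""
    return (1.0 - wilks) / wilks * (df2 / df1)


def partial_eta_squared(wilks: float, s: int = 1) -> float:
    """Multivariate partial eta squared, 1 - lambda ** (1 / s)."""
    return 1.0 - wilks ** (1.0 / s)


# Reported NTB effect: F(4, 641) = 9.80.
lam = wilks_from_f(9.80, 4, 641)
print(round(lam, 2), round(partial_eta_squared(lam), 2))

# Starting from the rounded lambda instead shows how sensitive F is to rounding.
print(round(f_from_wilks(0.94, 4, 641), 2))
```

Back-transforming F = 9.80 gives Λ of roughly 0.942 and partial η² of roughly .058, which round to the reported 0.94 and .06; plugging the rounded Λ = 0.94 back in yields an F of about 10.2, so small mismatches of this kind come from rounding rather than a different analysis.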

4-3 Moderating effects of privacy concerns

To examine the moderating effects of privacy concerns on the relationships between perceived humanness, NTB, and the three communication variables, three separate hierarchical regressions were conducted. Prior to conducting the analyses, the relevant assumptions were tested. The assumption of no singularity was met for the independent variables, and an examination of the correlations among the independent variables showed that none were highly correlated. The collinearity statistics were also within accepted limits (VIF < 5, Tolerance > 0.2).
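Tolerance and VIF are reciprocals (Tolerance = 1/VIF), and the VIF of predictor j equals 1/(1 - R²_j), where R²_j comes from regressing predictor j on the other predictors. An equivalent route, used in this standard-library sketch, reads the VIFs off the diagonal of the inverted predictor correlation matrix. The helper names and example columns are hypothetical; the paper does not report which software produced its collinearity diagnostics.

```python
from statistics import mean, pstdev


def correlation_matrix(columns: list[list[float]]) -> list[list[float]]:
    """Pearson correlations between predictor columns (population moments)."""
    n = len(columns[0])
    means = [mean(c) for c in columns]
    sds = [pstdev(c) for c in columns]

    def corr(a: int, b: int) -> float:
        cov = sum((x - means[a]) * (y - means[b])
                  for x, y in zip(columns[a], columns[b])) / n
        return cov / (sds[a] * sds[b])

    k = len(columns)
    return [[corr(i, j) for j in range(k)] for i in range(k)]


def invert(matrix: list[list[float]]) -> list[list[float]]:
    """Gauss-Jordan elimination with partial pivoting."""
    k = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(k)]
           for i, row in enumerate(matrix)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: perfect collinearity")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[k:] for row in aug]


def vif_and_tolerance(columns: list[list[float]]) -> list[tuple[float, float]]:
    """(VIF, Tolerance) per predictor; VIF_j is the j-th diagonal of R^-1."""
    inv = invert(correlation_matrix(columns))
    return [(inv[j][j], 1.0 / inv[j][j]) for j in range(len(columns))]


# Hypothetical, mutually uncorrelated predictor columns.
x1 = [1, -1, 1, -1]
x2 = [1, 1, -1, -1]
x3 = [1, -1, -1, 1]
for vif, tol in vif_and_tolerance([x1, x2, x3]):
    print(f"VIF={vif:.2f}  Tolerance={tol:.2f}")
```

Uncorrelated predictors give VIF = Tolerance = 1, while two nearly collinear columns drive the VIF far above the cutoff of 5 (equivalently, Tolerance below 0.2).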

With self-disclosure as the dependent variable, the first block of the hierarchical regression yielded a significant regression equation, F (3, 642) = 3.08, p<0.05, with significant effects of age (β=.08, p=.04) and amount of use (β=.08, p=.03). In the second block, a significant regression equation was found, F (5, 640) = 5.49, p<0.001; amount of use (β=.08, p=.04) and NTB (β=.16, p<.001) contributed significantly to model 2. For the third block, a significant regression equation was found, F (8, 637) = 3.49, p<0.001. In model 3, age (β=.07, p=.05) and amount of use (β=.08, p=.03) were significant. However, neither the interactions of privacy concerns with perceived humanness and with NTB nor the three-way interaction among the three variables had a significant effect on self-disclosure.

A hierarchical regression analysis was conducted to examine the relationships among the variables with regard to social presence. The first model was significant, F (3, 642) = 6.21, p<0.001, with two significant control variables, gender (β=.07, p=.05) and age (β=.14, p<.001). For the second block, a significant regression equation was found, F (5, 640) = 8.39, p<0.001; age (β=.14, p<.001) and NTB (β=.18, p<.001) both contributed significantly to model 2. For the third block, a significant regression equation was found, F (8, 637) = 13.81, p<0.001, with gender (β=.08, p=.03), age (β=.13, p<.001) and NTB (β=.37, p<.001) significant. None of the two-way or three-way interaction effects involving privacy concerns was significant.

A three-stage hierarchical regression was conducted with intimacy as the dependent variable. The first block yielded a significant regression equation, F (3, 642) = 9.36, p<0.001, with age (β=.19, p<.001) significant. Model 2 was also significant, F (5, 640) = 8.12, p<0.001, with both age (β=.18, p<.001) and NTB (β=.13, p<.001) contributing significantly. Model 3 was significant as well, F (8, 637) = 5.31, p<0.001. Regarding the moderating effect of privacy concerns on intimacy, the interactions of privacy concerns with perceived humanness and with NTB were not significant, nor was the three-way interaction among privacy concerns, perceived humanness and NTB.
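Each block’s overall F-test has k and N - k - 1 degrees of freedom, where N is the sample size and k the number of predictors entered so far; with N = 646, the degrees of freedom (3, 642), (5, 640) and (8, 637) reported above correspond to k = 3, 5 and 8. Whether a block adds explained variance beyond the previous one is usually tested with the F-change statistic, F = ((R²_full - R²_reduced)/Δk) / ((1 - R²_full)/(N - k_full - 1)). The paper reports each block’s overall F but not its R², so the R² inputs below are hypothetical placeholders, not the study’s results.

```python
N = 646  # sample size reported in the Methods


def model_dfs(n: int, k: int) -> tuple[int, int]:
    """Numerator and denominator df of a regression's overall F-test."""
    return k, n - k - 1


def f_from_r2(r2: float, n: int, k: int) -> float:
    """Overall F for a model with k predictors and coefficient of determination r2."""
    df1, df2 = model_dfs(n, k)
    return (r2 / df1) / ((1 - r2) / df2)


def f_change(r2_reduced: float, r2_full: float,
             k_reduced: int, k_full: int, n: int) -> tuple[float, int, int]:
    """F-test for the R-squared increase when a block of predictors is added."""
    df1 = k_full - k_reduced
    df2 = n - k_full - 1
    f = ((r2_full - r2_reduced) / df1) / ((1 - r2_full) / df2)
    return f, df1, df2


for k in (3, 5, 8):  # block sizes inferred from the reported df
    print(k, model_dfs(N, k))

# Hypothetical R-squared values, purely to show the call.
print(f_change(0.020, 0.045, 3, 5, N))
```

Reporting the R² change and its F for each block alongside the overall model F would let readers see how much variance NTB and the privacy-concern terms add.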

Ⅴ. Discussions

The purpose of this study was to investigate what leads individuals to self-disclose in a chatbot interaction and what the outcomes of such an interaction are; specifically, whether self-disclosure, social presence, and intimacy differ when individuals think they are interacting with a human rather than an AI.

Regarding the effect of perceived humanness, whether users perceived the chatbot to be operated by artificial intelligence or by a human had no significant effect on self-disclosure, social presence, or intimacy. This finding aligns well with the CASA paradigm, according to which media users do not clearly distinguish their computer counterparts from human counterparts[5][7]. Kim and Sundar[20] explained that “individuals will deny their mindless responses to computer interactions,” implying that differences in perceived humanness need not produce significant differences in outcomes. This study’s results also conform to the findings of Nowak and Biocca[4], in which no significant difference in feelings of social presence or intimacy was found when the interlocutor was perceived as human or AI.

In addition, this study examined the effects of a user trait, NTB. NTB was confirmed to be a significant predictor of self-disclosure, social presence, and intimacy: high-NTB users disclosed more willingly about themselves, experienced greater social presence, and rated the interaction as significantly more intimate than low-NTB users. The results show that user characteristics can bring about meaningful differences in the experience of AI-mediated communication. This finding fits with the results of previous studies; Kim and Sundar[20] also illustrated the importance of differences in NTB in the context of human-robot interaction (HRI), where the user’s NTB level enhanced the interaction experience.

Interestingly, the analyses yielded no significant moderating effect of privacy concerns. Since much AI-mediated communication requires voluntary sharing of personal information with the communication system, it was postulated that users would become more concerned about their privacy once they perceived the interaction to be more intimate. However, the absence of any significant interaction between privacy concerns and the major variables instead conforms to the pattern suggested by the ‘privacy paradox’[25]. The privacy paradox refers to the phenomenon whereby, although one’s privacy concerns may be high, one’s actual self-disclosing behavior remains unaffected. One possible explanation is that individuals with higher privacy concerns tend to distance themselves from the interlocutor, and therefore feel less emotionally close to whomever they are interacting with, but still disclose information. The results could also be explained by Metzger’s[26] view of online privacy concerns. Metzger argues that disclosing personal information online has become a norm or requirement for accessing online services such as social media or online purchases. In this setting, individuals are pre-conditioned to share personal information online without first questioning why they have to provide it. Research on privacy concerns must take into account that self-disclosing information online to strangers has been normalized, which could explain the presence of a privacy paradox.
Future research should explore the different contexts in which users’ concerns over their privacy arise, as the issue of privacy protection is increasingly salient amid the rapid deployment of AI-based communication across most media platforms.

This study has a few limitations. First, the experiment’s cross-sectional nature limits the ecological validity of the results. In real life, AI users often form a long-term relationship with a chatbot, and further research is warranted to examine the effect of familiarization over time. Second, this study used a text-based chatbot only, whereas voice-based AI services are widely popular. Voice is a stronger anthropomorphic cue that could further blur the boundary between AI communication systems and human interlocutors. Future research should investigate the possible effect of such human-like cues to determine whether a stronger effect can be found. Taken together, in an age of artificial intelligence and a developing field of human-AI interaction, this research brings to light that some individuals are more prone than others to interact with AI agents. Although a future in which individuals become close friends or partners with their AI has yet to come, AI in its various forms is undeniably part of our future. No one can predict how far technological advances will push the boundaries between humans and AI agents in the years to come.

References

- D. Griol, J. Carbo, and J. M. Molina, “An automatic dialog simulation technique to develop and evaluate interactive conversational agents”, Applied Artificial Intelligence, Vol.27, No.9, pp.278-297, 2013.

[https://doi.org/10.1080/08839514.2013.835230]

- L. Klopfenstein, S. Delpriori, S. Malatini, and A. Bogliolo, “The rise of bots: A survey of conversational interfaces, patterns, and paradigms”, in Proceedings of the 2017 Conference on Designing Interactive Systems, pp.555-565, 2017.

[https://doi.org/10.1145/3064663.3064672]

- J. S. Kennedy, The New Anthropomorphism, Cambridge University Press: NY, 1992.

[https://doi.org/10.1017/CBO9780511623455]

- K. Nowak, and F. Biocca, “The effect of the agency and anthropomorphism on users’ sense of telepresence, co-presence and social presence in virtual environments”, Presence: Tele-operators and Virtual Environments, Vol.12, No.5, pp.481-494, 2003.

[https://doi.org/10.1162/105474603322761289]

- B. Reeves, and C. Nass, The media equation: How people treat computers, television, and new media like real people and places, Cambridge University Press: NY, 1996.

- S. Sundar, and C. Nass, “Source orientation in human computer interaction: Programmer, networker, or independent social actor?”, Communication Research, Vol.27, pp.683-703, 2000.

[https://doi.org/10.1177/009365000027006001]

- C. Nass, and Y. Moon, “Machines and mindlessness: Social responses to computers”, Journal of Social Issues, Vol.56, No.1, pp.81-103, 2000.

[https://doi.org/10.1111/0022-4537.00153]

- J. Hill, R. Ford, and I. Farreras, “Real conversation with artificial intelligence: A comparison between human-human online conversations and human-chatbot conversations”, Computers in Human Behavior, Vol.49, pp.245-250, 2015.

[https://doi.org/10.1016/j.chb.2015.02.026]

- I. Altman, and D. Taylor, Social penetration: The development of interpersonal relationships, Holt, Rinehart & Winston: NY, 1973.

- M. Ainsworth, “Attachments beyond infancy”, American Psychologist, Vol.44, No.4, pp.709-716, 1989.

[https://doi.org/10.1037/0003-066X.44.4.709]

- A. Ho, J. Hancock, and A. Miner, “Psychological, relational, and emotional effects of self-disclosure with a chatbot”, Journal of Communication, Vol.68, No.4, pp.712-733, 2018.

[https://doi.org/10.1093/joc/jqy026]

- C. Oh, J. Bailenson, and G. Welch, “A systematic review of social presence: Definition, antecedents, and implications”, Frontiers in Robotics and AI.

- J. Appel, N. Kramer, and J. Gratch, “Does humanity matter? Analyzing the importance of social cues and perceived agency of a computer system for the emergence of social reactions during human-computer interaction”, Advances in Human-Computer Interaction.

- J. Blascovich, J. Loomis, A. Beall, K. Swinth, C. Hoyt, and J. Bailenson, “Immersive virtual environment technology as a methodological tool for social psychology”, Psychological Inquiry, Vol.13, No.2, pp.103-124, 2002.

[https://doi.org/10.1207/S15327965PLI1302_01]

- L. Jiang, N. Bazarova, and J. Hancock, “The disclosure-intimacy link in computer-mediated communication: An attributional extension of the hyper-personal model”, Human Communication Research, Vol.37, pp.58-77, 2011.

[https://doi.org/10.1111/j.1468-2958.2010.01393.x]

- R. F. Baumeister, and M. R. Leary, “The need to belong: Desire for interpersonal attachments as a fundamental human motivation”, Psychological Bulletin, Vol.117, No.3, pp.497-529, 1995.

[https://doi.org/10.1037/0033-2909.117.3.497]

- H. Lin, “Determinants of successful virtual communities: Contributions from system characteristics and social factors”, Information & Management, Vol.45, No.8, pp.522-527, 2008.

[https://doi.org/10.1016/j.im.2008.08.002]

- H. Lin, W. Fan, and P. Chau, “Determinants of users' continuance of social networking sites: A self-regulation perspective”, Information & Management, Vol.51, No.5, pp.595-603, 2014.

[https://doi.org/10.1016/j.im.2014.03.010]

- L. Zhao, Y. Lu, B. Wang, P. Chau, and L. Zhang, “Cultivating the sense of belonging and motivating user participation in virtual communities: A social capital perspective”, International Journal of Information Management, Vol.32, No.6, pp.574-588, 2012.

[https://doi.org/10.1016/j.ijinfomgt.2012.02.006]

- Y. Kim, and S. Sundar, “Anthropomorphism of computers: Is it mindful or mindless?”, Computers in Human Behavior, Vol.28, pp.241-250, 2012.

[https://doi.org/10.1016/j.chb.2011.09.006]

- M. Taddicken, “The ‘privacy paradox’ in the social web: the impact of privacy concerns, individual characteristics, and the perceived social relevance on different forms of self-disclosure”, Journal of Computer-Mediated Communication, Vol.19, pp.248-273, 2014.

[https://doi.org/10.1111/jcc4.12052]

- T. Dinev, and P. Hart, “An extended privacy calculus model for e-commerce transactions”, Information Systems Research, Vol.17, No.1, pp.61-80, 2006.

[https://doi.org/10.1287/isre.1060.0080]

- E. Berscheid, M. Snyder, and A. Omoto, “The relationship closeness inventory: Assessing the closeness of interpersonal relationships”, Journal of Personality and Social Psychology, Vol.57, No.5, pp.792-807, 1989.

[https://doi.org/10.1037/0022-3514.57.5.792]

- M. Leary, “Construct validity of the need to belong scale: Mapping the nomological network”, Journal of Personality Assessment, 2013.

[https://doi.org/10.1080/00223891.2013.819511]

- C. Hallam, and G. Zanella, “Online self-disclosure: the privacy paradox explained as a temporally discounted balance between concerns and rewards”, Computers in Human Behavior, Vol.68, pp.217-227, 2017.

[https://doi.org/10.1016/j.chb.2016.11.033]

- M. Metzger, “Comparative optimism about privacy risks on Facebook”, Journal of Communication, Vol.67, No.2, pp.203-232, 2017.

[https://doi.org/10.1111/jcom.12290]

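2016: Institut national des langues et civilisations orientales (B.A.)

2019: Ewha Womans University (M.A.)

※ Research interests: media psychology, media users, HCI

2000: Ewha Womans University (M.A.)

2005: Stanford University (Ph.D. Communication)

2010-present: Professor, Division of Communication and Media, Ewha Womans University

※ Research interests: media psychology, media users, HCI, social media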