Study on Multimodal Tactile Interface using Wearable Technology for Virtual Reality Environment

Copyright ⓒ 2020 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Various wearable devices are being developed owing to the growth of the virtual reality market. However, the interfaces of existing wearable devices offer only limited functions such as vibration. With the development of interactive content, there is a growing need for multimodal interfaces that let users participate and communicate rather than engage in one-way interaction. 'SensVR', developed for this study, is a wearable multimodal tactile interface for interactive VR content. It is worn on both hands and aims to enhance users' immersion and emotional responses by delivering vibration, wind, heat, and electrical stimulation in step with the story in a virtual reality environment. We applied the multimodal interface to a VR animation and conducted in-depth interviews to verify its effects.


Keywords:

Wearable Technology, Virtual Reality, Multimodal Interface, Tactile, Interaction

Ⅰ. Introduction

Cutting-edge technologies such as artificial intelligence, virtual reality, and augmented reality have emerged with the advent of the Fourth Industrial Revolution. These technologies have been combined and used in various fields. In particular, virtual reality has been studied from various directions for decades. Early virtual reality technologies focused on displaying objects on 2D monitors or screens of limited size. To maximize visual effects and deliver immersion to users, however, tiled displays and CAVE systems were developed that deliver high-resolution visual information and can fill users' viewing angles. Perception and sensitivity are key factors in virtual reality, and research continues, through the development and convergence of various technologies and peripheral devices, into techniques that can further increase the degree of immersion [1].

Users must manipulate virtual objects to gain a more detailed understanding of a target object. Mice and keyboards were mainly used to convey a user's commands to a virtual environment, but they were difficult to operate intuitively because they were not based on the user's natural motion. To compensate for this, intuitive manipulators based on user motion were developed. Sugarman et al. developed motion sensing systems based on user motion [2]. Microsoft's Kinect and Nintendo's Wii also recognize user motion and deliver it to a virtual environment [3, 4]. However, these systems were frequently insufficient for giving users a sense of presence in the VR environment because feedback in response to the user's input was absent or extremely weak.

In recent years, Oculus Quest and HTC Vive have improved resolution and viewing angles to enhance users' audiovisual immersion and have addressed the problem of latency. However, they still provide a controller-type VR interface that lets users feel only vibrations in their hands.

Sensory information is a natural means by which humans express themselves and perceive the world. In addition, tactile sensation can trigger the recollection of sensations and is closely associated with memory. Thus, it is necessary to develop a sensory interface that enables humans to interact naturally in a virtual environment. However, because the sensory feedback of current VR (Virtual Reality) interfaces is limited, users cannot fully immerse themselves in the VR environment. In this study, we present a wearable multimodal interface that is worn on the hands of users of HMD (Head Mounted Display)-based virtual spaces, and we apply the interface to a virtual reality animation. Our aim is to expand users' sensory feedback and thereby strengthen their immersion in the VR environment.

Ⅱ. Related Works

Multimodal interfaces are being investigated by MIT Media Lab, Microsoft, HP, and Intel, with research focusing on voice recognition, facial recognition, haptic interfaces, body tracking, and data glove-based gesture recognition. Dizio used skin stimulation in VR environments to guide pointing during manipulation, which resulted in accurate pointing at VR objects [5]. In addition, interactions using vibrotactile feedback on the skin were implemented by Klatzky and Lederman [6]. Hauber implemented a 6DOF tactile pointer that used a buzzer and developed a second version with a bending actuator based on piezoelectric elements [7].

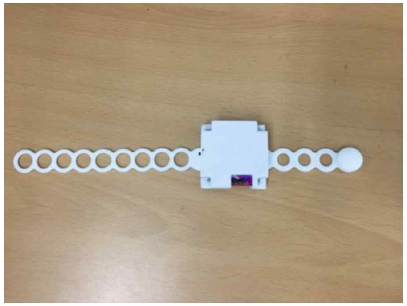

In addition, glove-type tactile devices with vibrators worn on users' hands, as shown in Figure 1, were investigated to determine the performance and configuration (deployment size and spacing) of the equipment for specific types of tactile capability, and to understand how users prefer to use such devices. However, the multimodal interfaces that use existing tactile information were designed for screen-based interfaces, not virtual reality environments. In addition, they use preprogrammed haptic information rather than responding to users' real-time inputs. Furthermore, most haptic interfaces focus only on vibration effects. Therefore, a new haptic interface is required that can provide various types of sensory feedback immediately in response to users' real-time interactions in current immersive virtual reality environments.

Ⅲ. Multimodal Haptic Interface

Virtual reality is a technique that addresses not only vision but also hearing and touch. Immersive virtual reality interfaces, in particular, use special devices such as HMDs and data headsets to let users fully immerse themselves in a computer-generated virtual world, where they experience and interactively communicate with one another. Through a variety of interactions in the virtual space, users feel as if they were actually there. However, current virtual reality interfaces do not yet convey a comparable sense of reality to users. Most commercial virtual reality systems focus on input, i.e., recognizing the user's gestures, and on the corresponding audiovisual information, while interfaces for output that provide the user with an extended range of sensations remain scarce. Even high-end virtual reality systems, such as Oculus and Vive, accompany audiovisual information only with haptic responses such as vibration. To compensate for this lack of sensory feedback in the virtual reality space, this study presents a multimodal interface that focuses on various kinds of haptic feedback.

Multimodal refers to an interaction created by an interface that engages different senses for smooth communication between humans and machines [8]. A multimodal interface is a cognition-based technology that interprets and encodes information about natural human behaviors such as gestures, gaze, hand movements, behavioral patterns, voice, and physical position. It allows multiple modalities to be used for input and output simultaneously during human-computer interaction, and it operates through the combination of modalities and the integrated analysis of input signals. In other words, a multimodal interface can perform input and output functions simultaneously. Its advantages are that users feel more immersed because they rapidly obtain feedback for their inputs [9], and that incorrect behavior or errors can be reduced efficiently through learning [10]. By extending users' sensory feedback in the virtual reality space, multimodal interfaces enable users to become more immersed in, and emotionally assimilated into, the virtual space.

3-1 Wearable multimodal VR interface ‘SensVR’

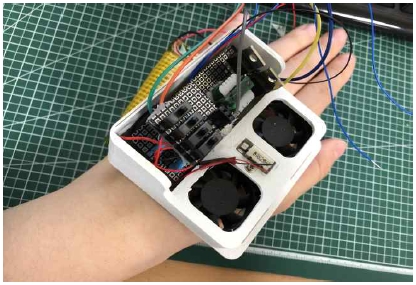

The multimodal interface presented in this study is divided into two parts. The user's right hand provides gesture input, allowing free gesture interaction in the virtual reality environment, and the left hand receives the output, i.e., various haptic responses that depend on the input. The wearable band for the right hand is equipped with an inertial measurement unit (IMU) sensor, which combines an acceleration sensor and an inclination sensor, so users can interact freely with the band on the wrist and without holding a controller. Gesture information entered through this band is linked to the VR animation so that tactile information matching the gesture is felt instantly on the left hand.
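As an illustration of how a wrist-worn acceleration sensor could recognize a simple raised-arm gesture, the following minimal sketch estimates the tilt of the band from a static accelerometer reading and compares it with a threshold. The paper does not describe its recognition method; the axis convention (x-axis along the forearm), the 60-degree threshold, and the function names are all assumptions made for this example.

```python
import math

# Hypothetical threshold; the study does not report one.
REACH_UP_THRESHOLD_DEG = 60.0


def pitch_degrees(ax, ay, az):
    """Tilt of the band's x-axis above the horizontal plane, in degrees.

    Assumes a static reading in units of g, so that the measured
    acceleration is dominated by gravity.
    """
    return math.degrees(math.atan2(ax, math.hypot(ay, az)))


def is_reaching_up(ax, ay, az, threshold_deg=REACH_UP_THRESHOLD_DEG):
    """True when the forearm points upward more steeply than the threshold."""
    return pitch_degrees(ax, ay, az) >= threshold_deg
```

A real recognizer would also need to filter motion-induced acceleration and handle dynamic gestures, which a single static threshold cannot capture.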

The wearable band for the left hand is 'Emogle', a sensory interface developed by the author in a prior study, which provides users with four types of tactile information: vibration, thermal sensation, wind, and electrical stimulation. Whereas the prior study developed only the sensory interface itself, this study tested the effects of gesture interaction by letting users instantly feel interactions with objects or characters in the VR environment through extended tactile sensations on their hands.

3-2 Haptic Interface for Virtual Reality

Humans live under constant stimulation from the outside. These stimuli are received by the body largely through the five sensory organs. Vision, hearing, touch, taste, and smell are generally referred to as the five human senses. In the dictionary sense, sensation means the physical ability to see, smell, taste, and feel through the skin. That is, the senses are closely tied to bodily sensory organs such as the ears, nose, and eyes. The five human senses themselves remain unchanged, but the evolution of media's sensory apparatus can provide users with new sensory experiences. Mechanical devices such as computers, and the interfaces for communicating with them, are evolving into various forms with technological advances. Recently, they have developed into more convenient and realistic interfaces that draw on the user's visual, auditory, tactile, olfactory, and gustatory senses. Gibson likewise regarded vision, touch, hearing, smell, and taste as sensory systems of the body [11]. Stimuli received through each of these organs cause a variety of physical reactions, called sensations or feelings. For example, perceiving something as soft, warm, spicy, or noisy through the eyes, ears, nose, tongue, or skin is a sensation. Sensation from the sensory organs soon turns into perception [12]. Perception also serves as feedback: once the brain has identified a stimulus, perception indicates how to act in response.

Media technology, which had focused on visual elements since Gutenberg's invention of printing, evolved toward audiovisual synesthesia with the arrival of the 20th century. In the process of convergence and divergence, as the boundaries between media disappeared and services were consolidated and distributed, users came to embrace media as part of their experience. Seeing and listening alone cannot be linked to everyday experience, and the expectation of interacting through different kinds of touch and feel has led to the emergence of touch media. In other words, touch media is a media technology that emerged from the emotional convergence of users' media behavior as devices and services were integrated through media convergence.

Tactile functions are currently being developed in many areas. Among the five senses, touch has a distinct advantage. Within long-term memory (memory stored for long periods for later reuse), visual and auditory information enters declarative memory, whereas tactile behavior enters procedural memory, which governs muscle movement. For this reason, unlike existing multimodal interfaces built around the user's movement and voice, the multimodal interface presented in this study was developed to expand the senses around the user's tactile information.

Ⅳ. Interactive VR Animation 'Noah'

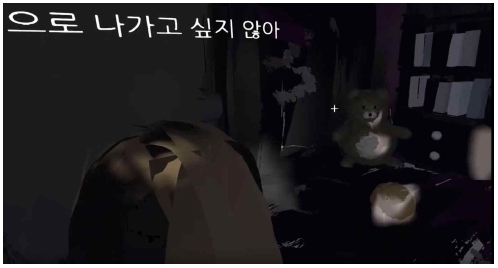

The interactive VR animation 'Noah' lets users interact with its character while wearing the multimodal interface 'SensVR'. A child named Noah is grieving over the loss of a dear friend in the real world, and the user acts as a companion who encourages Noah, takes his hand, and guides him back into the bright world. In this VR animation, the user helps Noah avoid the dangers that approach him and shares amazing experiences with him in the virtual world, and the tactile interface developed in this study is used to make the emotional immersion more effective. The animation features four types of tactile feedback, which the user experiences in the following three interaction branches.

- 1. Take Noah's hand and lead him out of the dark room. Input: Hold Noah's hand. Feedback: The user's left hand becomes warm.

- 2. Chase the bat rushing toward Noah. Input: Wave the hands. Feedback: The user feels weak electrical impulses and wind on the left hand.

- 3. Play fireworks with Noah. Input: Reach out to the sky and shoot up flames. Feedback: Vibrations are felt when the flame is extinguished.

As the user guides Noah along the journey, they can gesture in three interactions, i.e., holding Noah's hand, chasing a bat, and playing fireworks with Noah, through the wearable band worn on the right hand. When a gesture is performed successfully, the user instantly experiences tactile feedback in the form of warmth, electrical stimulation, wind, or vibration.
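The pairing of the three interactions with their feedback can be written down as a small lookup table. The sketch below is only an illustration of that pairing; the enum names, the `feedback_for` helper, and the `succeeded` flag are hypothetical and do not come from the authors' Unity implementation.

```python
from enum import Enum


class Interaction(Enum):
    HOLD_HAND = "hold Noah's hand"
    CHASE_BAT = "chase the bat"
    PLAY_FIREWORKS = "play fireworks"


class Channel(Enum):
    VIBRATION = "vibration"
    HEAT = "heat"
    WIND = "wind"
    ELECTRICAL = "electrical stimulation"


# Interaction -> left-hand feedback channels, following the three branches.
FEEDBACK = {
    Interaction.HOLD_HAND: (Channel.HEAT,),
    Interaction.CHASE_BAT: (Channel.ELECTRICAL, Channel.WIND),
    Interaction.PLAY_FIREWORKS: (Channel.VIBRATION,),
}


def feedback_for(interaction, succeeded=True):
    """Return the channels to activate; nothing fires if the gesture failed."""
    if not succeeded:
        return ()
    return FEEDBACK[interaction]
```

Taken together, the three entries cover all four feedback types that the left-hand band provides.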

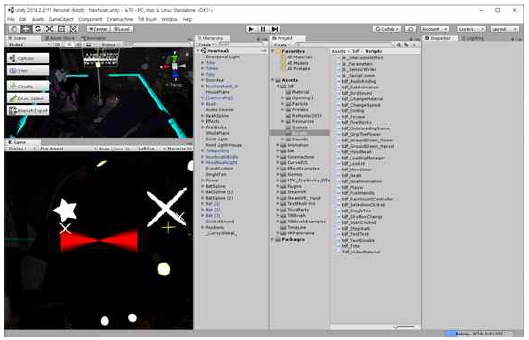

The interactive VR animation 'Noah' was produced using the Unity game engine and Tilt Brush. First, the virtual environment and characters such as Noah and the bats were drawn in Tilt Brush, which allows painting in 3D within a virtual reality environment, to build the virtual space. The space and characters were then imported into Unity and programmed to interact with the wearable bands worn by the user. With the bands on, the user's right hand is free to perform gesture interactions without holding a controller, and the left hand immediately receives the tactile stimuli that are pre-programmed for each interaction.

Ⅴ. User Study

Ten women and ten men participated in the user study. All participants had experienced virtual reality games or movies more than once and understood the virtual reality interface. All of the male users had experienced tactile interfaces through different games, while only three of the female users had experienced tactile interfaces through virtual reality games. We fully explained the tactile feedback of 'SensVR' prior to the experiment so that the participants would not be surprised by it. In the experiment, the participants played the VR animation for approximately 5 to 7 minutes. After the experiment, we conducted an in-depth interview and a survey to evaluate user response. The survey consisted of the following three questions, answered on a Likert scale:

1) Did the effect of immersion increase when you felt the tactile response?

2) Did the tactile response go well with the story?

3) Was the tactile response natural?

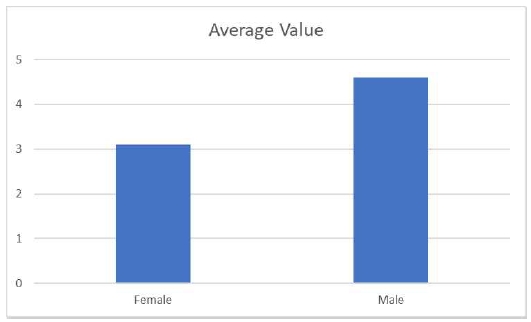

Across the three questions, the 20 users gave an average satisfaction score of 3.85, a generally positive assessment of the tactile feedback. Interestingly, the average was 4.6 for male users compared to 3.1 for female users, indicating that male users responded more positively than female users to the extended sensory response. In-depth interviews also yielded positive comments such as, 'At first I was a little surprised, but I actually felt good about interacting.' and 'I was surprised because I felt real when I punched out the bats that I was attracted to.' In other words, the tactile responses to users' gestures in the virtual reality space were evaluated as positive factors, and users' immersion increased.
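The reported averages are mutually consistent, as a quick check shows: (10 × 4.6 + 10 × 3.1) / 20 = 3.85. The sketch below assumes, without confirmation from the paper, that each group mean is taken over ten participants and that the overall score is the participant-weighted mean; `pooled_mean` is a hypothetical helper written for this check.

```python
def pooled_mean(groups):
    """Weighted mean of group means; groups is a list of (size, mean) pairs."""
    total = sum(size * mean for size, mean in groups)
    count = sum(size for size, _ in groups)
    return total / count


# (group size, mean satisfaction score) for male and female participants
overall = pooled_mean([(10, 4.6), (10, 3.1)])
```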

Overall, both men and women responded that they were generally satisfied with performing gesture interactions and receiving the corresponding tactile responses while playing 'Noah.' Notably, men showed a more positive response to the tactile interface than women, as shown in Fig. 8. One might infer that this was because the male users were already familiar with tactile interfaces through other games, as the pre-interview indicated. However, further study of how gender affects emotional responses to tactile interfaces will be necessary.

Ⅵ. Conclusion

We investigated a multimodal interface using wearable technology to extend the senses provided to users in an HMD-based immersive virtual reality environment. Users in virtual environments must interact with virtual characters or objects in a virtual space, and it is difficult to increase immersion in these interactions through current tactile feedback such as vibration alone. In this study, we presented 'SensVR', a wearable virtual reality interface that extends the user's sense of touch using 'Emogle' on the left hand. 'SensVR' lets users give input through natural gestures and immediately receive tactile responses to them. The experiment showed that users who experienced the extended multimodal tactile sensations felt immersed and responded positively. In future work, experiments will be carried out with a wider variety of content, and a comparative analysis with virtual reality interfaces that do not involve tactile sensations will be performed. Although this study focused on tactile feedback in multimodal interaction in virtual reality environments, expansion into other senses such as smell and taste can be examined in the future. Virtual reality offers users deep immersion in an environment, and if such studies continue, it will be a promising technology for areas such as education, tourism, and military and medical simulation.

Acknowledgments

This research was supported by the Ministry of Science and ICT, Korea, under the Information Technology Research Center support program (IITP-2019-20150-00363) supervised by the Institute for Information & Communications Technology Planning & Evaluation. This research was supported by the National Research Foundation of Korea (NRF-2017R1D1A1B03027954).

References

- Lee SH, Song EJ, A Study on Application of Virtual Augmented Reality Technology for Rescue in Case of Fire Disaster. Journal of Digital Contents Society, 20(1), 60, 2019. [https://doi.org/10.9728/dcs.2019.20.1.59]

- Sugarman H, Weisel-Eichler A, Burstin A, Brown R, Use of the Wii Fit system for the treatment of balance problems in the elderly: A feasibility study. Virtual Rehabilitation International Conference, pp. 111–116, 2009. [https://doi.org/10.1109/ICVR.2009.5174215]

- Microsoft, ESPN Winter X Games. http://www.microsoft.com. Accessed 2019.

- Nintendo, WII Remote. http://www.nintendo.com. Accessed 23 April 2019.

- Klatzky R, Lederman S, Touch. In: Weiner IB (ed) Handbook of psychology, Wiley, 2003. [https://doi.org/10.1002/0471264385.wei0406]

- Buchmann V, Violich S, Billinghurst M, Cockburn A, FingARtips: gesture based direct manipulation in augmented reality. Proceedings of the 2nd International Conference on Computer Graphics and Interactive Techniques in Australasia and SouthEast Asia, 2004. [https://doi.org/10.1145/988834.988871]

- Dizio P, Proprioceptive adaptation and aftereffects. In: Hale KS, Stanney KM (eds) Handbook of virtual environments, 2nd. Taylor & Francis, 2002.

- Bourguet ML, Designing and prototyping multimodal commands. INTERACT, pp. 717–720, 2003.

- Kim N, Kim GJ, Park CM, Lee I, Lim SH, Multimodal menu presentation and selection in immersive virtual environments. Proceedings of IEEE Virtual Reality Conference 281, 2002.

- Oviatt S, Mutual disambiguation of recognition errors in a multimodal architecture. Proceedings of the SIGCHI conference on Human Factors in Computing Systems, pp. 576–583, 1999. [https://doi.org/10.1145/302979.303163]

- Gibson JJ, The senses considered as perceptual systems. Houghton Mifflin Co, Boston & New York, 1966.

- Kim D, Boradkar P, Sensibility Design. http://new.idsa.org/webmodules/articles/articlefiles/ed_conference02/22.pdf, 2002. Accessed 1 May 2019.

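2004: Pratt Institute, Computer Graphics (Bachelor's)

2006: New York University, ITP (Master's)

2013: Korea Advanced Institute of Science and Technology (Ph.D. in Engineering, Interaction Design)

2012 to present: Associate Professor, Graduate School of Cinematic Content, Dankook University

Research interests: virtual reality (VR), augmented reality (AR), interaction design, etc.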