OpenDKP: Designing an Open Data Knowledge Platform for Disaster Risk Reduction and Management

Copyright ⓒ 2019 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

With respect to the phases of the entire disaster management cycle, such as prevention, mitigation, preparedness, response, and recovery, data interconnectivity, availability, and sharing with multidisciplinary and cross-domain approaches play a crucial role in disaster risk reduction and management. In this paper, an open data knowledge platform, which is a knowledge sharing platform for disaster data collection, management, distribution, and analysis, designed for use in interdisciplinary studies in the field of disaster risk reduction and management, is presented. The proposed system can play an important role as a knowledge hub for supporting the development of disaster response technology as well as for risk mitigation and disaster response, disaster recovery, and risk assessment studies. In this study, the architectural design of an open disaster data platform that consists of a set of modular platforms, namely an open data knowledge portal, an API gateway, a disaster knowledge-based platform, and a big data analytics platform, is proposed. The proposed platform will enable the researchers and decision makers involved in the analysis of disaster risk to integrate and collaborate on the collected disaster data and the corresponding analyses, which will allow for the highest levels of disaster risk reduction and management.

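Abstract (Korean)

In disaster risk reduction and management, open data plays a very important role across the whole disaster risk management process, including prevention, mitigation, preparedness, response, and recovery, and in linked analyses with other domains. This study proposes a knowledge sharing platform for the collection, management, distribution, and analysis of disaster data, designed for the field of disaster risk reduction and management. It introduces the architectural design of an open data platform composed of modular platforms, namely an open data knowledge portal, an API gateway, a disaster knowledge-based platform, and a big data analytics platform. The proposed system can support the development of disaster response technology and can also play an important role as a knowledge hub for research on disaster risk mitigation, disaster response, disaster recovery, and risk assessment.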

Keywords:

Big data analytics, Knowledge-based system, Modular platform architecture, Open data, Disaster risk reduction

Keywords (Korean):

Big data analytics, Knowledge-based system, Modular platform, Open data, Disaster risk reduction

Ⅰ. Introduction

A disaster is a serious disruption that results in a wide range of human, material, economic, and environmental loss that exceeds the capability of a community or society [1, 2]. The severity of a disaster depends on the extent of risk affecting the society and the environment.

Disaster risk reduction (DRR) is a systematic effort to identify, analyze, and reduce the risks of disaster [3]. The goal of DRR is to reduce the causal factors of disasters by lessening exposure to risk, reducing the vulnerability of people and property, managing land and the environment, and improving preparedness for hazards [4]. With an increasing number of risks worldwide, there has been growing interest in international cooperation on disaster risk reduction.

The recently adopted Sendai Framework for Disaster Risk Reduction 2015–2030 (SFDRR), the first global policy framework of the United Nations’ post-2015 agenda, addresses knowledge-related issues and provides an opportunity to highlight the critical role of knowledge in disaster risk reduction [5].

The framework aims to substantially reduce disaster risk and losses in lives, livelihoods, and health, as well as in the economic, physical, social, cultural, and environmental assets of communities and countries. The SFDRR emphasizes the role of science and technology in realizing disaster mitigation and building resilience [6]. It also requires reliable and interconnected multidisciplinary knowledge to support informed decision making and coordinated action.

Thus far, most of the studies dealing with disaster data have focused on geospatial data and information for disaster management [7–14]. Geospatial information is essential for disaster management, and its value increases when integrated with other sources [8].

In [10], the researchers introduced a GIS-based disaster management platform that supports dynamic hazard models and GUIs to provide disaster management tools for emergency response. In [11], the researchers proposed a framework of spatial planning and spatial data infrastructure (SDI) for the exchange and sharing of spatial data among the stakeholders by using a spatial data-based platform for disaster risk reduction.

The sharing of spatial data contributes significantly to disaster risk reduction by maximizing the use of spatial analysis among the stakeholders who rely on spatial data for disaster risk analysis [10–14].

In [14], the researchers introduced a public platform for geospatial data sharing for disaster risk management. Open DRI aims to reduce the damage caused by disasters by providing decision makers with better information and tools to help them make decisions.

A previous study dealing with a data exchange platform presented software procedures for exchanging data between different systems for bridge disaster prevention [15]. The data exchange platform was used to obtain the disaster information for all the agencies, which served as the basis for the hazard prevention system. In [16], the scholars introduced the role of information and knowledge management in disaster management in terms of the relevance of multidisciplinary and cross-domain approaches. Further, they noted that data quality is a very significant factor from the operational perspective of disaster management. For optimized decision making in disaster management, it is necessary to build a knowledge-based application system along with technical advancement. In addition, in [17], the researchers presented a review of the application of knowledge-driven systems in support of an emergency management system and emphasized the importance of knowledge management in disaster management.

Therefore, this study presents the design of an open data knowledge platform (OpenDKP) to manage disaster risks by generating, merging, and sharing the open knowledge. The purpose of the proposed system is to function as a collaborator and assistant by using vast amounts of data in the fields of hazard and disaster research. The OpenDKP system can also play a significant role in supporting the development of a disaster response technology as a knowledge-based platform, as well as for risk and disaster response, disaster recovery, and risk assessment research.

In addition, the proposed system helps researchers and decision makers to better comprehend the difficulties of acquiring disaster data, including the Internet-of-Things (IoT) sensor data, open data, and scientific simulation results, and provides guidance on data analysis and the challenges faced by the nationwide “Open Science” movements. Such movements pursue other open knowledge movements, such as open source, open hardware, open content, and open access [18–24], as well as open data, which are based on the idea that some data should be freely available to every researcher to investigate and republish according to the goal of their research without any restrictions.

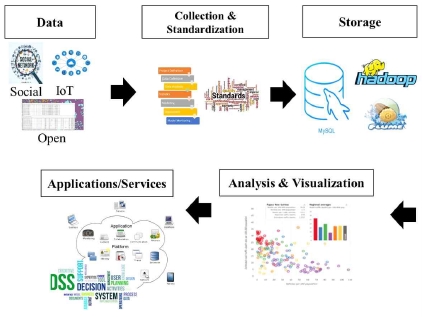

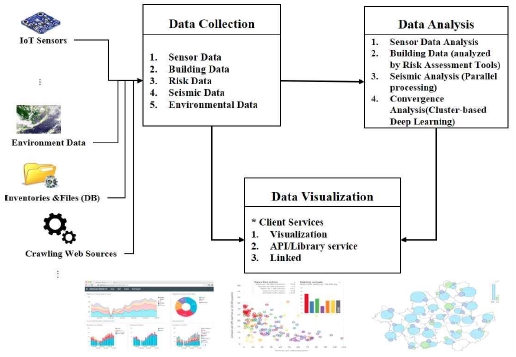

In particular, regarding the phases of the whole disaster management cycle, such as prevention, mitigation, preparedness, response, and recovery, data interconnectivity, availability and sharing with multidisciplinary and cross-domain approaches play a crucial role in disaster risk reduction and management. Therefore, the proposed OpenDKP not only supports the sharing of data, which must include machine-readable versions of datasets containing IoT sensor data, public open data, scientific simulation outputs, and related experimental data in order to make disaster-related information available, but also provides analytical results for resolving complex issues arising from large amounts of uncertain data. The objective of the proposed platform is to provide integrated analysis of disaster risk reduction through the big data analysis process as illustrated in Figure 1.

Various factors including the support of data standardization, guarantee of data quality, scalability of various application services and systems, provision of big data-based analysis, and the sharing of interconnected multidisciplinary data among the stakeholders should be considered with an appropriately designed knowledge-based system for disaster risk reduction and management. Consequently, this study presents an open disaster risk knowledge-based platform, which is an open data platform that enables integrated data storage, management, re-use, sharing, and analysis by designing a modular platform that successfully provides a variety of researchers and professionals with various degrees of hazard and disaster knowledge.

The rest of this paper is organized as follows. Section 2 introduces the background studies. Section 3 presents the overall system architecture and the functions of the open data knowledge platform. Section 4 discusses some of the main functions related to the big data analytics platform, including both big data processing techniques and knowledge-based curation services. Finally, Section 5 summarizes the study and draws conclusions.

Ⅱ. Related Work

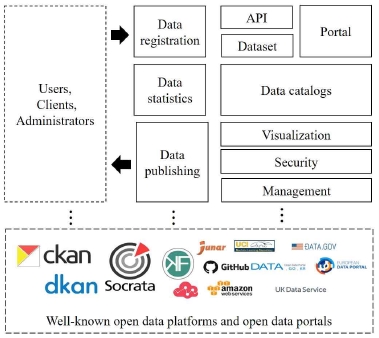

In this section, background studies along with a brief description of open data platforms and applications related to disaster risk management are presented. Figure 2 shows the various functionalities of an open data platform, which supports open data management, data utilization, and various types of application services. There are many types of open data platforms that offer considerable benefits in terms of big data analytics and management. In particular, disaster and hazard analytical platforms that apply various types of datasets are introduced as an application case study.

2-1 Open Data Platform

CKAN [25] and Socrata [26] are representative platforms for sharing the data owned by government and public agencies; they can serve as a catalogue and repository for publishing various types of datasets, as shown in Figure 2. DKAN is an open source open data platform that allows organizations and individuals to publish and use structured information for free [27]. CKAN, which was developed by the Open Knowledge Foundation (OKF), is widely used in more than 40 countries, such as the United Kingdom, the United States, and Canada [14]. Furthermore, additional functions and extended features have been implemented for these open platforms, allowing them to support various data convergence services, including visualization, analysis, and API extraction.

DSpace [28, 29] is another open source software data repository platform and is typically used for scholarly content. The DSpace repository software provides a digital archiving system that focuses on the storage, access, distribution, and preservation of digital content, including text, images, videos, audio, and data [29]. It has been widely used and applied in various fields in more than 1000 institutions, including universities, research institutes, government agencies, and medical centers [14].

In particular, data platforms can play a key role in the fields of natural hazard and disaster management and risk resilience by providing processed, organized data, and analyzing the outcomes required for each of the phases of the entire disaster management cycle, such as prevention, mitigation, preparedness, response, and recovery.

2-2 Applications

A number of disaster risk assessment software packages have been developed to analyze the damage caused by various disasters and natural hazards [30–35]. The impact of such disasters may include casualties; damage to buildings, bridges, and other infrastructure; and direct and indirect economic effects. Various studies have investigated the socio-economic impact of hazardous events as well as the direct damage suffered by infrastructure. However, to cope with the effects of disasters, it is necessary to build an appropriate data repository that can handle different types of formats, features, and data management [35, 36].

In particular, two software tools, namely ERGO-EQ [30] and HAZUS-MH [31], have been regarded as the most appropriate tools for assessing the different types of datasets from various disasters. Chai et al. [36] proposed the Korean disaster inventory for earthquake damage estimation by considering different types of data and building a database to estimate the impact of disasters by using social, open, and sensor data. In addition, they developed a distributed data analysis platform that can process massive amounts of data in a short period of time [37].

Ⅲ. Open Data Knowledge Platform

This section presents the entire architecture of the proposed system. Subsequently, its main functions are described after its main components are introduced.

3-1 Architecture

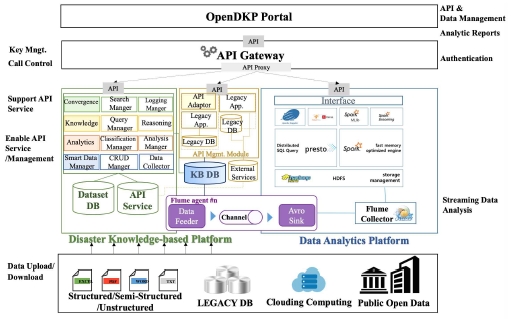

The proposed OpenDKP is designed with a modular platform architecture to provide an open, scalable, and easy-to-use system. The platform contains the four key modules of a scientific open data sharing process, namely collection, processing, sharing and re-use, and analysis, and is implemented using the OpenDKP portal, an API gateway, a knowledge-based platform, and a data analytics platform. The OpenDKP portal was designed around a REST API. All of the core portal functions are available through the API, and users can manipulate data via the web interface, as shown in Figure 3 and Table 1. All the datasets hosted on this platform can be accessed using the REST API. In addition, the retrieved information can be used by external portals through API calls.
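As a rough illustration of dataset access over REST, the following sketch builds a request for a single dataset record and parses a JSON reply. The base URL, the `/datasets/{id}` path, the `format` query parameter, and the response fields are all hypothetical; the actual OpenDKP endpoints are not specified in this paper.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import Request

# Hypothetical base URL; the real OpenDKP endpoints are not given in the paper.
BASE_URL = "https://opendkp.example.org/api"


def build_dataset_request(dataset_id: str, fmt: str = "json") -> Request:
    """Build (but do not send) a GET request for one dataset record."""
    query = urlencode({"format": fmt})
    url = f"{BASE_URL}/datasets/{quote(dataset_id, safe='')}?{query}"
    return Request(url, headers={"Accept": "application/json"}, method="GET")


def parse_dataset_response(body: str) -> dict:
    """Extract the fields a client would typically need from a JSON reply."""
    record = json.loads(body)
    return {"id": record["id"], "title": record.get("title", "")}


# Example with made-up identifiers and a made-up response body.
request = build_dataset_request("earthquake 2017")
sample = parse_dataset_response('{"id": "earthquake 2017", "title": "Sensor log"}')
```

The request is only constructed here, not sent, so the sketch can be adapted to whatever endpoint layout a deployment actually exposes.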

3-2 API Gateway

The API gateway plays a crucial role in the management of security issues, including key management, authentication, authorization, access control, call control, call logging, and service orchestration. A knowledge-sharing portal that uses the API gateway is accessible to external partners or third-party applications. The API gateway supports the management of legacy API services, the control of layered and modular platforms, and reporting, which provides real-time visibility into the internal data activity.
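The gateway responsibilities listed above (key management, authentication, authorization, and call logging) can be sketched as follows. This is a toy, in-memory illustration under assumed names; the class `ApiGateway`, the scope strings, and the status codes chosen are hypothetical and do not describe the actual OpenDKP gateway.

```python
import logging
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("gateway")


@dataclass
class ApiGateway:
    """Toy gateway: API-key authentication, scope-based authorization, call logging."""
    keys: dict = field(default_factory=dict)      # api_key -> set of allowed scopes
    call_log: list = field(default_factory=list)  # (api_key, scope, status) tuples

    def register_key(self, api_key: str, scopes: set) -> None:
        self.keys[api_key] = set(scopes)

    def handle(self, api_key: str, scope: str, backend) -> tuple:
        """Return (status_code, payload) after authentication and authorization."""
        if api_key not in self.keys:
            status, payload = 401, "unknown API key"
        elif scope not in self.keys[api_key]:
            status, payload = 403, f"key lacks scope '{scope}'"
        else:
            status, payload = 200, backend()
        self.call_log.append((api_key, scope, status))
        log.info("key=%s scope=%s status=%d", api_key, scope, status)
        return status, payload


gateway = ApiGateway()
gateway.register_key("partner-key", {"datasets:read"})
ok = gateway.handle("partner-key", "datasets:read", lambda: ["flood-2018"])
denied = gateway.handle("partner-key", "datasets:write", lambda: None)
unknown = gateway.handle("no-such-key", "datasets:read", lambda: None)
```

Every call is recorded regardless of outcome, which is what gives the reporting function visibility into rejected as well as successful requests.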

3-3 Knowledge Management Module

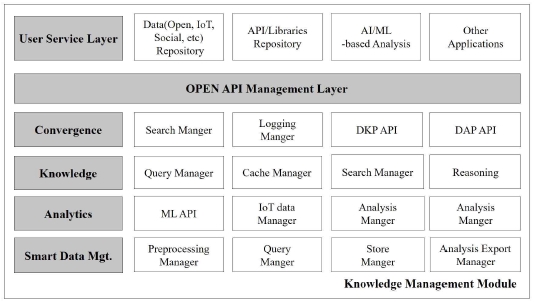

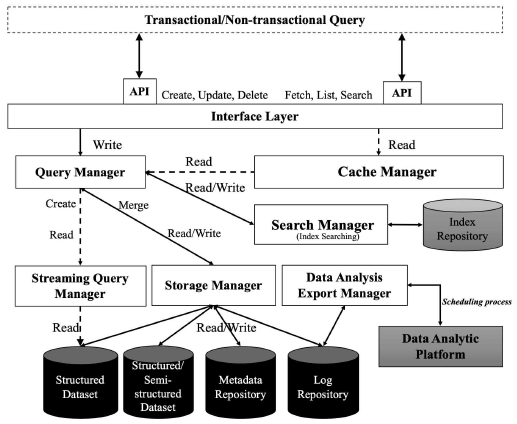

The knowledge management module consists of multiple sub-modules and several sub-layered architectures, such as the smart data management, analytics, knowledge, and convergence layers, as shown in Figure 4.

The smart data management layer consists of a Create, Read, Update and Delete (CRUD) manager, a data collector, and cleansing and storage packages. The role of the smart data management layer can be assigned according to the conditions associated with particular channels on the basis of the data collection and the data features or content types.
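A minimal sketch of how a CRUD manager and a cleansing step might fit together is given below. The cleansing rules (trimming strings, dropping empty fields, requiring an `id`) and the in-memory store are assumptions made for illustration; the paper does not define the actual rules or storage backend.

```python
from typing import Optional


def cleanse(record: dict) -> Optional[dict]:
    """Trim strings, drop empty fields, and reject records without an id."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
        if value not in ("", None):
            cleaned[key] = value
    return cleaned if "id" in cleaned else None


class CrudManager:
    """In-memory stand-in for the storage package (hypothetical)."""

    def __init__(self):
        self._store = {}

    def create(self, record: dict) -> bool:
        cleaned = cleanse(record)
        if cleaned is None or cleaned["id"] in self._store:
            return False
        self._store[cleaned["id"]] = cleaned
        return True

    def read(self, record_id):
        return self._store.get(record_id)

    def update(self, record_id, changes: dict) -> bool:
        if record_id not in self._store:
            return False
        # Re-cleanse the merged record; the id itself cannot be changed.
        self._store[record_id] = cleanse({**self._store[record_id], **changes, "id": record_id})
        return True

    def delete(self, record_id) -> bool:
        return self._store.pop(record_id, None) is not None


store = CrudManager()
created = store.create({"id": "s-01", "type": " seismic ", "note": ""})
rejected = store.create({"type": "no id"})
store.update("s-01", {"unit": "gal"})
```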

The analytics (information) layer handles information retrieval from raw data sources and converts complex data into refined meta-data or compatible usable datasets. Additionally, this layer performs classification or clustering by using machine learning techniques in the knowledge-based platform. This layer consists of a topic classification and analysis manager, while the knowledge management layer is in charge of indexing and reasoning with the given information.

In addition, the principal process of the knowledge management layer enables users to use the analytical results and the inferred knowledge acquired from the preprocessed or raw data.

The convergence layer is responsible for the connections between the analyzed results and other inter-external services or third-party applications. The convergence layer provides users with a deployment dataset of the retrieved knowledge obtained from the lower layers by using open APIs and offers a way to combine datasets and APIs to create new services, such as data mash-up.
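A data mash-up of the kind mentioned above can be illustrated by joining an open dataset with sensor readings on a shared key. The field names (`region`, `shelters`, `value`) and the sample rows are invented for this sketch.

```python
from collections import defaultdict


def mash_up(open_data: list, sensor_data: list, key: str = "region") -> list:
    """Attach the mean sensor value per key to each open-data row."""
    readings = defaultdict(list)
    for row in sensor_data:
        readings[row[key]].append(row["value"])
    merged = []
    for row in open_data:
        values = readings.get(row[key], [])
        mean = sum(values) / len(values) if values else None
        merged.append({**row, "mean_sensor_value": mean})
    return merged


# Made-up rows for illustration only.
open_rows = [{"region": "A", "shelters": 12}, {"region": "B", "shelters": 7}]
sensor_rows = [{"region": "B", "value": 2.0}, {"region": "B", "value": 4.0}]
combined = mash_up(open_rows, sensor_rows)
```

Rows without any matching sensor reading are kept with a `None` value rather than dropped, so the combined dataset still covers every region in the open data.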

Lastly, the user service layer is located in the highest layer of the knowledge management module that is closest to the user. The user service layer plays an important role in handling big data service by using libraries and APIs, which are associated with machine learning methods.

The knowledge management module enables big data analytics to offer scalable services, provides links to other legacy applications, such as external API services and knowledge-based databases, and is responsible for identification tasks in big data analyses.

The task of identifying a given data author is a knowledge-identification process. Thus, it can be devised as a general classification problem depending on the distinguishing features that represent the author’s style [39, 40].
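To make the classification framing concrete, the sketch below assigns a text to the author whose stylistic profile is nearest. The two features (mean word length and mean sentence length) and the nearest-centroid rule are simplifying assumptions chosen for brevity; they are not the features or classifier used in the cited work.

```python
import math
import re


def style_features(text: str) -> tuple:
    """Two crude stylistic features: mean word length and mean sentence length."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not words or not sentences:
        raise ValueError("text must contain at least one word")
    return (sum(len(w) for w in words) / len(words), len(words) / len(sentences))


def train_centroids(samples: dict) -> dict:
    """Average the feature vectors of each author's known texts."""
    centroids = {}
    for author, texts in samples.items():
        vectors = [style_features(t) for t in texts]
        centroids[author] = tuple(sum(col) / len(vectors) for col in zip(*vectors))
    return centroids


def identify_author(text: str, centroids: dict) -> str:
    """Assign the text to the author with the nearest centroid (Euclidean distance)."""
    vector = style_features(text)
    return min(centroids, key=lambda author: math.dist(vector, centroids[author]))


# Invented training texts for two hypothetical authors.
centroids = train_centroids({
    "author_a": ["Go now. Run fast. We see it."],
    "author_b": ["Considerable structural deterioration accompanied prolonged "
                 "inundation throughout metropolitan districts."],
})
predicted = identify_author("Stay low. Be calm.", centroids)
```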

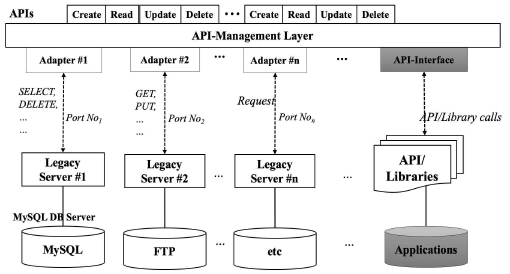

3-4 API Management Layer

The API management layer provides multiple ways of interfacing external services. This layer oversees the “adapter” component as a substitute for the existing legacy applications and supports APIs for mediation services to use the required functions or particular applications.

Figure 5 shows a microservice design architecture that can provide sub-services or applications that can be applied and extended independently of each other. First, the query manager is responsible for queries related to data retrieval and generation and performs the appropriate action according to the type of query being processed. Non-transactional queries have a caching function at the controller level to reduce as much load on the system as possible. The policy regarding caching allows the data provider to take control of the data flow.
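The controller-level caching of non-transactional queries can be sketched with a simple time-to-live (TTL) cache. The class name, the TTL policy, and the choice to clear the cache on every transactional query are assumptions for illustration; the paper does not describe the actual caching policy in detail.

```python
import time


class QueryManager:
    """Caches read-only queries for a provider-defined time-to-live (TTL)."""

    def __init__(self, backend, ttl_seconds: float, clock=time.monotonic):
        self._backend = backend   # callable: query string -> result
        self._ttl = ttl_seconds   # caching policy set by the data provider
        self._clock = clock
        self._cache = {}          # query -> (expiry_time, result)
        self.backend_calls = 0

    def _run(self, query: str):
        self.backend_calls += 1
        return self._backend(query)

    def execute(self, query: str, transactional: bool = False):
        if transactional:
            self._cache.clear()   # a write may invalidate cached reads
            return self._run(query)
        now = self._clock()
        hit = self._cache.get(query)
        if hit is not None and hit[0] > now:
            return hit[1]
        result = self._run(query)
        self._cache[query] = (now + self._ttl, result)
        return result


# A fake clock makes the expiry behavior easy to follow.
fake_time = [0.0]
queries = QueryManager(lambda q: q.upper(), ttl_seconds=10, clock=lambda: fake_time[0])
first = queries.execute("list datasets")
second = queries.execute("list datasets")  # served from the cache
fake_time[0] = 11.0
third = queries.execute("list datasets")   # TTL expired, backend is called again
```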

The search manager supports full-text searches through search engines, such as Elasticsearch and Solr, and indexes all the string-type metadata of the data. The media streaming manager provides a unique access path, in API format, for the uploaded data. For an API request, the authentication values must be transmitted using cookies.
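The idea of indexing only string-type metadata for full-text search can be shown with a small inverted index. This is a pure-Python stand-in written for illustration; it does not use or reproduce the Elasticsearch or Solr APIs, and the dataset identifiers are made up.

```python
import re
from collections import defaultdict


class MetadataIndex:
    """Tiny inverted index over string-type metadata fields."""

    def __init__(self):
        self._postings = defaultdict(set)  # token -> set of dataset ids

    @staticmethod
    def _tokens(text: str) -> list:
        return re.findall(r"\w+", text.lower())

    def add(self, dataset_id: str, metadata: dict) -> None:
        for value in metadata.values():
            if isinstance(value, str):     # only string-type metadata is indexed
                for token in self._tokens(value):
                    self._postings[token].add(dataset_id)

    def search(self, query: str) -> set:
        """Return ids of datasets whose metadata contains every query token."""
        tokens = self._tokens(query)
        if not tokens:
            return set()
        result = set(self._postings.get(tokens[0], set()))
        for token in tokens[1:]:
            result &= self._postings.get(token, set())
        return result


index = MetadataIndex()
index.add("d1", {"title": "Pohang earthquake sensor log", "year": 2017})
index.add("d2", {"title": "Flood damage report", "region": "Pohang"})
both = index.search("pohang")
one = index.search("Pohang earthquake")
none = index.search("2017")  # numeric metadata is not indexed
```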

Finally, the store manager saves the datasets and the multimedia streaming data in a database and can write log files into the database. Figure 6 presents the service architecture of the API management layer.

3-5 Data Analytics Platform

The entire proposed platform not only provides researchers with large scale data processing, but also offers scientific data curation services in the context of statistical analyses.

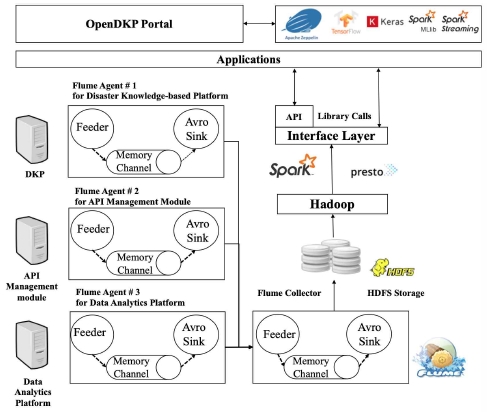

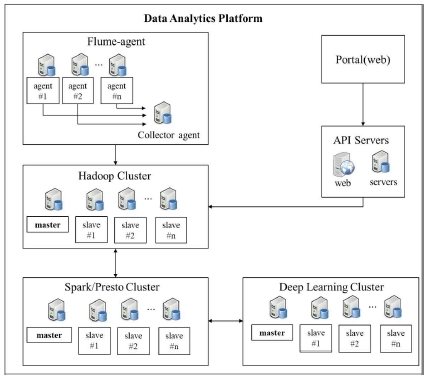

The knowledge-based platform deploys the data analytics platform on Apache Spark running on Apache Hadoop. Spark is a fast, in-memory data processing engine that supports iterative algorithms through in-memory computation, and the platform is designed to support multiple types of real-time event processing with high scalability, high availability, and fault tolerance [39]. This big data framework allows SQL functions, streaming, machine learning, and graph processing, all of which require fast iterative access to datasets, to be performed efficiently.

The major components of the proposed Hadoop stack include the Hadoop Distributed File System (HDFS) [40] and the Apache Spark framework. HDFS is a distributed file system for big data manipulation that supports high availability and fault tolerance. Apache Spark is a big data processing framework built for high-speed cluster computing, ease of use, and sophisticated analytics, allowing users to perform in-memory computations on large clusters.

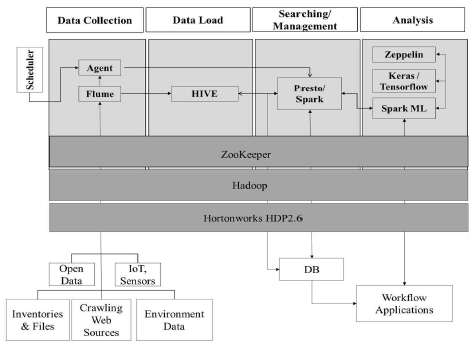

The proposed system also incorporates Presto, an open source distributed SQL query engine that was designed for interactive analytic queries and can operate on data sizes ranging from gigabytes to petabytes [41]. Moreover, the proposed system is designed to deliver outcomes by performing AI and machine learning analyses, in which models learn and improve automatically through experience without being explicitly programmed [42, 43]. Figures 7 and 8 show the architecture of the data analytics platform in detail.
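The kind of interactive analytic query described here can be illustrated with a small aggregate in SQL. The sketch below runs the query against an in-memory SQLite database purely as a local stand-in; it does not use Presto, and the `events` table, its columns, and its rows are invented for the example.

```python
import sqlite3

# In-memory SQLite is used only as a local stand-in for a distributed SQL engine.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (hazard TEXT, region TEXT, damage REAL)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("earthquake", "A", 3.0),
        ("flood", "A", 1.0),
        ("earthquake", "B", 5.0),
        ("flood", "B", 2.0),
    ],
)

# Aggregate the made-up damage values per hazard type.
rows = conn.execute(
    "SELECT hazard, COUNT(*) AS n, AVG(damage) AS mean_damage "
    "FROM events GROUP BY hazard ORDER BY hazard"
).fetchall()
conn.close()
```

The same `GROUP BY` statement is standard SQL, so a query of this shape could in principle be submitted to a distributed engine once the table exists there.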

3-6 Hardware Design

Figure 9 shows the hardware block diagram of the data analytics platform, which handles the data analyses performed via cluster-based big data processing.

Stream processing makes it possible to publish data on the Hadoop and Spark clusters in real time. In these cluster systems, Spark streaming configures a receiver that acts as an Avro sink for Flume, which allows the user to establish multi-streams. Flume can guarantee that the data received by the agent nodes on each domain will be sent to the collector agent at the end of the flow according to policy and priority while the agent node is running. Therefore, data can be delivered to the final destination.

Ⅳ. Discussion

The proposed platform enables users, with the help of researchers from the field of disaster risk, to integrate and collaborate on the collected disaster data and analyses to provide the highest levels of analytical results in disaster management.

Accordingly, the proposed system was designed with real-time analyses in mind, which are widely used in IoT analyses as well as big data analytics. Next, the applications of knowledge-based analysis services are briefly discussed on the basis of simple scenarios.

4-1 Big Data Processing and Analysis

Disasters can occur suddenly without warning. Therefore, it is critical to predict the damage of disasters before severe hazardous events happen as well as to react immediately after the events. Thus, they first need to be investigated, and then the data should be collected considering every type of dataset that comes from the various processes of the disaster management cycle, such as prevention, preparedness, response, and recovery.

Because various types of disasters occur frequently, systems that use data to detect and analyze these disasters are becoming increasingly important for disaster prevention.

According to previous studies [44, 45, 46], both data standardization with respect to the characteristics of structured/unstructured data and database design are already being investigated. Therefore, the proposed system was designed considering various types of data, including social, open, and sensor-gathered data related to each step of the hazardous events of disasters, as shown in Figure 10. On the basis of the previous research [36, 46] on disaster analysis, the proposed system provides a distributed and parallel processing pipeline, allowing for a huge volume of data to be processed in a short period of time.

4-2 Knowledge-based Curation Service

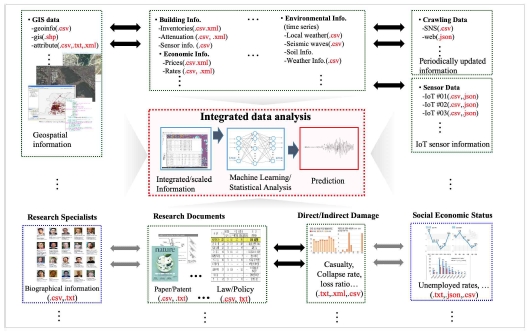

The data analytics platform presented in Section 3 plays an important role in knowledge-based curation services. When using knowledge-based curation or analytic services, it is possible to derive new knowledge or results from certain given conditions by linking with knowledge databases, knowledge extraction and inference, and identification-based services, as well as services based on big data statistics, as mentioned above. Therefore, the application of the knowledge-based analytical service will be briefly presented as an example case, as described in Figure 11.

The core module of the data analytics platform supports three different identification tasks, namely content generator ID, disaster-type identification, and technical terminology identification, through a semi-automatic verification process. This semi-automatic verification process consists of two steps, namely an encompassing automatic identification/extraction process and a manual verification/evaluation process. In the proposed system, however, because of the limitations of the data identification technology, manual processes are used.
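The two-step structure of the semi-automatic verification process can be sketched as an automatic proposal step followed by routing to a manual review queue. The glossary, the suffix heuristic, the confidence scores, and the threshold below are all hypothetical and serve only to show the control flow, not the identification technique of the proposed system.

```python
import re

# Hypothetical glossary; a real system would draw on a knowledge database.
KNOWN_TERMS = {"liquefaction", "aftershock", "storm surge"}


def auto_identify(text: str) -> list:
    """Step 1: propose candidate terms with a crude confidence score."""
    candidates = []
    lowered = text.lower()
    for term in sorted(KNOWN_TERMS):
        if term in lowered:
            candidates.append((term, 1.0))  # exact glossary match
    for word in sorted(set(re.findall(r"\b[a-z]+(?:tion|quake)\b", lowered))):
        if word not in KNOWN_TERMS:
            candidates.append((word, 0.5))  # suffix heuristic only
    return candidates


def route(candidates: list, threshold: float = 0.8) -> tuple:
    """Step 2: accept confident candidates, queue the rest for manual review."""
    accepted = [term for term, score in candidates if score >= threshold]
    review_queue = [term for term, score in candidates if score < threshold]
    return accepted, review_queue


text = "Liquefaction followed the earthquake, prompting an evacuation."
accepted, review_queue = route(auto_identify(text))
```

Setting the threshold above every automatic score sends all candidates to the review queue, which corresponds to the fully manual verification described above.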

The identification of knowledge (e.g., data, contents, and analytical results) and knowledge types and the execution of the verification process with high accuracy are essential tasks in authorship categorization [38]. In addition, the automatic identification and extraction of technical terminology is a crucial challenge in constructing a substantial knowledge-based database in scientific and technological fields.

The knowledge-based analytical module was designed considering the types of open data, IoT sensing data, and simulation results presently available [45]. Figure 11 depicts a representative example case using knowledge-based analysis services. In particular, scholarly big data, which contain information on millions of scholars, research articles, proposed research models, citations, and linked scholarly citation data, can help resolve social issues when analyzed in conjunction with open datasets that are publicly collected and released by the government, IoT sensing data gathered using various types of sensors, and social sensing data periodically crawled from the Web. In addition, scholarly big data can be analyzed along with the results and datasets of previous studies, and are expected to provide important information for developing various social problem-solving models based on AI and machine learning.

4-3 Limitation

It is important to construct a big data environment that takes into account a diverse and wide range of data formats, a huge volume of data, and data management strategies. Besides, various novel data services, including the IoT-based big data processing and knowledge-based curation services mentioned above, can play a vital role in converting raw data into useful knowledge. Most importantly, it is necessary to promote data collaboration between public institutions and the private sector, maintain the quality of data, and ensure the privacy and security of data so that the knowledge hub can be widely used in a reality where reliability and security are the chief concerns. Thus, many people who work in various domains will be able to deal with a variety of social issues by connecting knowledge.

Ⅴ. Conclusion

This study presented an open data knowledge platform that supports the development of the disaster response technology as a knowledge hub and that can be used for risk and disaster response, disaster recovery, and risk assessment studies. The proposed open knowledge-based platform allows for the creation, collection, processing, sharing, and analysis of hazard and disaster data, which can be of any type and can be either structured or unstructured.

Furthermore, in this paper, a novel architecture for an open disaster risk knowledge hub system was introduced. The proposed knowledge platform has a modularized platform architecture, consisting of an open data knowledge portal, an API gateway, a knowledge-based platform, an API management module, and a data analytics platform, all of which were designed to achieve the desired scalability. These modules were designed to provide an open architecture system that is easy to use and extend. Additionally, the proposed platform can reduce the number of redundant implementations of the basic functions and make the management of various components easier. This highly reliable open data knowledge platform can also play a helpful role in supporting researchers and decision makers in the field of disaster risk reduction and management on a variety of scientific issues, helping them to solve many critical problems and providing guidance in the form of a considerable amount of practical advice for the decision makers.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Ministry of Science and ICT (2018R1C1B5084507) and by the Korea Electric Power Corporation (Grant number: R19XO01-04).

References

- [1] Disaster, https://en.wikipedia.org/wiki/Disaster

- [2] What is a disaster, https://www.ifrc.org/en/what-we-do/disaster-management/about-disasters/what-is-a-disaster/

- [3] Disaster risk reduction, https://en.wikipedia.org/wiki/Disaster_risk_reduction

- [4] 2009 UNISDR terminology on disaster risk reduction, https://www.unisdr.org/we/inform/publications/7817

- [5] J. Weichselgartner, P. Pigeon, The role of knowledge in disaster risk reduction, International Journal of Disaster Risk Science, Vol. 6, No. 2, pp. 107-116, 2015. [https://doi.org/10.1007/s13753-015-0052-7]

- [6] E. Carabine, Revitalising evidence-based policy for the Sendai framework for disaster risk reduction 2015–2030: Lessons from existing international science partnerships, PLOS Currents, 2015. [https://doi.org/10.1371/currents.dis.aaab45b2b4106307ae2168a485e03b8a]

- [7] G. Li et al., Gap analysis on open data interconnectivity for disaster risk research, Geo-spatial Information Science, Vol. 22, No. 1, pp. 45-58, 2019. [https://doi.org/10.1080/10095020.2018.1560056]

- [8] L. Spinsanti, F. Ostermann, Automated geographic context analysis for volunteered information, Applied Geography, Vol. 43, pp. 36-44, 2013. [https://doi.org/10.1016/j.apgeog.2013.05.005]

- [9] A. Aitsi-Selmi et al., Reflections on a science and technology agenda for 21st century disaster risk reduction, International Journal of Disaster Risk Science, Vol. 7, No. 1, pp. 1-29, 2016. [https://doi.org/10.1007/s13753-016-0081-x]

- [10] H. Sutanta, A. Rajabifard, I. D. Bishop, An integrated approach for disaster risk reduction using spatial planning and SDI platform, In Proc. of the Surveying & Spatial Sciences Institute Biennial International Conference, Surveying & Spatial Sciences Institute, pp. 341-351, 2009.

- [11] A. Rajabifard, M. E. F. Feeney, Spatial Data Infrastructures: Concept, Nature and SDI Hierarchy, Developing spatial data infrastructures: From concept to reality, pp. 17-40, 2003. [https://doi.org/10.1201/9780203485774.ch2]

- [12] P. H. Hsu, S. Y. Wu, F. T. Lin, Disaster management using GIS technology: a case study in Taiwan, In Proc. of the 26th Asia Conference on Remote Sensing, pp. 7-11, 2005.

- [13] G. Giuliani, P. Peduzzi, The PREVIEW Global Risk Data Platform: a geoportal to serve and share global data on risk to natural hazards, Natural Hazards and Earth System Science, Vol. 11, No. 1, pp. 53-66, 2011. [https://doi.org/10.5194/nhess-11-53-2011]

- [14] S. Balbo et al., A public platform for geospatial data sharing for disaster risk management, International Society for Photogrammetry and Remote Sensing (ISPRS) Archives, Vol. 43, pp. 189-195, 2013. [https://doi.org/10.5194/isprsarchives-XL-5-W3-189-2013]

- [15] M. Y. Cheng, Y. W. Wu, Data Exchange Platform for Bridge Disaster Prevention Using Intelligent Agent, In Proceedings of the 23rd ISARC, pp. 321-326, 2006. [https://doi.org/10.22260/ISARC2006/0062]

- [16] D. K. Von Lubitz, J. E. Beakley, F. Patricelli, ‘All hazards approach’ to disaster management: the role of information and knowledge management, Boyd's OODA Loop, and network-centricity, Disasters, Vol. 32, No. 4, pp. 561-585, 2008. [https://doi.org/10.1111/j.1467-7717.2008.01055.x]

- [17] M. Dorasamy, M. Raman, M. Kaliannan, Knowledge management systems in support of disasters management: A two decade review, Technological Forecasting and Social Change, Vol. 80, No. 9, pp. 1834-1853, 2013. [https://doi.org/10.1016/j.techfore.2012.12.008]

- [18] B. A. Nosek et al., Promoting an open research culture, Science, Vol. 348, pp. 1422–1425, 2015. [https://doi.org/10.1126/science.aab2374]

- [19] Open data, Available: https://en.wikipedia.org/wiki/Open_data#cite_note-1

- [20] J. S. Erickson, A. Viswanathan, J. Shinavier, Y. Shi, J. A. Hendler, Open government data: A data analytics approach, IEEE Intelligent Systems, Vol. 28, No. 5, pp. 19-23, 2013. [https://doi.org/10.1109/MIS.2013.134]

- [21] Open Data Barometer - 2013 Global Report (Open Data Barometer, 2013), Available: http://www.opendataresearch.org/dl/odb2013/Open-Data-Barometer-2013-Global-Report.pdf

- [22] Open Data Barometer - 2015 Global Report (Open Data Barometer, 2015), Available: http://opendatabarometer.org/doc/3rdEdition/ODB-3rdEdition-GlobalReport.pdf

- [23] OECD, Making Open Science a Reality, OECD Science, Technology and Industry Policy Papers, No. 25, OECD Publishing, Paris, 2015.

- [24] Y. C. Jung, D. Suh, H. J. Lee & K. Y. Kim, A trend of the Open Data Platform, Korea Information Processing Society Review, Vol. 23, pp. 53–63, 2016.

- [25] CKAN, http://ckan.org

- [26] Socrata, http://socrata.com

- [27] DKAN, http://docs.getdkan.com

- [28] Dspace, http://www.dspace.org

- [29] Dspace information, https://en.wikipedia.org/wiki/DSpace

- [30] ERGO, http://ergo.ncsa.illinois.edu/?page_id=44

- [31] HAZUS-MH2.1 TM, https://www.fema.gov/media-library/assets/documents/24609

- [32] SYNER-G, http://www.vce.at/SYNER-G

- [33] CAPRA, https://www.ecapra.org

- [34] OpenQuake, https://platform.openquake.org

- [35] S. Chai & D. Suh, A review and analysis of earthquake disaster risk assessment tools and applications, Journal of Digital Contents Society, Vol. 19, pp. 899–906, 2018. [https://doi.org/10.9728/dcs.2018.19.5.899]

- [36] S. Chai, S. Shin & D. Suh, A study on the development of Korean inventory for the multi-hazard risk assessment, Journal of Digital Contents Society, Vol. 18, pp. 1127–1134, 2018.

- [37] S. Chai, S. Y. Jang & D. Suh, Design and implementation of big data analytics framework for disaster risk assessment, Journal of Digital Contents Society, Vol. 19, pp. 771–777, 2018. [https://doi.org/10.9728/dcs.2018.19.5.899]

- [38] J. Kim, S. Lee, D. Suh & K. Kim, A study on the method and system for organization’s name authorization of Korean science and technology contents, Journal of Digital Contents Society, Vol. 17, pp. 555–563, 2016. [https://doi.org/10.9728/dcs.2016.17.6.555]

- [39] M. Armbrust et al., Spark SQL: Relational Data Processing in Spark, Proc. of the 2015 ACM SIGMOD International Conference on Management of Data, pp. 1383–1394, 2015. [https://doi.org/10.1145/2723372.2742797]

- [40] D. Borthakur, The Hadoop distributed file system: Architecture and design, Hadoop Project, https://svn.apache.org/repos/asf/hadoop/common/tags/release-0.16.0/docs/hdfs_design.pdf

- [41] Presto, https://prestodb.io

- [42] Tensorflow, https://www.tensorflow.org

- [43] Keras, https://keras.io

- [44] S. Shin, D. Suh & S. Chai, A Study on the Management of Earthquake Measurement Data for Facility Monitoring, International Conference on Convergence Content, pp. 245–246, 2017.

- [45] S. Chai & D. Suh, Design of Scalable IoT Platform Using Hazard Sensor, Open and Social Data, International Workshop on Social Sensing, pp. 6, 2018.

- [46] D. Suh, J. Lee, S. Chai, S. Shin, C. Navarro & Y. Baek, Development of a Risk Assessment for Korean High-rise Mixed-use Buildings, in Proceedings of the 16th European Conference on Earthquake Engineering, 2018.

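2007: M.S. in Engineering, Korea Advanced Institute of Science and Technology (KAIST)

2014: Ph.D. in Engineering, Korea Advanced Institute of Science and Technology (KAIST)

2018–present: Assistant Professor, School of Convergence & Fusion System Engineering, Kyungpook National University

2015–2018: Senior Researcher, Korea Institute of Science and Technology Information (KISTI)

2014–2015: Research Assistant Professor, KAIST Institute for IT Convergence

2007–2010: Software Development Researcher, HUMAX

※ Research interests: big data, disaster risk analysis, artificial intelligence and machine learning, smart control systems, construction-IT convergence, etc.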