Design and Implementation of an Interworking System for Device Interaction in a Virtual Reality Setting

Copyright ⓒ 2019 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Virtual training systems that use augmented reality (AR), virtual reality (VR), and mixed reality (MR) remove the constraints of the real world and allow safe, efficient training to be repeated. However, because most such systems are designed for a single user and offer only simple repetitive experiences, it is difficult to extend them into training models that involve interaction between users. For multiple users to interact in the same space, each user's HMD (head-mounted display) must be able to identify the other objects, and an interworking system (middleware) is needed to handle this. Building on these underlying technologies, this paper designs a system in which two users in the same space can see each other's movements simultaneously.


Keywords:

Virtual Reality, Augmented Reality, Mixed Reality, Interworking System, Virtual Training System

Ⅰ. Introduction

Virtual reality (VR) refers to a simulated virtual environment. It is a technique that gives users life-like spatial and temporal experiences by delivering sensory stimulation, which means VR is more than simply constructing a virtual space. Recent advances allow VR systems to display the user's current location by tracking the position of the connected HMD and controllers. In a VR-based virtual training system, the user can see the controller inside the HMD and use it to pick up, select, and use objects. However, such single-user training does not meet the needs of real training settings. For multiple users to train in the same place at the same time, VR devices such as the HMD and controller must be able to locate the other users' devices, and the training system must include middleware that controls these functions [1].

In this paper, different devices are connected to a single control system (the simulation engine server) and interconnected through real-time data transmission in a virtual reality environment. Finally, we verify the suitability of this data interworking by connecting clients to the simulation engine server and measuring the frames per second (FPS), CPU operation delay time, and GPU output delay time of the interworking data.

Ⅱ. Related Technology

2-1 Virtual Reality

Virtual reality (VR) refers to a specific environment, situation, or technology, created artificially with a computer, that resembles the real world but is not real. The created virtual environment or situation stimulates the user's five senses and provides spatial and temporal experiences like those of the real world, blurring the boundary between reality and imagination. Beyond simply being immersed, users can interact with objects implemented in virtual reality, for example by issuing operations and commands through real devices. Generally, wearing a head-mounted display (HMD) blocks the external view, and immersion is provided through the screen inside the HMD. In this sense, VR presents existing 3D/4D content as a new kind of space. However, because most content is designed for individual use, its variety is limited. It has also been noted that manipulating the visual sense to create immersion can cause dizziness. Recently, HMD products have ranged from low-cost HMDs that use mobile phones to high-quality, lightweight, wireless HMDs at relatively high prices [2].

2-2 Augmented Reality

Augmented reality (AR) is a branch of virtual reality (VR): a computer graphics technology that synthesizes virtual objects or information into a real environment so that they appear to be part of it. In other words, AR overlays virtual objects on the real world the user is viewing. Although AR uses a virtual environment created by computer graphics, the real environment remains primary; the computer graphics only provide additional information about the real world. By overlaying 3D virtual images on the real image the user is viewing, AR blurs the boundary between the real environment and the virtual screen [3].

VR immerses users so that they cannot see the actual environment. AR, by contrast, mixes the real environment with virtual objects, so the user can still see the real environment while gaining added realism and additional information. For example, when a user looks around with a smartphone camera, information such as the location and phone number of a nearby shop is displayed as a stereoscopic image. Whereas VR displays an environment that does not exist in reality through a display device, AR adds virtual information to the environment the user is currently viewing. For this reason, AR has drawn interest in fields such as advertising, management, maintenance, and medical care.

2-3 Mixed Reality

Mixed reality (MR) technology presents, as a single image, the real world combined in real time with a virtual world that carries additional information. The key difference from augmented reality is interaction: AR simply displays virtual information on top of the real world, whereas MR lets users interact with what they see, manipulate objects, and interact in real time across the real and virtual environments. Full-scale research and development on MR, which combines real and virtual environments, has been under way since the late 1990s. At present, few devices support mixed reality, and they are expensive. Although MR remains largely at the research stage, it is expected to replace virtual reality technology within a few years [4].

2-4 Modeling and Simulation

The academic definition of modeling and simulation (M&S) has not yet been firmly established. As the industrial applicability of M&S has grown in importance alongside the increasing contribution of ICT, there is a growing need for a formal academic definition of M&S concepts, terminology, and scope.

The U.S. Department of Defense (DoD) defines M&S as "An activity that creates a model that expresses systems or entities of interest, phenomena, or processes through physical, mathematical, or logical methods and analyzes them statically and dynamically." In this context, domestic (Korean) research has defined M&S as a time-sequential representation of a system of interest and its operating principles. The National Science Foundation (NSF) highlighted M&S activities as a means of streamlining national R&D and defined M&S from an engineering perspective: according to the NSF, M&S builds models of engineering systems and uses computational methods to predict physical events and responses.

As these definitions show, M&S is primarily a technology that operates in a digital environment. It is closely tied to developments across the computer science fields and is multidisciplinary, being embedded in scientific fields such as computational science. Computational M&S applies not only to engineering and science but also to research in the social sciences, business, medicine, education, and other fields in which dynamic models can be built and experimented with.

When engineering M&S technologies were introduced into the military and private industry, physical models of the real world were replaced by simulated products built in digital space, whose performance could then be predicted. From this point of view, engineering M&S can be defined as "the virtualization of the product design stage, an important step in product development: a technology that can significantly reduce the cost and time of product development by replacing physical product manufacturing and experimental activities with virtual product modeling and analysis functions" [5][6].

2-5 Virtual Training System

A VR training system uses an HMD and VR technology to provide experiences that are difficult to obtain in the real world, such as military training, responses to terrorist attacks, and fire suppression. Conducting such training in the real world raises several problems. First, each session of actual training is expensive. Second, training must be conducted at fixed times. Third, dedicated space must be secured. Finally, there is always a safety risk. Training therefore requires considerable cost, time, and space, and because unexpected safety incidents during training can injure people, it is difficult to train frequently. Fewer training sessions lead to lower proficiency, creating a vicious circle in which trainees cannot perform well in real situations.

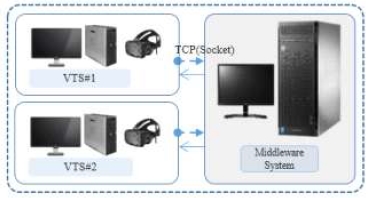

A VR-based training system can solve all of these problems. Building one does require initial cost and space, but less than actual training does. Furthermore, once built, the system can be used for training freely, with no additional cost beyond maintenance, no time restrictions, less space, and relatively high safety. This enables more frequent training and ensures high proficiency. In the early days, display-based simulators were popular, but they supported only one-way instruction. Recently, a variety of training systems have been introduced that combine VR technology to provide immersive, interactive experiences. These have a greater educational effect than earlier simulators but are still limited to single-person training. Because an HMD-based VR device restricts the user's view, it is difficult for multiple trainees to train in one space. Motion capture technology using motion cameras has been introduced to solve this problem, but such systems are too expensive to build and difficult to deploy widely. In this paper, we propose a mutually interactive middleware system for multi-user training, based on real-time data interworking between sensor-equipped HMD devices, as shown in Fig. 1 [7][8].

Ⅲ. Virtual Reality Training System

3-1 Middleware

Middleware is a collective term for software that bridges the gap between diverse hardware, network protocols, application programs, local network environments, PCs, and operating systems. In this paper, middleware is defined as a software system that coordinates the real-world location information of each device among different VR devices [9].

Ⅳ. Mutual Identification System

4-1 Overall System Configuration Diagram

The overall system configuration is shown in Fig. 2 below.

The system consists of a separate operating system that drives each HMD and a middleware system that relays between these operating systems, processing and transmitting the information provided by each HMD. Because the HTC VIVE officially supports SteamVR, the virtual training system was built with Unity, the middleware system was written in C++, and SteamVR was used to connect the HMD devices.

4-2 Keypoints for Interaction

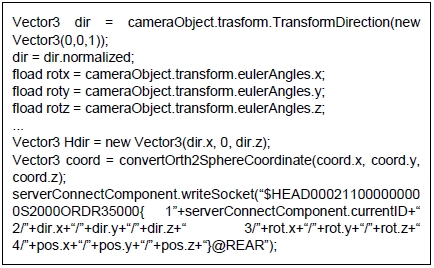

In Unity, HMD object information is represented as three-dimensional vectors. The system processes and uses the object's three-dimensional position (POS), its horizontal (left-right) angle (DIR), and its vertical (up-down) angle (ROT).
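The text names the fields carried for each HMD object but not how they are encoded on the wire. The sketch below shows one way such a record could be serialized for the TCP link. The 24-byte little-endian layout (a `uint32` object identifier followed by five 32-bit floats for POS, DIR, and ROT), the use of degrees, and the names `HmdPose`, `pack_pose`, and `unpack_pose` are all hypothetical, not the authors' format.

```python
import struct
from dataclasses import dataclass
from typing import Tuple

# Hypothetical wire layout (an assumption, not the paper's format):
# little-endian uint32 object id, then float32 x, y, z (POS),
# float32 yaw in degrees (DIR), float32 pitch in degrees (ROT).
POSE_FORMAT = "<I5f"
POSE_SIZE = struct.calcsize(POSE_FORMAT)  # 4 + 5 * 4 = 24 bytes, no padding with "<"


@dataclass
class HmdPose:
    object_id: int
    pos: Tuple[float, float, float]  # POS: three-dimensional position
    dir_deg: float                   # DIR: left-right (yaw) angle
    rot_deg: float                   # ROT: up-down (pitch) angle


def pack_pose(p: HmdPose) -> bytes:
    """Serialize one HMD object record into a fixed-size packet."""
    return struct.pack(POSE_FORMAT, p.object_id, *p.pos, p.dir_deg, p.rot_deg)


def unpack_pose(data: bytes) -> HmdPose:
    """Decode a fixed-size packet back into an HMD object record."""
    object_id, x, y, z, yaw, pitch = struct.unpack(POSE_FORMAT, data)
    return HmdPose(object_id, (x, y, z), yaw, pitch)
```

A fixed-size record keeps framing trivial on a TCP stream. Note that float32 rounds values that are not exactly representable, so a round trip is exact only for values such as 1.5 or -0.25.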

The procedure is as follows.

- HMD object data is transferred to the middleware using TCP socket communication.
- The middleware system processes the data and sends it to the connected HMD operating systems.
- Each HMD operating system applies the data received from the middleware to the corresponding object.
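The three steps above can be sketched as a single relay routine. This is a minimal illustration in Python, not the authors' C++ middleware: it assumes every packet is a fixed-size record (a hypothetical 24 bytes), it leaves the middleware's processing step as an optional callback, and the names `recv_exact`, `relay_once`, and `RECORD_SIZE` are invented for illustration. Because TCP delivers a byte stream rather than discrete messages, the receiver loops until a whole record has arrived.

```python
import socket
from typing import Callable, Iterable, Optional

# Hypothetical fixed record size, e.g. an id plus five 32-bit floats = 24 bytes.
RECORD_SIZE = 24


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """TCP is a byte stream, so keep reading until exactly n bytes arrive."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def relay_once(source: socket.socket,
               clients: Iterable[socket.socket],
               process: Optional[Callable[[bytes], bytes]] = None) -> bytes:
    """Read one record from `source`, optionally process it, and forward it
    to every other connected HMD operating system (never back to the sender)."""
    record = recv_exact(source, RECORD_SIZE)
    if process is not None:
        record = process(record)
    for client in clients:
        if client is not source:
            client.sendall(record)
    return record
```

A real middleware would call `relay_once` whenever a client socket becomes readable, for example from a `selectors` event loop or from one thread per connection.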

The main source code is shown in Fig. 3 below.

Although only two HMD objects were used in this experiment, more than two objects can recognize one another if each object is assigned its own identifier.
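Assigning identifiers lets each HMD operating system keep one remote avatar per identifier, so the same logic serves two users or many. The sketch below assumes a hypothetical `RemoteAvatars` registry on each client: it ignores updates carrying its own identifier (the local HMD is assumed to be driven by local tracking) and creates or updates an entry for every other identifier.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# (POS, DIR, ROT): position vector, left-right angle, up-down angle
Pose = Tuple[Tuple[float, float, float], float, float]


@dataclass
class RemoteAvatars:
    """Hypothetical per-client registry of other users' HMD objects,
    keyed by object identifier."""
    local_id: int
    poses: Dict[int, Pose] = field(default_factory=dict)

    def apply(self, object_id: int, pose: Pose) -> bool:
        # Assumption: the local HMD follows local tracking, so echoes
        # of our own identifier are ignored.
        if object_id == self.local_id:
            return False
        # Create or update the avatar for this identifier; nothing here
        # limits the number of users to two.
        self.poses[object_id] = pose
        return True
```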

Unity updates frame by frame, and the packets this system sends to the middleware are small, so their impact on each frame is slight and users do not experience dizziness or awkwardness.
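The claim that the packets are small can be checked with a back-of-the-envelope calculation. The sketch assumes, hypothetically, a 24-byte payload sent once per frame at 90 Hz, 40 bytes of minimal IPv4 plus TCP headers per packet (no options, link-layer framing ignored), and a middleware that forwards each packet to every other client. The function name `relay_load` is illustrative.

```python
from typing import Tuple


def relay_load(payload_bytes: int, send_rate_hz: float, n_clients: int,
               header_bytes: int = 40) -> Tuple[float, float]:
    """Return (upload per client, total middleware outbound) in bytes/second.

    Assumptions: one packet per send, `header_bytes` of IPv4 + TCP headers
    per packet, and each packet forwarded to the other n_clients - 1 clients.
    """
    per_packet = payload_bytes + header_bytes
    upload_per_client = per_packet * send_rate_hz
    relay_outbound = n_clients * (n_clients - 1) * per_packet * send_rate_hz
    return upload_per_client, relay_outbound


up, out = relay_load(payload_bytes=24, send_rate_hz=90, n_clients=2)
```

Under these assumptions each client uploads 5,760 bytes per second (about 46 kbit/s), and the middleware sends 11,520 bytes per second for two users, which is negligible for a local network. Outbound traffic grows with n(n - 1), so the margin shrinks as users are added, though slowly at these packet sizes. Small, frequent packets over TCP are commonly sent with the `TCP_NODELAY` socket option so that Nagle's algorithm does not delay them; the paper does not say whether this was done.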

4-3 Experimental Process

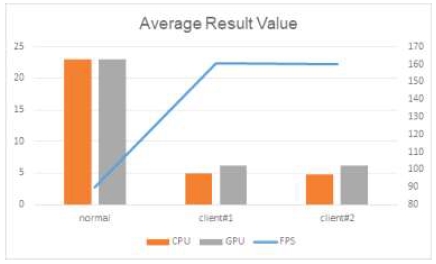

Based on the developed system, the following three experiments were conducted.

The frames-per-second (FPS) measurement verifies how many frames per second are output to the HMD screen. A low value makes the trainee dizzy and makes training difficult to continue; the higher the value, the more natural the training experience. Generally, 90 FPS or more is used as the standard. The CPU operation delay time measures how long the CPU takes to finish the 3D software computation and send the result to the GPU. The GPU output delay time measures how long the GPU takes to actually output the frame to the screen.
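The FPS standard translates directly into a time budget per frame: a rate of f frames per second leaves 1000 / f milliseconds per frame. The two helpers below (names are illustrative) make the arithmetic explicit: at the 90 FPS standard a new frame is needed roughly every 11.1 ms, and at 160 FPS every 6.25 ms.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fps_from_frame_time(frame_ms: float) -> float:
    """Inverse conversion: the frame rate implied by an average frame time."""
    if frame_ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / frame_ms


budget_90 = frame_budget_ms(90)    # about 11.1 ms per frame at the 90 FPS standard
budget_160 = frame_budget_ms(160)  # 6.25 ms per frame at 160 FPS
```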

The measurement program used in each experiment is shown in Table 4 below.

FPS is measured using FCAT_VR_Capture and EnableVROverlay. Measurement is started, the VR content is run, and measurement is stopped when the content finishes. The results are written to a CSV file that can be analyzed with FCAT.py, from which the Unconstrained FPS value is read. The CPU operation delay time is calculated as the difference between Warp End (ms) and Frame Start (ms) in the log file. The GPU output delay time is read from the Avg Frametime entry in the log files. Each experiment was run 20 times per client, and the average value was recorded.
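The CPU operation delay calculation and the averaging over repeated runs can be sketched as follows. The column names `Frame Start (ms)` and `Warp End (ms)` are taken from the text; the assumption that they appear as headers of a plain CSV log, and the helper names, are hypothetical, and the real FCAT output layout may differ.

```python
import csv
import io
from statistics import mean
from typing import Dict, Iterable, List


def cpu_delay_ms(rows: Iterable[Dict[str, str]]) -> List[float]:
    """Per-frame CPU operation delay = Warp End (ms) - Frame Start (ms)."""
    return [float(r["Warp End (ms)"]) - float(r["Frame Start (ms)"]) for r in rows]


def run_average(csv_text: str) -> float:
    """Mean CPU operation delay over all frames of one run (one log file)."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return mean(cpu_delay_ms(rows))


def average_over_runs(csv_texts: Iterable[str]) -> float:
    """Mean of the per-run averages, e.g. over the 20 runs per client."""
    return mean(run_average(t) for t in csv_texts)
```

Averaging per run first and then across runs gives each run equal weight even if the runs captured different numbers of frames.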

4-4 Result

The experimental results are shown in Fig. 4 below.

The results (Table 5) show two things. First, when the content runs through the middleware, the measured delays stay below the reference values. The recommended FPS is above 90 fps, and the measured average was about 160 fps. The recommended CPU operation delay time is below 23 ms, and the measured average was below 5 ms. The recommended GPU output delay time is below 23 ms, and the measured average was 6 ms. Because the delays are below the standard, the middleware can synchronize the clients in real time, giving every trainee the same undelayed information while each client is controlled. Second, the middleware does not reduce FPS. Since FPS stays above the required level, this mutually interactive middleware system lets each user see the other party's position and motion on their own HMD, even though the HMD completely blocks the outside view.
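The comparison with the recommended levels amounts to a simple pass/fail check. The thresholds below are those stated in the text; the measured values are the reported averages, with "less than 5 ms" entered as its 5 ms upper bound. `Criterion`, `check`, and the dictionary keys are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Criterion:
    name: str
    limit: float
    higher_is_better: bool


# Recommended levels as stated in the text.
CRITERIA = (
    Criterion("FPS", 90.0, True),                        # over 90 fps
    Criterion("CPU operation delay (ms)", 23.0, False),  # less than 23 ms
    Criterion("GPU output delay (ms)", 23.0, False),     # less than 23 ms
)


def check(measured: Dict[str, float]) -> Dict[str, bool]:
    """Return, for each criterion, whether the measured value meets it."""
    result = {}
    for c in CRITERIA:
        value = measured[c.name]
        result[c.name] = value > c.limit if c.higher_is_better else value < c.limit
    return result


# Reported averages ("less than 5 ms" entered as its 5 ms upper bound).
reported = {"FPS": 160.0, "CPU operation delay (ms)": 5.0, "GPU output delay (ms)": 6.0}
verdict = check(reported)
```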

Ⅴ. Conclusion

Most virtual reality training systems currently in wide use rely on 360-degree imaging for visual immersion, so only one user can view the content through the connected HMD. To overcome this, it is essential to develop and widely deploy computer-graphics-based virtual reality in which two or more users can interact with each other. In addition, for multiple users to use one piece of content simultaneously, they must be able to identify each other, and the result must be shown through each HMD. The proposed interactive system lets users identify other users in the same space by sharing the information of different objects across separate training systems. The experimental results show that, for interworking data between clients connected to the simulation engine server, FPS, CPU operation delay time, and GPU output delay time are all better than the recommended levels. This system can serve as an underlying technology for further expanding the scope of virtual reality training systems.

References

[1] Z. Pan, A. D. Cheok, H. Yang, J. Zhu, and J. Shi, “Virtual Reality and mixed reality for virtual learning environments”, Computers & Graphics, 30(1), pp. 20-28, (2006).
[https://doi.org/10.1016/j.cag.2005.10.004]

[2] N. E. Seymour, A. G. Gallagher, S. A. Roman, “Virtual reality training improves operating room performance: results of a randomized, double-blinded study”, Annals of Surgery, 236(4), pp. 458-463, (2002).
[https://doi.org/10.1097/00000658-200210000-00008]

[3] J. Mott, S. Bucolo, L. Cuttle, J. Mill, M. Hilder, K. Miller, R. M. Kimble, “The efficacy of an augmented virtual reality system to alleviate pain in children undergoing burns dressing changes: A randomised controlled trial”, Burns, 34(6), pp. 803-808, (2008).
[https://doi.org/10.1016/j.burns.2007.10.010]

[4] L. Kobayashi, X. C. Zhang, S. A. Collins, N. Karim, D. L. Merck, “Exploratory Application of Augmented Reality/Mixed Reality Devices for Acute Care Procedure Training”, Western Journal of Emergency Medicine, 19(1), pp. 158-164, (2017).

[5] D. K. Pace, “Modeling and simulation verification and validation challenges”, Johns Hopkins APL Technical Digest, 25(2), pp. 163-172, (2004).

[6] O. Balci, “A methodology for certification of modeling and simulation applications”, ACM Transactions on Modeling and Computer Simulation (TOMACS), 11(4), pp. 352-377, (2001).
[https://doi.org/10.1145/508366.508369]

[7] N. Gavish, “Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks”, Interactive Learning Environments, 23(6), pp. 778-798, (2015).
[https://doi.org/10.1080/10494820.2013.815221]

[8] M. Zahiri, R. Booton, C. A. Nelson, D. Oleynikov, K. C. Siu, “Virtual Reality Training System for Anytime/Anywhere Acquisition of Surgical Skills: A Pilot Study”, Military Medicine, 183, pp. 86-91, (2018).
[https://doi.org/10.1093/milmed/usx138]

[9] M. Garcia Valls, I. R. Lopez, and L. F. Villar, “iLAND: An Enhanced Middleware for Real-Time Reconfiguration of Service Oriented Distributed Real-Time Systems”, IEEE Transactions on Industrial Informatics, 9(1), pp. 228-236, (Feb. 2013).
[https://doi.org/10.1109/TII.2012.2198662]

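2013: M.S. in Computer Engineering, Hannam University

2018: Ph.D. in Computer Engineering, Hannam University

2013–2018: Lecturer, Talmage College of Liberal Arts, Hannam University

2018–present: Deputy General Manager, Corporate Research Institute, Utobiz Co., Ltd.

※Research interests: virtual reality, augmented reality, Internet of Things, blockchain, disaster networks, etc.

2018: Ph.D. in Engineering, Department of Military Science and Information, Kongju National University

2013: M.S. in Engineering, Department of Defense Weapon Systems M&S, Graduate School of Defense Strategy, Hannam University

1997: B.S., Department of Electronic Engineering, Hannam University

1998–2002: Senior Researcher, Military Mutual Aid Association C&C

2003–2017: Director, Development Department, M&D Information Technology/ARES

2017–present: CEO, Utobiz Co., Ltd.

※Research interests: defense M&S, data interworking, war games, virtual reality, augmented reality, etc.

2012: B.S., Department of Materials Engineering, Shanghai Jiao Tong University, China

2016: M.S. in Engineering, Department of Weapon Systems Engineering, Pukyong National University

2017–2018: Ph.D. student, Department of Weapon Systems Engineering, Pukyong National University

2012–present: Naval Ordnance Officer; in charge of missile simulation system development, Force Support System Program Group

※Research interests: guided weapons, defense M&S, unmanned systems, etc.

2007: M.S. in Engineering, Graduate School of Visual Arts, Kongju National University

2016: Ph.D. in Engineering, ICT Creative Convergence, Graduate School, Busan University of Foreign Studies

2012–2017: Director, IH Tech

2017–present: Director, Utobiz Co., Ltd.

※Research interests: HCI, big data, ICT, virtual reality, augmented reality, interactive media, etc.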