Development of an evaluation index to evaluate the impact of public mobile apps with user participation

Copyright ⓒ 2018 The Digital Contents Society

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Many public agencies have been developing and operating various kinds of public mobile apps as the market penetration rate of smartphones exceeds 90%. The central government wanted to evaluate the impact of those apps to determine whether or not to maintain the services. However, it has been very difficult to find effective and quantitative measures. Therefore, evaluation indexes for public apps have been proposed in this study by analyzing the existing evaluation measures used by the government. Through the analysis, it was found that the increasing rate of use from the users’ perspective and the change rate of processing time from the managers’ perspective can be the representative measures. The measures have been applied to the respective services of the public apps. It was found that the increasing rate of use tends to converge below 5% and the change rate of processing time becomes stable around 45% after 3 years of operation.

초록

국내의 스마트폰 보급률이 90%가 넘어감에 따라 앱을 이용한 업무가 급격히 증가하고 있다. 정부를 비롯한 공공기관에서는 공공서비스의 일환으로 다양한 공공앱을 개발 및 운영하고 있다. 공공앱의 개수 및 예산이 증가함에 따라 정부에서는 공공앱의 성과측정을 통해 지속 또는 폐지를 결정하고자 하고 있으나, 객관적이고 구체적인 평가지표가 부족한 실정이다. 본 연구의 목적은 공공앱의 평가지표를 제시하는 것이며, 이를 위해 정부에서 시행하고 있는 평가지표를 분석하였다. 기존의 평가지표 분석을 통해 이용자 관점의 이용건수 증감률과 관리자 관점의 처리시간 증감률을 대표적인 평가지표로 제시하였다. 국토교통부에서 실제 운영하고 있는 공공앱의 데이터를 사용하여 실제 적용하였으며, 서비스 개시 이후 3년차부터 이용건수의 증감률이 5% 이내, 처리시간의 증감률 역시 45% 내외의 안정적인 값을 보여주는 것으로 나타났다. 향후 동일한 평가지표를 이용하여 타 공공앱의 적용을 통해 적정한 경계값(threshold)을 제시할 수 있을 것으로 판단된다.

Keywords:

Public Mobile App, Evaluation Index, Evaluation of Satisfaction Level, User Participation, Mobile App

키워드:

공공앱, 평가지표, 성과측정, 국민참여, 모바일 앱

Ⅰ. Introduction

The number of smartphone users in Korea surpassed 5 million, the reference point for popularization, in October 2010, and the smartphone penetration rate in Korea exceeded 90% as of 2018. According to a survey by the Pew Research Center in the US, Korea ranks first in the world in smartphone ownership (94%), followed by Israel (83%), Australia (82%), Sweden (80%), and the Netherlands (80%). Smartphones are being applied to various fields beyond their original functions of voice calls and messaging, and their impact on daily life is expected to increase further. People can use smartphones to search for information, perform simple work, use online banking, shop, and even enjoy leisure activities [1]-[5].

In response to these social changes, the government and other public agencies are also launching various public apps to provide public services in mobile environments. As of 2016, 1,768 public apps were operated by the central and local governments, affiliated organizations, public corporations, and municipalities. As of the 1st half of 2018, 895 apps are being operated following the overall performance measurement and reorganization of public apps by the Ministry of the Interior and Safety (MOIS) [6]. Although costs differ, the average development cost is about 10 million won per app. However, the number of users is low relative to the development cost [7]. This is because most of these apps fail to provide up-to-date information: development companies focus only on the initial development and, without ongoing maintenance, do not provide regular updates. In addition, most apps provide low-quality information, while some feature overlapping functions due to the lack of ongoing management. If an app has only a handful of users due to poor maintenance, an objective evaluation should be performed to determine whether to continue or discontinue it. In reality, however, there are no clear evaluation indicators, and most related studies are limited to app development. Because evaluation methods are lacking, public apps are also being developed without forethought, which lowers the credibility of the central and local governments. Of course, some apps should be operated regardless of the results of a performance evaluation, such as apps that provide information to the socially vulnerable, apps directly linked to national safety, and apps that provide information required by law. These are exceptions, however, and the performance of most other apps needs to be evaluated through objective metrics.

Therefore, this study aims to develop an objective and efficient evaluation methodology for public apps to address the aforementioned issues. First, we examine the public app inspection method by MOIS, which is responsible for managing public apps, and propose classification and evaluation indexes to evaluate public apps. In order to verify the field applicability of the proposed evaluation indexes, we analyze the performance of the ‘Road Problem Reporting Service’ application, which was launched in March 2014 by the Ministry of Land, Infrastructure, and Transport (MOLIT).

Ⅱ. Review on Public App Evaluation Methods

2-1 Public App Evaluation and Maintenance

In 2011, about 100 public apps were being operated by 43 government agencies. Since then, the number of apps skyrocketed due to the central and local governments’ intention to improve public participation and safety. As a result, MOIS has performed a major overhaul of public apps since 2016. The purpose was to improve the apps that cause inconvenience to the public and/or waste administrative resources because they are either similar to private services or have much lower utilization rates.

To this end, MOIS performed a complete survey of public apps operated by each department through December 2015. The evaluation criteria included download counts, the lack of security and maintenance budgets, similarity to private services or loss of the development objectives, and integration with other systems or pilot operations. Based on these criteria, 642 out of 1,768 public apps were selected and taken out of service. Specifically, 244 apps (38%) had low usage with fewer than 1,000 downloads, and 128 apps (20%) were difficult to maintain due to the lack of security and maintenance budgets. A total of 77 apps (12%) were similar to private services or no longer served their development objectives, and 193 apps (30%) were integrated into other systems or were pilot operations.

MOIS presented a principle that public apps should be developed only in cases where services are provided only through mobile devices. In addition, in order to reduce the wide range of public apps, new developments were minimized while preliminary reviews were performed to find any overlaps with private services. To measure fair operational performance, public apps had to be registered in the Enterprise Architecture (EA) support system to measure the performance every year using download counts, user satisfaction, and updates. In addition, the Korea Internet & Security Agency (e-Government SW-IoT Security Center) examined and improved the security vulnerabilities of public apps. Table 1 shows the status of public apps by each department as of 2016.

After the evaluation in 2016, MOIS reviewed the operation of public apps a second time through August 2017, closing the loopholes found in the previous results, and decided to keep 510 out of 895 apps in service, improve 215 apps, and discontinue 147 apps. Unlike the previous examination, the final decision was made by notifying the responsible agencies of the performance analysis results based on the evaluation criteria and receiving implementation plans on whether to discontinue or improve the apps. The evaluation criteria consisted of indexes including the cumulative number of downloads, number of users, user satisfaction, and updates. Based on the scores from these indexes, on a 70-point scale, apps with a score of 40 or less were classified as “no longer on service”, apps with a score between 40 and 50 as “improved”, and apps with a score over 50 as “maintained”, with the condition that utilization and function upgrades be carried out for apps whose service period is less than 3 years.
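The score bands described above can be sketched as a small function. This is only an illustration of the thresholds stated in the text, not MOIS's actual procedure: the function name and return labels are hypothetical, the handling of a score of exactly 50 as "improve" is an assumption drawn from the wording "over 50", and the additional condition for apps with less than 3 years of service is not modeled.

```python
def classify_app(score: float) -> str:
    """Map an evaluation score on the 70-point scale to a decision.

    Thresholds follow the text: 40 or less -> discontinue,
    above 40 up to 50 -> improve, above 50 -> maintain.
    The function name and labels are hypothetical.
    """
    if not 0 <= score <= 70:
        raise ValueError("score must be between 0 and 70")
    if score <= 40:
        return "discontinue"
    if score <= 50:  # assumption: exactly 50 falls in the "improve" band
        return "improve"
    return "maintain"


if __name__ == "__main__":
    for s in (35, 40, 45, 50, 51, 70):
        print(s, classify_app(s))
```

Because such a rule depends only on download-based and update-based scores, an app with exclusive data would always land in the top band, which is the limitation discussed later in Section 3-1.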

As a result of the government’s efforts to evaluate, maintain, and discontinue public apps, the number of public apps fell by roughly half, from 1,768 in 2016 to 895 as of 2017. In terms of operating costs, the cumulative investment cost of public apps declined by 13% from 92 billion won in 2016 to 80 billion won in 2017, indicating that the performance measurement and management of public apps have had some effect. In particular, 111 new apps were developed in 2017, down 37% from 175 in 2016, slowing the growth of public apps. The budget for new development also decreased by 4.6 billion won, from 9.7 billion won in 2016 to 5.1 billion won in 2017. In addition, the average number of downloads per public app increased by 61% from 97,000 in 2016 to 156,000 in 2017, and the average number of users increased by 79% from 19,000 in 2016 to 34,000 in 2017. Of course, the number of downloads and users cannot be directly linked to the usage of the apps. By measuring the performance of public apps, however, it became possible to consolidate public apps, such as multiple similar apps within one agency or similar apps from higher-level and lower-level agencies.

2-2 Need of Evaluation

With the widespread use of smartphones, the use of public apps has exploded within each department of the central and local governments. While this growth has made it more convenient for people to handle government and municipal affairs through apps, it has also produced a public-sector culture of creating apps without forethought or consideration of duplication and ripple effects. In particular, each local government created its own public app for areas that require direct communication with local residents, such as administrative civil affairs and tourism, which resulted in the launch of similar apps without unique characteristics and drew criticism about wasted government budgets. There was also criticism that the public sector was monopolizing app development and hindering technology development in the private sector, as some areas should be developed and operated from ideas generated in the private sector. In fact, according to the “Report on the status of duplicate public/private apps and improvement plans” announced by the Korea Institute of Start-up and Entrepreneurship Development in 2014, the rate of duplicate apps reached 40.6% [9].

To address these problems, the government is constantly evaluating whether to maintain or discontinue public apps through strict performance measures. Public apps are an important means for people to easily obtain information or communicate with government agencies, but because they require the budget and effort of the central and local governments, the evaluation methodology used to decide whether to maintain or discontinue them should be fair. However, the evaluation by MOIS was performed internally and therefore cannot avoid criticism for lacking objectivity. Above all, since the evaluation criteria change every year, the fairness of the evaluation is also in question. Moreover, because it is difficult to predict usage in the early stages of service (although preliminary deliberation is possible), evaluation indexes that apply during the actual operation period are required. It is also necessary to review whether it is advisable to measure performance with simple quantitative standards for public apps that play different roles in each field.

Therefore, this study tries to develop objective indexes for evaluating public apps in Korea. Public apps are classified in terms of their purpose, target of development, and data limitations. Furthermore, because not all public apps can be evaluated using the same evaluation indexes, we classified the types of public apps and selected the types that need to be evaluated. We reviewed previous public app methodologies to develop objective evaluation indexes, and presented the Measurement of Effectiveness (MOE) from the perspective of users and managers to evaluate the effectiveness of public apps. In addition, the developed evaluation index was applied to an actual public app in the field of road traffic to analyze its field applicability through a case study.

Ⅲ. Review on Previous Evaluation Methodology

3-1 Characteristics & Classification of Public Apps

Numerous apps are being created and distributed in various fields by the private sector. In areas that cannot be covered by the private sector due to the use of public data, the central and local governments and public agencies are producing, distributing, and operating apps for each specialized field. Public apps can be classified into three types according to their characteristics. The most common type is Type I, which provides information in a specific field, such as the ‘National Law Information’ app by the Ministry of Government Legislation and the ‘Patent Information Search’ app by the Korean Intellectual Property Office. The scope of Type I is not limited to law and patents but also covers information pertaining to traffic, taxation, tourism, and the economy. However, there is criticism that interactive communication is not possible because the contents are biased toward certain areas, such as the central government’s policy guidance and travel information by local governments [7].

Interactive communication between public services and the general public is most active in Type II (public participation) apps, in which people directly report the matters they want the public sector to solve and receive feedback from public officials. Examples are the ‘Reporting Daily Life Complaints’ app by MOIS, the ‘Road Problem Reporting Service’ app by MOLIT, and the ‘Safety Stepping Stone’ app by the National Fire Agency. While Type I simply provides data, Type II resolves real-life problems, and also identifies recurring problems and suggests new policies.

Finally, Type III apps provide information and convenience to the general public using data from government and public agencies, such as the ‘Korail Talk’ app by the Korea Railroad Corporation and the ‘Expressway Traffic Information’ app by the Korea Expressway Corporation. In fact, according to a survey performed by MOIS in 2018, most of the apps at the top of the list of cumulative downloads of public apps are of this type, such as ‘Korail Talk’ by the Korea Railroad Corporation and the ‘Hometax Mobile App’ by the National Tax Service. Table 2 shows the classification of public apps by operation, service area, and typical applications.

Among the three types of public apps, Type I simply provides information in a specific field, and hence it is difficult to evaluate user satisfaction. Although the quality of the provided information can be evaluated, this is difficult in practice because the users who wish to receive information have a wide variety of needs. Type III apps, which are developed for the convenience of users, naturally attract many users because of their monopoly on data. If metrics such as the number of users or downloads are adopted, there is simply no reason to compare Type III with other public apps.

Therefore, we propose evaluation criteria for Type II (public participation) apps, which facilitate communication between users and public officials. Although Type I and III apps also need to be evaluated, the provision of information in certain fields needs to be maintained to guarantee the public’s right to know unless usage declines sharply. In addition, as mentioned above, because apps made for the convenience of the general public use data held exclusively by public agencies, it would be unfair to evaluate them using the same standards as other apps. In fact, according to the survey on the performance of public apps performed by MOIS in 2018, some public apps with exclusive information or exclusive service areas received a perfect score of 70 points. As shown in Table 3, apps that provide users with exclusive information or information about an exclusive service area, such as those of the Korea Expressway Corporation, will always receive a high score when the evaluation method is based on the number of downloads and users.

3-2 Development of Evaluation Criteria

The number of downloads and the number of updates are the most commonly used indicators for evaluating the performance of public apps. To illustrate, in 2015, MOIS presented indicators such as fewer than 1,000 downloads and no updates for over 1 year as part of its measures to improve mobile apps. These criteria are the most common standards for evaluating public apps in terms of usage and management, and they seem applicable when the purpose is to decide whether to maintain or discontinue an app. On the other hand, these criteria are inappropriate for evaluating the efficiency of public apps because the number of downloads does not correspond to the actual frequency of use, while the provision of updates is essential in terms of efficiency.

Therefore, this study defines the purpose of evaluating public apps as evaluating the efficiency of the app itself, and presents the evaluation criteria accordingly. As mentioned in the previous section, the targets are Type II (public participation) public apps. Type II apps basically recognize and report specific complaints through the app. They require indexes from the perspective of the user and manager since the system requires constant communication between people and central/local governments. Table 4 shows the items that can be used as evaluation indexes for Type II public apps.

From the user’s perspective, the download count is an index of how many people downloaded and installed the app, facilitating the usage evaluation. However, the download count does not reflect whether or not the app was eventually deleted. Moreover, even if the app was installed it is difficult to know whether it is actually used. Recently, the MOIS divided the download count into cumulative downloads, downloads in the past year, and the number of actual users. However, it is still unclear whether the download count directly leads to the actual frequency of use.

For Type II apps, unlike Type I apps, the number of uses (number of reports) is meaningful as an evaluation index. The number of uses of Type I apps is not very meaningful because there is no data on how much information the user has acquired. In contrast, the number of uses of Type II apps can be a valid evaluation index because actual users report complaints by providing their personal information. Review ratings are the user ratings shown on the app installation screen of each store, which, like the download count, are an easy and simple way to evaluate usage. However, because the number of people who provide ratings is very small compared to the download count, this method is inappropriate as the results may be biased.

This study presented the evaluation convenience, data acceptability, and reliability as detailed indexes to determine whether each evaluation index is suitable for measuring the efficiency of public apps. Therefore, in terms of classifying the evaluation indexes from the user’s point of view, the download count is quantitative, which is excellent for evaluation convenience. While the download count is also easy to access as the numbers are presented in each store, the reliability of the evaluation results is poor due to the inconsistency with the actual users as mentioned above. The number of usage (number of reports) is not presented in the app stores, but can be presented by each department that maintains the system. The reliability of the results is considered high as quantitative figures are presented for the usage. On the contrary, the review ratings are very good in terms of evaluation convenience and data acceptability, but there is a high possibility of bias due to the lack of sufficient samples.

When the evaluation indexes from the manager’s perspective are assessed in the same way, the processing rate is acceptable in terms of evaluation convenience and data acceptability. However, the processing rate will eventually reach 100% over time due to the nature of the civil complaint process. That is, it is inappropriate for evaluating public apps on a six-month or one-year basis because the processing rate increases over time. Most civil complaints have a maximum processing term of 7 or 14 days, and if long-term processing is required, post-processing is performed according to the budget and timing after the response has been completed. Furthermore, because the processing rate continues to change depending on when it is queried, it cannot be an appropriate evaluation index.

The resolving time is an index of how quickly complaints have been processed compared to the number of reports received. It is an index to assess the efficiency of public apps, even though there may be some differences depending on the types of complaints considering the evaluation convenience, data acceptability, and reliability. The update rate is an index that can be used to determine how much effort has been put into improving the functions of the app, and it is actually an index included in the evaluation criteria of MOIS. Although the update rate is convenient to evaluate the maintenance of the app, it is difficult to make a quantitative evaluation because the number of updates is not proportional to the total amount of functional improvement. In addition, the efficiency relative to budget investment, differentiation from other apps, and public interest can be presented as evaluation indexes, but were excluded due to subjective standards or difficulties in quantitative assessment. The details for each evaluation index are as follows.

Ⅳ. Field Applicability Test

4-1 Evaluation Index Segmentation

In order to evaluate the performance of Type II public apps, this study selected representative evaluation indexes from the perspective of the user and manager. The selected indexes are the number of uses (number of reports) from the user’s perspective and the resolving time from the manager’s perspective. However, it is difficult to apply the absolute figures of both indexes directly to the evaluation. For example, if one app has 100 uses per month and an average resolving time of 10 days, while another app has 200 uses per month and an average resolving time of 7 days, it is difficult to determine which app performed better. The purpose of evaluating the performance of public apps is not only to assess the status and performance of the apps, but also to identify apps that lack usage and ultimately discontinue them. For this purpose, a simple comparison of absolute figures is not a meaningful way of evaluation.

Therefore, this study presents indexes applicable in the actual evaluation stage using the two evaluation indexes derived above, which are the number of usage and the resolving time. First, the number of usage from the user’s perspective is defined as the CIR (Contemporary report Increasing Ratio), and is calculated as the rate of increase compared to that of the previous year of service. The resolving time from the manager’s perspective is defined as the CRR (Contemporary Resolving time Ratio), and is calculated as the reduction of resolving time compared to that of the previous year of service.
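The two ratios can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions rather than the authors' implementation: the text defines CIR as the rate of increase in reports and CRR as the reduction in resolving time, each relative to the previous year of service, so the exact formulas, the sign convention for CRR (positive meaning faster resolution), the function names, and the sample figures below are all assumptions made for illustration.

```python
def cir(reports_prev: float, reports_curr: float) -> float:
    """Year-over-year rate of increase in the number of reports, in percent.

    Assumed formula: (current - previous) / previous * 100.
    """
    if reports_prev <= 0:
        raise ValueError("previous-year report count must be positive")
    return (reports_curr - reports_prev) * 100.0 / reports_prev


def crr(time_prev: float, time_curr: float) -> float:
    """Year-over-year reduction in mean resolving time, in percent.

    Assumed formula: (previous - current) / previous * 100, so a positive
    value means complaints were resolved faster than in the previous year.
    """
    if time_prev <= 0:
        raise ValueError("previous-year resolving time must be positive")
    return (time_prev - time_curr) * 100.0 / time_prev


if __name__ == "__main__":
    # Hypothetical figures, not taken from the study's data.
    print("CIR:", cir(reports_prev=1000, reports_curr=770), "%")
    print("CRR:", crr(time_prev=10.0, time_curr=7.0), "%")
```

Expressing both indexes relative to the app's own previous year avoids the problem of comparing absolute figures across apps of different scale.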

4-2 Application

For the case analysis of the public app evaluation, we applied the evaluation methodology to the ‘Road Problem Reporting Service’ app by MOLIT in the field of road traffic. This app provides a service through which people can easily report road inconveniences anytime and anywhere using smartphones. When road inconveniences such as pavement damage and fallen objects are reported on any kind of road, including expressways, national roads, and local roads, a one-stop service is provided by the designated mobile maintenance team, which processes the report within 24 hours and notifies the reporter of the result. Since the launch in March 2014, the download count reached 30,000 by 2018, and the number of reported cases exceeded 30,000.

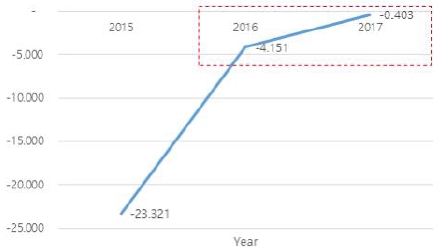

First, as a result of analyzing the CIR from the user’s point of view, the value of CIR was -23% in 2015 based on when the service was launched (2014), and was -4% and -0.4% in 2016 and 2017, respectively, indicating no significant change. The annual trend of CIRs is shown in Fig. 2.

The -23% CIR, which compares performance between 2014 and 2015, is attributed to the fact that publicity and users were concentrated around the launch of the service, as is typical of apps. In 2016 and 2017, the rate of change was stable to within 5%; here the absolute value of the ratio, rather than the (+) or (-) sign of the CIR, is what matters. The stability of the rate of change not only represents steady demand for the public app being developed and serviced, but is also linked to the number of downloads presented as one of the previous public app evaluation indexes. As the public app used in this analysis was launched in 2014, the number of samples was limited. However, considering that most public apps have been launched since 2010, the CIR can be used as an important index as data accumulates.

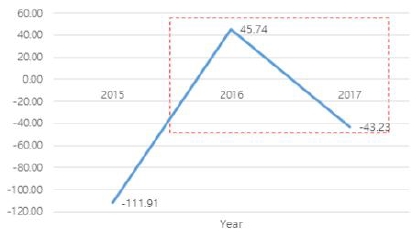

As a result of analyzing the CRR from the manager’s point of view, the CRR was -111% in 2015 based on when the service was launched (2014), and was 45.7% and -43.2% in 2016 and 2017, respectively, showing an increase and decrease. Fig. 3 shows the values of CRR for each year.

As with the CIR above, the CRR in 2015 was as large as -111% due to the increase in the number of complaints reported when the service was launched. However, the rate of change stayed at about ±45% in 2016 and 2017 and was thus relatively stable. In addition, the absolute value, that is, the size of the ratio, rather than the (+) or (-) sign of the CRR, is important.

In this study, the public app selected as the case study for field applicability is the ‘Road Problem Reporting Service’ app, which has been in service since 2014. The two indexes presented in the methodology, CIR and CRR, show a significant change in the first year after the service was launched, but stable figures afterward. Therefore, the evaluation indexes proposed in this study are applicable from the third year after launch, and the appropriate range for each rate of change can be determined according to the overall evaluation results of public apps.
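The stabilization described above can be checked with a short sketch that uses the CIR and CRR values reported for the case study. The helper function and the threshold values (5 for CIR, 46 for CRR) are illustrative assumptions: the study does not fix thresholds, and 46 is chosen here only because the reported 45.7% slightly exceeds 45.

```python
# Values reported in the case study, in percent, keyed by year.
CIR = {2015: -23.0, 2016: -4.0, 2017: -0.4}
CRR = {2015: -111.0, 2016: 45.7, 2017: -43.2}


def first_stable_year(ratios: dict, threshold: float):
    """Return the earliest year from which every |ratio| stays within
    the threshold through the last observed year, or None if no such
    year exists. Hypothetical helper, not part of the study."""
    stable_from = None
    for year in sorted(ratios):
        if abs(ratios[year]) <= threshold:
            if stable_from is None:
                stable_from = year
        else:
            stable_from = None  # stability broken, start over
    return stable_from


if __name__ == "__main__":
    # Thresholds are illustrative assumptions, not proposed cut-offs.
    print("CIR stable from:", first_stable_year(CIR, threshold=5.0))
    print("CRR stable from:", first_stable_year(CRR, threshold=46.0))
```

With these inputs both indexes are stable from 2016, the third year of service for an app launched in 2014. Only the absolute value is compared, in line with the observation that the size of the ratio matters more than its sign.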

Ⅴ. Conclusion

With the rapid development of information technology (IT), most IT advances are being driven by the private sector, and the public sector can be seen following this trend in many fields. In smartphone applications in particular, many apps are developed and distributed without differentiation from other apps, so their user numbers drop rapidly and the services are eventually terminated. In such an environment, if public apps developed with tax funds are used only in specific fields, or if budgets are wasted due to the lack of routine maintenance such as updates, the development effort becomes a great waste for the nation as well.

In this regard, we reviewed previous public app evaluation methods in order to evaluate public apps objectively, and analyzed and presented evaluation indexes according to a classification by the characteristics of public apps. In particular, this study analyzed the appropriate evaluation indexes for Type II (public participation) apps, in which the central and local governments constantly interact with people, and presented two evaluation indexes, CIR and CRR, from the perspective of the user and manager, respectively. The proposed evaluation indexes were analyzed using data from a public app in service. As a result, it was found that CIR was stable to within 5% from the third year after the launch of service, and CRR also showed a stable value of about 45%. Of course, 5% and 45% cannot be absolute evaluation values, and other values may be obtained when the indexes are applied to other public apps. The proposed evaluation indexes are representative indexes applicable to Type II public apps. A reasonable threshold for each index can be presented by analyzing the results of other Type II public apps.

By evaluating public apps through the evaluation indexes proposed in this study, we expect that the government will be able to discontinue the public apps with low public use due to poor maintenance, and consistently develop public apps with high usage in the future. In addition, if we avoid developing public apps that are far from meeting public demand and develop highly used public apps considering content quality and usability, the satisfaction and reliability will be improved for all public apps related to national facilities.

References

- Jeong Yeop Lee, Young Soo Ryu, Jeong Hee Hwang, “Development of Guidance App for Public Transportation”, Journal of Digital Contents Society, 18(1), p115-121, (2017). [https://doi.org/10.9728/dcs.2017.18.1.115]

- Han-Seob Kim, Jieun Lee, “Virtual Walking Tour System”, Journal of Digital Contents Society, 19(4), p605-613, (2018).

- Dong-Hee Shin, Yong-Moon Kim, “Activation Strategies of the Disaster Public-Apps in Korea”, The Korea Contents Association, 14(11), p644-656, (2014). [https://doi.org/10.5392/jkca.2014.14.11.644]

- Yu-mee Chung, Jong-Hoon Choe, “A Co-family Managerial Photo Share Mobile App UI Development”, The Korea Contents Association, 14(4), p29-36, (2014). [https://doi.org/10.5392/jkca.2014.14.04.029]

- Dae-Ho Byun, “Development of a Sales Support Application Based on E-Business Cards”, The Korea Contents Association, 18(5), p464-471, (2018).

- MOIS, “No More Duplicate Public Apps”, Public Relation Report, (2016).

- Hee Jung Cho, Seung Hyun Lee, “The Current Status and Developmental Strategy of Public Applications”, National Assembly Research Service, 141, (2011).

- MOIS, “No More Useless Public Apps”, Public Relation Report, (2018).

- http://www.munhwa.com/news/view.html?no=2014071401031624098002

About the Authors

2016: Ph.D. in Urban Planning at Seoul National University

2001: MS in Transportation Engineering at Hanyang University

1999: BS in Transportation Engineering at Hanyang University

2001~ present: Senior Researcher, Korea Institute of Civil Engineering and Building Technology

※ Areas of Interest: Road Safety, Bicycles, Personal Mobility, Mobile App, Road Facilities

2011: Ph.D. in Civil Engineering at Univ. of California, Irvine

2000: MS in Urban Engineering at Yonsei University

1998: BS in Urban Engineering at Yonsei University

2011~ present: Senior Researcher, Korea Institute of Civil Engineering and Building Technology

※ Areas of Interest: Advanced Transportation, Self-driving System, C-ITS, Road Safety